GridDB operation control guide

Revision: 2207

Table of Contents

- 1 Introduction

- 2 Service

- 3 Integrated operation control GUI (gs_admin)

- 4 Cluster operation control command interpreter (gs_sh)

- 4.1 Overview

- 4.2 Using gs_sh

- 4.3 Definition of a GridDB cluster

- 4.3.1 Definition of node variable

- 4.3.2 Definition of cluster variable

- 4.3.3 Defining the SQL connection destination of a cluster

- 4.3.4 Definition of a user

- 4.3.5 Definition of arbitrary variables

- 4.3.6 Displaying the variable definition

- 4.3.7 Saving a variable definition in a script file

- 4.3.8 Executing a script file

- 4.4 GridDB cluster operation controls

- 4.4.1 Cluster status

- 4.4.2 Starting a node

- 4.4.3 Stopping a node

- 4.4.4 Batch entry of nodes in a cluster

- 4.4.5 Batch detachment of nodes from a cluster

- 4.4.6 Node entry in a cluster

- 4.4.7 Detaching a node from a cluster

- 4.4.8 Adding a node to a cluster

- 4.4.9 Displaying cluster status data

- 4.4.10 Displaying configuration data

- 4.4.11 Displaying node status

- 4.4.12 Displaying event log

- 4.5 Data operation in a database

- 4.6 Database management

- 4.7 User management

- 4.8 Container management

- 4.9 Other operations

- 4.10 Options and sub-commands specifications

- 5 Operating commands

- 5.1 Command list

- 5.2 Common functions of operating commands

- 5.3 Points to note during use

- 5.4 Starting/stopping a node

- 5.5 User management

- 5.6 Cluster management

- 5.6.1 Joining a cluster configuration

- 5.6.2 Leaving a cluster configuration

- 5.6.3 Stop all clusters

- 5.6.4 Getting cluster configuration data

- 5.6.5 Getting node status

- 5.6.6 Adding a node to a cluster

- 5.6.7 Manual failover of a cluster

- 5.6.8 Getting partition data

- 5.6.9 Increasing the no. of nodes of the cluster

- 5.6.10 Set up autonomous data redistribution of a cluster

- 5.6.11 Controlling the checkpoint

- 5.7 Log data

- 5.8 Backup/restoration

- 5.9 Maintenance

- 6 Export/import

- 6.1 Overview

- 6.2 Configuration of export/import execution environment

- 6.3 Export function

- 6.3.1 Specifying process target

- 6.3.2 Specifying the output format of a row data file

- 6.3.3 Specifying the output configuration of container data file

- 6.3.4 Specifying the output destination

- 6.3.5 Specifying the number of parallel executions

- 6.3.6 Test execution function

- 6.3.7 Error continuation specification

- 6.3.8 Other functions

- 6.4 Import function

- 6.5 Command/option specifications

- 6.6 Format of container data file

- 7 Web API

- 8 Long term archive

- 9 Cluster configuration method other than multicast method

- 10 Annexes

1 Introduction

1.1 Aim & composition of this manual

This manual describes the operating functions of GridDB.

It is intended for system designers and system administrators, who are responsible for the construction and the operation management of GridDB systems, respectively.

The following subjects are covered in this manual.

- Service
  - This section explains the GridDB service, which is executed automatically during OS start-up.

- Integrated operation control GUI (gs_admin)
  - This section explains the web-based integrated operation control GUI (gs_admin), which integrates the operating functions of a GridDB cluster.

- Cluster operation control command interpreter (gs_sh)
  - This section explains the command interpreter (gs_sh), which provides GridDB cluster operation control functions and data operations.

- Operating commands
  - This section explains the various operating commands of GridDB.

- Export/import
  - This section explains the export/import tools.

- Web API
  - This section explains the Web API, which can register rows, acquire rows, and execute SQL SELECT statements on a GridDB cluster.

1.2 Explanation of terms

This section explains the main terms used in the GridDB description.

| Term | Meaning |
|---|---|
| Node | An individual server process that performs data management in GridDB. |
| Cluster | A single node or a set of nodes that together perform data management in an integrated manner. |
| Partition | Logical area where data is stored. It exists only within GridDB and is not directly visible to the user. |
| Partition group | A group of several partitions. It is also the data unit in the file system when data is persisted to disk. |
| Row | A chunk of data managed in GridDB, consisting of a key and multiple values. |
| Container (table) | Data structure serving as the interface with the user; it manages a set of rows. It is called a container when operated with the NoSQL I/F, and a table when operated with the NewSQL I/F. There are 2 types: collection (table) and timeseries container (timeseries table). |
| Collection (table) | One type of container (table) that manages rows having a general key. |
| Timeseries container (timeseries table) | One type of container (table) that manages rows having a timeseries key. It has special functions for handling timeseries data. |
| Master node | The node that performs cluster management. |
| Follower node | All nodes in the cluster other than the master node. |
| Owner node | Among the replicated containers, the node that records the location of the master container. |
| Number of nodes constituting a cluster | Number of nodes that can join the cluster. |
| Number of nodes already participating in a cluster | Number of nodes that have joined the cluster. |
| Stable state | Cluster state in which the number of nodes already participating in a cluster equals the number of nodes constituting a cluster. |

The limits on the number of characters and values of data used in GridDB are as follows.

| Item | Details | Remarks |
|---|---|---|
| Connection server | No maximum value specified | Limits such as 2048 bytes exist depending on the Web server and other system dependencies. |
| Port | 0-65535 | |
| User name | Maximum 32 bytes. 1 or more ASCII alphanumeric characters and "_" (underscore). "_" (underscore) and numbers cannot be specified as the lead character. Not case-sensitive. | Linux system-dependent |
| Password | No maximum length specified. | Linux system-dependent |
| Cluster name | Maximum length 64 characters. 1 or more ASCII alphanumeric characters and "_" (underscore). "_" (underscore) and numbers cannot be specified as the lead character. Not case-sensitive. | Dependent on GridDB specifications |
| Path | Maximum 1023 bytes | Linux system-dependent |
| File name | Maximum 255 bytes | Linux system-dependent |
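The naming rules in the table can be checked before a name is used. The following Python sketch is hypothetical (these functions are not part of GridDB); it assumes that the allowed characters are exactly ASCII letters, digits and "_", and it does not cover the system-dependent limits on passwords, paths and file names.

```python
import re

# Assumption: the allowed characters are exactly ASCII letters, digits and "_".
_ALLOWED = re.compile(r"[A-Za-z0-9_]+")


def _valid_name(name: str) -> bool:
    """Check the character rules shared by user names and cluster names."""
    if not _ALLOWED.fullmatch(name):
        return False  # empty, or contains a character outside the allowed set
    # Neither "_" nor a digit may be the lead character.
    return not (name[0] == "_" or name[0].isdigit())


def is_valid_cluster_name(name: str) -> bool:
    # Cluster names: maximum length of 64 characters.
    return len(name) <= 64 and _valid_name(name)


def is_valid_user_name(name: str) -> bool:
    # User names: maximum 32 bytes. Allowed characters are ASCII,
    # so the character count equals the byte count.
    return len(name) <= 32 and _valid_name(name)


def same_name(a: str, b: str) -> bool:
    # Names are not case-sensitive, so compare them case-insensitively.
    return a.lower() == b.lower()
```

For example, `is_valid_cluster_name("myCluster_01")` is accepted, while `"1cluster"` and `"_cluster"` are rejected because of their lead character.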

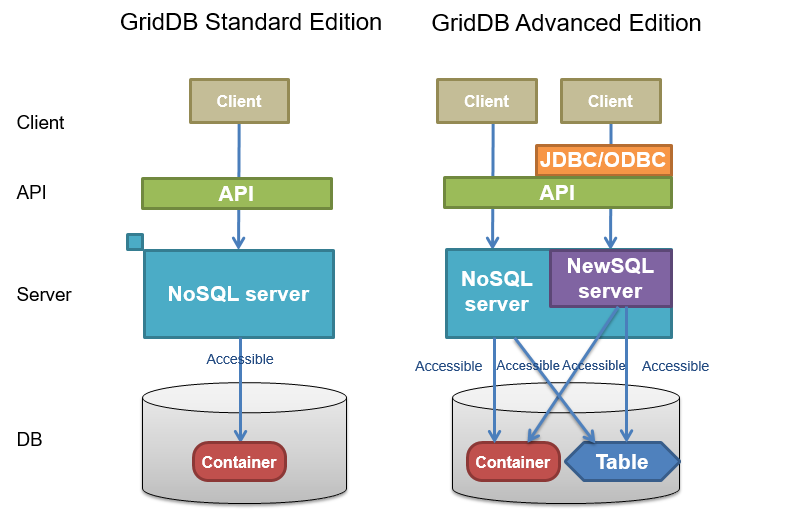

1.3 Access to container (table)

GridDB has a NoSQL interface (NoSQL I/F) and a NewSQL interface (NewSQL I/F). GridDB manages data in a data structure that is called a container when operated with the NoSQL I/F, and a table when operated with the NewSQL I/F. The GridDB Standard Edition supports the NoSQL interface, while the GridDB Advanced Edition supports both the NoSQL and NewSQL interfaces.

- Containers created by NoSQL I/F can be operated as tables by NewSQL I/F.

- Tables created by NewSQL I/F can be operated as containers by NoSQL I/F.

Access range of container and table

1.4 Definition file

The 2 types of definition file used in GridDB are as follows.

- Cluster definition file

- Node definition file

A cluster definition file contains settings that are common to the entire cluster.

A node definition file contains settings that differ for each node.

These definition file samples are installed at the following locations.

    /usr/griddb/                     # installation directory
        conf/                        # sample definition file directory
            gs_cluster.json.tmpl     # cluster definition file sample
            gs_node.json.tmpl        # node definition file sample

In a new installation, the same files are also placed in the conf directory under the GridDB home directory. During operations, edit these definition files.

    /var/lib/gridstore/              # GridDB home directory
        conf/                        # definition file storage directory
            gs_cluster.json          # (edited) cluster definition file
            gs_node.json             # (edited) node definition file

Configure the definition file to match the applicable system environment.

[Memo]

- When the GridDB version is upgraded, new parameters may be added to the definition file. Compare the definition file in use with the newly installed sample and apply the changes as appropriate.
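One way to find parameters that an upgrade added is to compare the keys of the file in use with those of the newly installed sample. The Python sketch below is a hypothetical helper; it assumes that the .tmpl samples are plain JSON, and it reports missing keys only without comparing values or the contents of arrays.

```python
import json


def missing_keys(sample: dict, current: dict, prefix: str = "") -> list:
    """Return slash-separated paths present in the sample but not in the current file."""
    missing = []
    for key, value in sample.items():
        path = f"{prefix}/{key}"
        if key not in current:
            missing.append(path)
        elif isinstance(value, dict) and isinstance(current[key], dict):
            # Recurse into nested sections such as /cluster or /transaction.
            missing.extend(missing_keys(value, current[key], path))
    return missing


def new_parameters(sample_path: str, current_path: str) -> list:
    """Load both definition files (assumed to be JSON) and compare their keys."""
    with open(sample_path) as f:
        sample = json.load(f)
    with open(current_path) as f:
        current = json.load(f)
    return missing_keys(sample, current)
```

For example, `new_parameters("/usr/griddb/conf/gs_node.json.tmpl", "/var/lib/gridstore/conf/gs_node.json")` lists the node parameters that exist only in the new sample.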

2 Service

2.1 Overview

The GridDB service is executed automatically during OS start-up to start a GridDB node (hereinafter, node) or GridDB cluster (hereinafter, cluster). The node or cluster is stopped when the OS is shut down.

The following can be carried out by the service.

- Start, stop and restart a node (cluster)

- Check the process status of a node (cluster)

2.2 Terms

The terms used are defined below.

| Term | Meaning |
|---|---|
| Service script | Script file that is executed automatically during OS startup. It is installed by the GridDB server package in /etc/init.d/gridstore and registered in the system as the GridDB service. |
| PID file | File containing only the process ID (PID) of the gsserver process. It is created in $GS_HOME/conf/gridstore.pid when the gsserver process is started. |
| Start configuration file | File containing the parameters that can be set for the service. It is installed by the GridDB server package in /etc/sysconfig/gridstore/gridstore.conf. |

2.3 Preparing to use the service

The procedure to install and use the GridDB service is as follows.

- Install GridDB server package and client package.

- Configure the respective GridDB nodes that constitute a GridDB cluster.

- Configure the start configuration file.

See the “GridDB Quick Start Guide” (GridDB_QuickStartGuide.html) for the procedure to install GridDB and configure a GridDB node.

2.4 Service specifications

2.4.1 Parameters

The GridDB service operations are controlled by the parameters listed below. By default, the node and cluster are configured to start when the OS starts and to stop when the OS stops.

| Parameters | Default | Description |

|---|---|---|

| GS_USER | admin | GridDB user name |

| GS_PASSWORD | admin | GS_USER password |

| CLUSTER_NAME | INPUT_YOUR_CLUSTER_NAME_HERE | Cluster name to join |

| MIN_NODE_NUM | 1 | Number of nodes constituting a cluster |

To change the parameters, edit the start configuration file ( /etc/sysconfig/gridstore/gridstore.conf ).

When the server package is updated or uninstalled, the start configuration file is neither overwritten nor deleted.

[Points to note]

- Do not edit the parameters in the service script directly. Changes to the service script are lost when the server package is uninstalled or updated. To change the parameters, edit the start configuration file.

- When composing a cluster with multiple nodes, use the same parameter file on every node that joins the cluster. In particular, if the cluster is expanded during system operation with an operating command, the command interpreter, or similar, change the MIN_NODE_NUM parameter on all nodes to the number of nodes constituting the cluster after the expansion.
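A quick consistency check across nodes can catch a forgotten MIN_NODE_NUM update after an expansion. This Python sketch is hypothetical; it assumes that the start configuration file consists of shell-style KEY=VALUE lines and that the file contents have already been collected from each node by some other means. A missing MIN_NODE_NUM is treated as the default value 1 from the parameter table.

```python
def parse_conf(text: str) -> dict:
    """Parse KEY=VALUE lines (assumed format); blank lines and '#' comments are skipped."""
    params = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Strip optional surrounding quotes, as in shell assignments.
        params[key.strip()] = value.strip().strip('"').strip("'")
    return params


def nodes_with_wrong_min_node_num(confs: dict, expected: int) -> list:
    """confs maps a node name to the text of its start configuration file."""
    wrong = []
    for node, text in sorted(confs.items()):
        value = parse_conf(text).get("MIN_NODE_NUM", "1")  # service default is 1
        if int(value) != expected:
            wrong.append(node)
    return wrong
```

After expanding a cluster to 3 nodes, `nodes_with_wrong_min_node_num(confs, 3)` lists the nodes whose file still needs to be changed.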

2.4.2 Log

See the boot log (/var/log/boot.log) and the operating command log ($GS_HOME/log) for details of the service log.

2.5 Functions

GridDB service functions are shown below.

[Points to note]

- Functions other than status can be executed by the root user only.

2.5.1 start

Action:

- Start the node and join it to the cluster.

# service gridstore start

- This function executes the gs_startnode command to start the node and the gs_joincluster command to join it to the cluster.

- When the gs_startnode command is executed, the system waits for the recovery process to end.

- When the gs_joincluster command is executed, the system does not wait for the cluster to start operating.

- Set the cluster name in CLUSTER_NAME.

- Set the number of nodes constituting the cluster in MIN_NODE_NUM.

[Points to note]

- If an error occurs in the middle of a cluster operation, the gsserver process will be stopped.

2.5.2 stop

Action:

- Leave the cluster and stop the node.

# service gridstore stop

- The stop ends when no processes remain; if the timeout time passes first, it ends with an error (termination code 150).

- If there are no processes started by the service, termination code 0 is returned.

- This function executes the gs_leavecluster command to detach the node from the cluster before stopping the node.

- When the gs_leavecluster command is executed, the system waits for the node to leave the cluster.

- A node stopping process will be performed regardless of the termination code of the gs_leavecluster command.
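The stop behaviour above (wait until no gsserver process remains, and fail with code 150 on timeout) can be illustrated with the PID file. This Python sketch is hypothetical and is not the service script; the timeout value and polling interval are assumptions.

```python
import os
import time

STOP_OK = 0         # no processes remain (or none were started by the service)
STOP_TIMEOUT = 150  # the timeout time passed before the process ended


def process_alive(pid: int) -> bool:
    """Return True if a process with this PID exists."""
    try:
        os.kill(pid, 0)  # signal 0 performs an existence/permission check only
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def wait_for_stop(pid_file: str, timeout: float, interval: float = 1.0) -> int:
    """Poll the gsserver PID until it exits; return 0 or 150."""
    if not os.path.exists(pid_file):
        return STOP_OK
    with open(pid_file) as f:
        pid = int(f.read().strip())
    deadline = time.monotonic() + timeout
    while process_alive(pid):
        if time.monotonic() >= deadline:
            return STOP_TIMEOUT
        time.sleep(interval)
    return STOP_OK
```

In this sketch the PID file path would be $GS_HOME/conf/gridstore.pid, as described in the Terms section.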

[Points to note]

- When stopping the cluster, first execute the gs_stopcluster command, and then leave/stop each node with a service stop. If the cluster is not stopped with the gs_stopcluster command, autonomous data arrangement may occur as nodes leave, and frequent data relocation can place a load on network or disk I/O. Data arrangement does not occur if nodes leave after the cluster has been stopped. To prevent unnecessary data arrangement, use the gs_stopcluster command when stopping the cluster.

- A node started by an operating command or command interpreter (gs_sh) cannot be stopped by a service stop. Use the respective tools to stop the node.
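The recommended order can be written down as a plan. In this hypothetical Python sketch, the `-u user/password` form of gs_stopcluster and running it from the first node are assumptions; check the operating command reference before use. The plan is only built here, not executed.

```python
def shutdown_plan(nodes: list, user: str, password: str) -> list:
    """Return (host, command) pairs in the order recommended above."""
    if not nodes:
        return []
    # 1. Stop the cluster once, so that nodes leaving afterwards cause no data arrangement.
    #    The "-u user/password" argument form is an assumption.
    plan = [(nodes[0], f"gs_stopcluster -u {user}/{password}")]
    # 2. Then leave/stop each node with a service stop (run as root).
    plan += [(host, "service gridstore stop") for host in nodes]
    return plan
```

Building the plan first makes it easy to review the order before running each command on its host.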

2.5.3 status

Action:

- Display whether the node process is running.

# service gridstore status

2.5.4 restart

Action:

- Stop the node and then start it again.

2.5.5 condrestart

Action:

- Restart if there is a lock file.

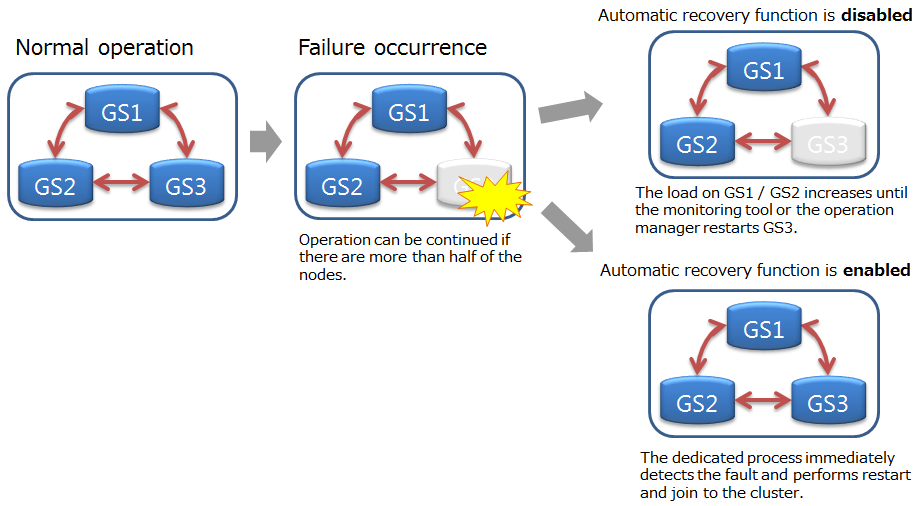

2.6 Automatic recovery function

2.6.1 Overview

If a GridDB node terminates abnormally or the node process is forcibly terminated, the service automatically restarts the node and joins it to the cluster. The operations manager therefore does not need to take action to restore the cluster to normal operation.

Automatic recovery function

[Points to note]

Automatic restart is not performed in the following cases:

- When the user has explicitly disabled it.

- When an unrecoverable failure has occurred (node status: ABNORMAL).

- When automatic restart has been attempted more than 5 times.

- When the node was not joined to the cluster before the failure.
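The exclusion rules above can be expressed as a single decision function. This Python sketch is hypothetical; it reads "more than 5 times" as allowing at most 5 attempts, and `enabled` corresponds to the SVC_ENABLE_AUTO_RESTART parameter described in the next section.

```python
MAX_RESTART_ATTEMPTS = 5  # interpretation: at most 5 automatic restart attempts


def should_auto_restart(enabled: bool, node_status: str,
                        attempts_so_far: int, was_joined: bool) -> bool:
    """Return True if an automatic restart would be attempted."""
    if not enabled:                  # explicitly turned off by the user
        return False
    if node_status == "ABNORMAL":    # unrecoverable failure
        return False
    if attempts_so_far >= MAX_RESTART_ATTEMPTS:
        return False
    return was_joined                # only nodes joined to a cluster before the failure
```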

2.6.2 Parameters

The parameters of the automatic recovery function are as follows.

| Parameter | Default | Value |

|---|---|---|

| SVC_ENABLE_AUTO_RESTART | true | true(Enabled)/false(Disabled) |

| GS_USER | admin | Set as appropriate |

| GS_PASSWORD | admin | Set as appropriate |

To change the parameters, edit the start configuration file ( /etc/sysconfig/gridstore/gridstore.conf ).

SVC_ENABLE_AUTO_RESTART

- Set whether to enable or disable this function.

- A change to this parameter takes effect when the node is restarted.

- Set it to false if you want to control GridDB fault recovery with another monitoring system.

GS_USER/GS_PASSWORD

- Set the GridDB administrator user name and password.

- These parameters are used in the following cases:
  - a. When starting, stopping, or restarting with the service
  - b. When the -u option is not specified for the gs_startnode command

[Points to note]

- If the specified GS_USER / GS_PASSWORD is invalid, or if these are not specified, the GridDB node will fail to start up.
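Case b means that an explicit -u value takes precedence over the start configuration file. This hypothetical Python sketch assumes that the -u value has the form user/password; it checks only that credentials are present, since whether they are valid is decided by the node.

```python
def resolve_credentials(u_option, conf: dict) -> tuple:
    """Return (user, password) for starting a node.

    u_option is the value of -u (assumed form "user/password"), or None when
    -u is not specified; conf holds the start configuration file parameters.
    """
    if u_option is not None:
        user, _, password = u_option.partition("/")
    else:
        # Case b: fall back to GS_USER / GS_PASSWORD.
        user = conf.get("GS_USER", "")
        password = conf.get("GS_PASSWORD", "")
    if not user or not password:
        # Missing credentials: the node would fail to start.
        raise ValueError("user name and password must both be specified")
    return user, password
```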

2.7 Error message list

Service error messages are as shown below.

| Code | Message | Meaning |

|---|---|---|

| F00003 | Json load error | Reading of definition file failed. |

| F01001 | Stop service timed out | Stop node process timed out. |

| F01002 | Startnode error | An error occurred in the node startup process. |

| F01003 | Startnode timed out | Start node process timed out. |

| F01004 | Joincluster error | An error occurred in the join cluster process. |

| F01005 | Joincluster timed out | Join cluster process timed out. |

| F01006 | Leavecluster error | An error occurred in the leave cluster process. |

| F02001 | Command execution error | An error occurred in the command execution. |

| F02002 | Command execution timed out | Command execution timed out. |

[Memo]

- If an error occurs while a command is being executed, the operating command error is displayed and recorded at the same time. When troubleshooting errors, also refer to the items on the operating commands (gs_startnode, gs_joincluster, and gs_leavecluster).

3 Integrated operation control GUI (gs_admin)

3.1 Overview

The integrated operation control GUI (hereinafter described as gs_admin) is a Web application that integrates GridDB cluster operation functions.

The following operations can be carried out using gs_admin.

- Management and operation of cluster configuration
  - Consolidate cluster data and node data in the node repository
  - Edit the node repository
  - Operate the cluster configuration

- Compare cluster and node data
  - Display a dashboard showing performance data and log analysis data
  - Cluster status and configuration data
  - Node version and data (checkpoint) written to a data disk
  - Container data arranged in a node
  - View the event log of a node and dynamically change the event log output level for error analysis
  - Execute SQL commands on a cluster and display the results

- View node performance data
  - Display node performance data in a graph
  - Store the performance data of a node at a specific point in time and compare it with the current performance data

- Database-related functions in a cluster
  - Display a list of databases
  - Display databases and containers in a tree
  - Create a database in a cluster and delete a database from a cluster
  - Grant and revoke database access rights for general users
  - Execute SQL commands on a database and display the results (GridDB Advanced Edition only)

- General user-related functions in a cluster
  - Create a general user in a cluster and delete a general user from a cluster
  - Change the password of a general user

- Container-related functions in a database
  - Create a container in a database and delete a container from a database
  - Search for containers and save the structure of the search tree
  - Display container data and set indexes and triggers in a container
  - Execute TQL (query language) commands on a container and display the results

3.1.1 gs_admin configuration

gs_admin must be installed either on a machine on which the nodes constituting the cluster are running, or on a machine on a network with the same subnet and multicast distribution as those nodes.

3.2 Setting up gs_admin

gs_admin is a Web application that runs on Tomcat.

To use gs_admin, Tomcat and Java have to be installed beforehand. The compatible versions are as follows.

- Apache Tomcat 7.0/8.0

- Oracle Java 7/8

In addition, the GridDB versions compatible with gs_admin Ver.4.1 are as follows.

- GridDB Standard Edition Ver.4.1

- GridDB Advanced Edition Ver.4.1

The procedure to use gs_admin is as follows.

- Configure the respective GridDB nodes that constitute a GridDB cluster.

- Install and configure gs_admin.

- Access the gs_admin application URI with a browser, and log in as a gs_admin user.

See the “GridDB Quick Start Guide” (GridDB_QuickStartGuide.html) for the procedure to configure a GridDB node.

The procedure to install and configure gs_admin is as follows.

- Installation of GridDB client package

- Deploy gs_admin.war in Tomcat

- gs_admin user settings

- gs_admin.properties file settings

- Node repository settings

- adminHome rights settings

3.2.1 Installation of client package

Install the GridDB client package (griddb-xx-client-X.X.X-linux.x86_64.rpm).

Log in as the root user to the machine on which the Web application is to be installed, and install the package with the command below.

# rpm -Uvh griddb-xx-client-X.X.X-linux.x86_64.rpm

(*) xx indicates the GridDB edition. (se, ae, ve)

(*) X.X.X indicates the GridDB version.

When the client package is installed, a directory named admin is created in the GridDB home directory (/var/lib/gridstore/admin). This directory is hereinafter referred to as adminHome.

gs_admin configuration data and the data used by gs_admin are installed in adminHome. Because some gs_admin functions operate on files in adminHome, appropriate rights need to be set. Rights settings are described later.

The configuration under adminHome is as follows.

    capture/                                          # snapshot storage directory (*)
        [Node address]_[port no.]/YYYYMMDDHHMMSS.json # snapshot file (*)
    conf/                                             # configuration file directory
        gs_admin.properties                           # static parameter file to be configured initially
        gs_admin.settings                             # dynamic parameter file to configure display-related settings
        password                                      # gs_admin user definition file
        repository.json                               # node repository file
    log/                                              # log file directory of gs_admin (*)
        gs_admin-YYYYMMDD.log                         # log file (*)
    tree/                                             # structural file directory of container tree (*)
        foldertree-[cluster name]-[user name].json    # folder tree file (*)

Files and directories marked with a (*) are created automatically by gs_admin.
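The file names under adminHome follow fixed patterns. The Python helpers below are hypothetical and only build paths from those patterns, assuming the default adminHome and that [Node address] is written as given (for example, an IPv4 address).

```python
from datetime import datetime
from pathlib import PurePosixPath

ADMIN_HOME = PurePosixPath("/var/lib/gridstore/admin")  # default adminHome


def snapshot_path(address: str, port: int, taken_at: datetime) -> PurePosixPath:
    # capture/[Node address]_[port no.]/YYYYMMDDHHMMSS.json
    return ADMIN_HOME / "capture" / f"{address}_{port}" / taken_at.strftime("%Y%m%d%H%M%S.json")


def log_path(day: datetime) -> PurePosixPath:
    # log/gs_admin-YYYYMMDD.log
    return ADMIN_HOME / "log" / day.strftime("gs_admin-%Y%m%d.log")


def folder_tree_path(cluster: str, user: str) -> PurePosixPath:
    # tree/foldertree-[cluster name]-[user name].json
    return ADMIN_HOME / "tree" / f"foldertree-{cluster}-{user}.json"
```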

[Points to note]

- Files and directories under adminHome will not be deleted even if the client package is uninstalled. Manually delete the files if they are not required.

3.2.2 Deployment in Tomcat

gs_admin is a Web application that runs on Tomcat. To use gs_admin, deploy the gs_admin war file in Tomcat. Tomcat configuration is not covered in this section.

The deployment procedure is as follows.

Deploy the war file included in the GridDB client package (griddb-xx-client-X.X.X-linux.x86_64.rpm) in Tomcat.

When the client package is installed, the war file is installed under the following directory.

- /usr/griddb/web/gs_admin.war

Copy gs_admin.war to the webapps directory under the Tomcat installation directory.

$ cp /usr/griddb/web/gs_admin.war [Tomcat installation directory]/webapps

3.2.3 gs_admin user settings

To use gs_admin, you must authenticate as a gs_admin user.

Administrator users of GridDB clusters under management need to be set up as gs_admin users.

The gs_admin user definition file is found in /var/lib/gridstore/admin/conf/password .

This file will not be created when a client package is installed.

The simplest approach is to copy the user definition file of a node in the cluster you want to manage (/var/lib/gridstore/conf/password) to the gs_admin user definition file (/var/lib/gridstore/admin/conf/password). In this case, all administrator users listed in the copied user definition file become gs_admin users.

[Memo]

- See User management for details about user management in GridDB.

- See Managing multiple clusters if you want to manage multiple clusters in gs_admin.

3.2.4 gs_admin.properties file settings

The configuration file is /var/lib/gridstore/admin/conf/gs_admin.properties. Configure it as the gsadm user to match the GridDB cluster configuration.

Reload the Web application if the property file has been overwritten.

gs_admin.properties contains the following settings.

| Parameters | Default | Description |
|---|---|---|
| adminUser | admin | Set the gs_admin administrator user. Multiple user names can be set by separating them with commas. A gs_admin administrator user can use the following functions: the cluster operation function and the repository management function. |
| ospassword | - | Set the password of the node gsadm user (OS user). When the password is set, the following functions can be used: the node start operation (Start) in the cluster operation functions, and the OS data display screen. |

[Memo]

- When GridDB is installed, gsadm is registered as the OS user for using GridDB. Because no password is set for the gsadm user, set one in advance if you want to set ospassword in gs_admin.
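The adminUser value is a comma-separated list, and ospassword enables two functions. The Python sketch below is hypothetical; it reads only simple key=value lines and ignores the escapes, ":" separators and line continuations that the full Java properties format allows.

```python
def parse_properties(text: str) -> dict:
    """Minimal key=value reader for gs_admin.properties (simplified format)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":  # skip blank lines and comments
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def admin_users(props: dict) -> list:
    # adminUser holds comma-separated user names; "admin" is the default.
    value = props.get("adminUser", "admin")
    return [name.strip() for name in value.split(",") if name.strip()]


def os_password_features_enabled(props: dict) -> bool:
    # Node Start and the OS data display screen require ospassword to be set.
    return bool(props.get("ospassword"))
```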

3.2.5 Node repository settings

The node repository file (/var/lib/gridstore/admin/conf/repository.json) centrally manages cluster configuration data and node data. It is used to specify the clusters under management and by the cluster operation functions. Configure it as the gsadm user to match the GridDB cluster configuration.

The default file contents are as follows.

    {
        "header" : {
            "lastModified" : "",
            "version" : "2.7.0"
        },
        "clusters" : [
            {
                "name" : "INPUT_YOUR_CLUSTER_NAME_HERE",
                "address" : "239.0.0.1",
                "port" : 31999,
                "jdbcAddress" : "239.0.0.1",
                "jdbcPort" : 41999
            }
        ],
        "nodes" : [
            {
                "address" : "192.168.1.10",
                "port" : 10040,
                "sshPort" : 22,
                "clusterName" : "INPUT_YOUR_CLUSTER_NAME_HERE"
            }
        ]
    }
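The repository links nodes to clusters through clusterName. This hypothetical Python sketch loads repository.json and groups the nodes of each cluster as address:port strings, using only the keys shown in the default file.

```python
import json
from collections import defaultdict

REPOSITORY = "/var/lib/gridstore/admin/conf/repository.json"


def load_repository(path: str = REPOSITORY) -> dict:
    with open(path) as f:
        return json.load(f)


def nodes_by_cluster(repository: dict) -> dict:
    """Map each clusterName to the "address:port" strings of its repository nodes."""
    grouped = defaultdict(list)
    for node in repository.get("nodes", []):
        grouped[node["clusterName"]].append(f'{node["address"]}:{node["port"]}')
    return dict(grouped)
```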

To configure a node repository, either edit the file directly or use the repository management screen. When configuring using the repository management screen, see the functions on the repository management screen and Starting management of a cluster in operation with gs_admin (recommended).

Use of the operation control command or command interpreter (gs_sh) is recommended when performing cluster configuration for the first time.

3.2.6 adminHome rights setting

gs_admin automatically creates files and directories under adminHome, so the Tomcat execution user needs read and write rights to adminHome. Before using gs_admin, change the owner of the files and directories under adminHome to the Tomcat execution user (tomcat by default).

Change the owner as a root user.

# chown -R tomcat:tomcat /var/lib/gridstore/admin

[Memo]

- The default adminHome directory is /var/lib/gridstore/admin, but it can be changed in the Web application settings. To change it, edit the value of adminHome in /webapps/gs_admin/WEB-INF/classes/conf/gs_adminPath.properties under the Tomcat home (/usr/local/tomcat by default).

[Points to note]

- When gs_admin is upgraded, gs_adminPath.properties is recreated as the war file is redeployed. If you changed the value in gs_adminPath.properties for operation, set it again after the upgrade.

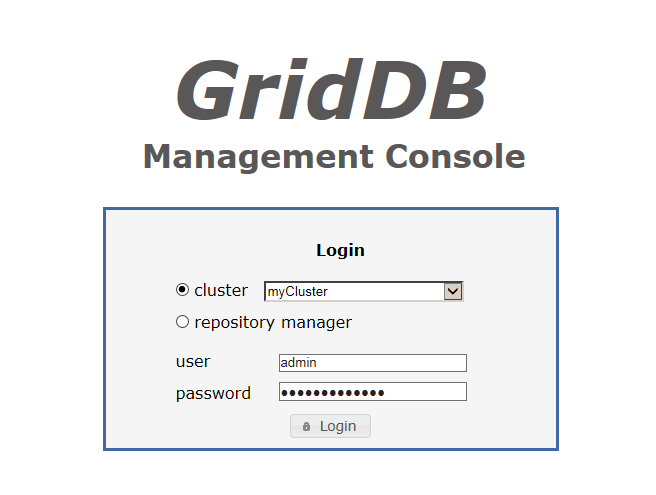

3.3 Login and login destination screen

3.3.1 Login screen

Access the application URI below to access gs_admin.

http://[Tomcat operating machine address]:8080/gs_admin

The login screen appears when you access the gs_admin application URI.

Login screen

On the login screen, you can choose between 2 destinations: a cluster or the repository manager. If you choose a cluster, select the cluster you want to manage from the drop-down list; after login, the integrated operation control screen is displayed.

If you choose the repository manager, the repository management screen is displayed after login.

When logging in, enter the user name and password of the gs_admin user in the user and password box respectively, and click the Login button.

[Memo]

- The port number of the Tomcat operating machine depends on the environment. The default port is 8080.

- It is possible to log into the integrated operation control screen even if the node has not been started.

3.3.2 Integrated operation control screen

The integrated operation control screen is shown below.

Integrated operation control screen

The integrated operation control screen is made up of the following elements.

| Element | Abbreviation | Location | Function |

|---|---|---|---|

| Tree view | Tree | Left | Display, select a list of operating targets |

| Data display and input section | View | Right | Display and input of data for the operating target |

| Menu area | - | Top | Log out |

| Message area | - | Bottom | - |

Tree function

In Tree, a cluster or container can be selected as the main operation target by switching tabs at the top.

| Tab | Tree name | Main functions |

|---|---|---|

| ClusterTree | Cluster tree | Display a list of the clusters and nodes, select the operating targets |

| ContainerTree | Container tree | Display a list of the databases, search for containers, select operating targets |

View function

In View, the tab displayed at the top of View differs for each operating target selected in Tree. The function can be switched by selecting the tab at the top.

See the items of each tree and screen for details.

3.3.3 Repository management screen

This function can be used by a gs_admin administrator user only.

Select repository manager in the login screen and login as a gs_admin administrator user to arrive at the repository management screen.

The repository management screen is shown below.

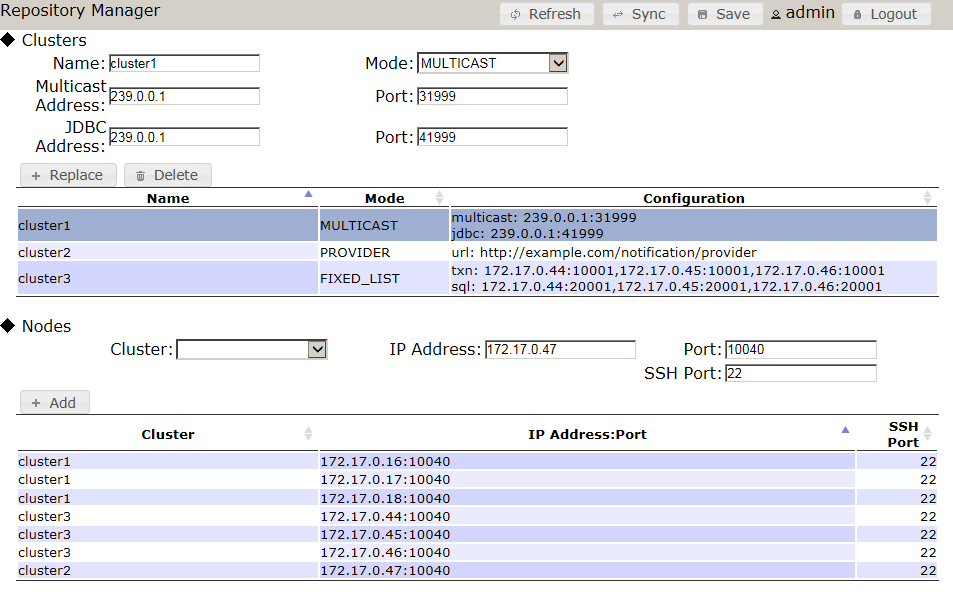

Repository management screen

The following functions are available in the repository management screen.

- Displaying repository data
  - The contents of the node repository file (/var/lib/gridstore/admin/conf/repository.json) are displayed in 2 sections: the top half of the screen shows the cluster data, and the bottom half shows the node data.

- Editing repository data
  - Changes to the repository data are not applied until they are saved.
  - To add a cluster or node to the repository, enter the cluster or node data in the input boxes and click the Add button.
    - A cluster cannot be added or replaced if its cluster name duplicates an existing one.
    - A node cannot be added or replaced if its combination of IP address and port duplicates an existing one.
  - Replace and Delete are performed on a row selected in the table.
    - When a row is selected, its contents are copied to the input boxes.
    - Multiple rows cannot be selected.
  - Besides the cluster name, adding or replacing a cluster requires the following data, depending on the cluster connection method.
    - "MULTICAST": multicast address and multicast port
    - "FIXED_LIST": connection destination list of the fixed list method
    - "PROVIDER": provider URL of the provider method
  - The IP address and port are required to add or replace a node.
  - When a cluster is deleted while nodes are still registered to it, a dialog appears asking you to confirm deleting the cluster from these nodes. Select Yes to delete.

- Cluster synchronization
  - The data of a running cluster can be acquired and registered in the repository.
  - Click the Sync button to display a dialog for entering an IP address and port. Enter the IP address and port of any node constituting the cluster, select Sync, and click Yes in the confirmation dialog. The display on the repository management screen is overwritten and updated.
    - The specified node does not need to be the master node.
    - Specify /system/serviceAddress of the node definition file (gs_node.json) as the IP address.
    - Specify /system/servicePort of the node definition file (gs_node.json) as the port.
    - The SSH port is registered as 22 by default.

- Updating repository data
  - Click the Refresh button to reload the node repository file.
  - Unsaved contents are discarded.

- Saving repository data
  - Click the Save button to save the contents displayed on the screen.
  - The node repository file is not changed until the data is saved.
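The duplicate rules above can also be checked against a repository file that was edited directly. This Python sketch is hypothetical; it covers only the cluster-name and IP address/port rules, plus the requirement that a node refer to a registered cluster, because the repository keys for the FIXED_LIST and PROVIDER settings are not shown in this manual.

```python
def repository_problems(repository: dict) -> list:
    """Return human-readable violations of the repository editing rules."""
    problems = []
    seen_clusters = set()
    for cluster in repository.get("clusters", []):
        name = cluster.get("name")
        if name in seen_clusters:
            problems.append(f"duplicate cluster name: {name}")
        seen_clusters.add(name)

    seen_nodes = set()
    for node in repository.get("nodes", []):
        address, port = node.get("address"), node.get("port")
        if not address or port is None:
            problems.append(f"node without IP address/port: {node}")
            continue
        if (address, port) in seen_nodes:
            problems.append(f"duplicate node: {address}:{port}")
        seen_nodes.add((address, port))
        if node.get("clusterName") not in seen_clusters:
            problems.append(f"node {address}:{port} refers to an unregistered cluster")
    return problems
```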

The input fields are specified as follows.

Cluster

-

Cluster name (Name)

-

Specify

/cluster/clusterNameof the cluster definition file (gs_cluster.json).

-

Specify

-

Cluster connection method (Notification Mode)

-

Select one of the following from the drop-down box

- Multicast method: MULTICAST

- Fixed list method: FIXED_LIST

- Provider method: PROVIDER

-

Select one of the following from the drop-down box

-

Multicast address (Multicast Address)

-

Specify

/transaction/notificationAddressof the cluster definition file (gs_cluster.json). - Must be specified when using the multicast method.

-

Specify

-

Multicast port (Multicast Port)

-

Specify

/transaction/notificationPortof the cluster definition file (gs_cluster.json). - Must be specified when using the multicast method.

-

Specify

-

JDBC address (JDBC Address)

-

Specify

/sql/notificationAddressof the cluster definition file (gs_cluster.json). - Specify when using the multicast method. (optional)

- This is necessary when using the SQL screen in the GridDB Advanced Edition.

-

Specify

-

JDBC port (JDBC Port)

-

Specify

/sql/notificationPortof the cluster definition file (gs_cluster.json). - Specify when using the multicast method. (optional)

- This is necessary when using the SQL screen in the GridDB Advanced Edition.

-

Specify

-

Connection destination list of fixed list method (Transaction Member)

-

Combine the

/cluster/notificationMember/transaction/addressand/cluster/notificationMember/transaction/portin the cluster definition file (gs_cluster.json) with a “:” and specify the value of each node by separating them with a comma. - Example: 192.168.10.1:10001,192.168.10.2:10001,192.168.10.3:10001

-

Combine the

-

Connection destination list of fixed list method (SQL Member)

-

Combine the

/cluster/notificationMember/sql/addressand/cluster/notificationMember/sql/portin the cluster definition file (gs_cluster.json) with a “:” and specify the value of each node by separating them with a comma. - Example: 192.168.10.1:20001,192.168.10.2:20001,192.168.10.3:20001

-

Combine the

-

Provider URL of provider method (Provider URL)

-

Specify

/cluster/notificationProvider/urlof the cluster definition file (gs_cluster.json). - This is necessary when using the provider method.

-

Specify
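The Transaction Member and SQL Member values combine one "address:port" pair per node. As a sketch, assuming notificationMember is an array with one object per node holding "transaction" and "sql" entries (the paths above omit the array index), the strings could be built from gs_cluster.json like this. The fragment and the helper `member_list` are hypothetical.

```python
import json


def member_list(gs_cluster_json_text, service):
    """Build the Transaction Member (service="transaction") or SQL Member
    (service="sql") value: "address:port" per node, joined with ","."""
    doc = json.loads(gs_cluster_json_text)
    members = doc["cluster"]["notificationMember"]  # one entry per node (assumed)
    return ",".join(
        f'{m[service]["address"]}:{m[service]["port"]}' for m in members
    )


# Hypothetical gs_cluster.json fragment for a three-node fixed list cluster.
sample = json.dumps({
    "cluster": {
        "clusterName": "myCluster",
        "notificationMember": [
            {"transaction": {"address": ip, "port": 10001},
             "sql": {"address": ip, "port": 20001}}
            for ip in ("192.168.10.1", "192.168.10.2", "192.168.10.3")
        ],
    }
})

print(member_list(sample, "transaction"))
# 192.168.10.1:10001,192.168.10.2:10001,192.168.10.3:10001
print(member_list(sample, "sql"))
# 192.168.10.1:20001,192.168.10.2:20001,192.168.10.3:20001
```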

Node

Cluster

- Select a registered cluster in the selection box.

IP Address

- Specify /system/serviceAddress of the node definition file (gs_node.json).

Port

- Specify /system/servicePort of the node definition file (gs_node.json).

SSH Port

- Specify the SSH port of the machine on which the node operates. The default port is 22.

3.4 Cluster tree-related functions

3.4.1 Cluster tree

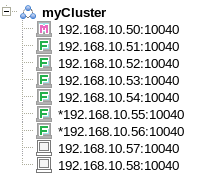

Summary

In a cluster tree, the nodes constituting the cluster under management, i.e. the repository nodes whose clusterName is the cluster under management, are displayed in a tree format.

Cluster tree

An asterisk (*) appears at the beginning of a node that has not been registered in the repository.

A description of the icons shown in a cluster tree is given below.

| Icon | Description |
|---|---|
| | Cluster |
| | Master node |
| | Follower node |
| | Started node |
| | Stopped node |
| | Status unconfirmed node |
| | Message |

Context menu

When an element of the tree (cluster or node) is right-clicked, a context menu for that element appears. Select an item from the menu to update data or operate on the element.

The menus and functions for the respective selected elements are as follows.

| Selected element | Menu | Function |
|---|---|---|
| Cluster | refresh | Get the list of nodes in the tree again |
| Node | refresh | Display the latest node information in the View |

Operating target and view tab

When an element of the tree (cluster or node) is left-clicked, the functions for that element appear in the View. Switch between functions with the tabs at the top of the View.

| Selected element | Tab | Screen name | Function |
|---|---|---|---|
| Cluster | Dashboard | Dashboard screen | Display a variety of data on the entire cluster. |
| | Status | Cluster status screen | Display configuration data and information of the cluster under management. |
| | Monitor | OS data display screen | Display OS data of the machines on which nodes operate. |
| | Configuration | Cluster operation screen | Carry out cluster operations such as starting and stopping nodes. |
| Node | System | System data screen | Display system data of the node. |
| | Container | Container list screen | Display a list of containers stored in the node. |
| | Performance | Performance data screen | Display performance data of the node as a graph. |
| | Snapshot | Snapshot screen | Display the measured performance values in a table. The values can be compared with the values measured earlier. |
| | Log | Log screen | Display the event log of the node and set the output level of the event log. |

[Memo]

- If the master node of a cluster changes, re-acquiring the node list may fail. In that case, log out and then log in again.

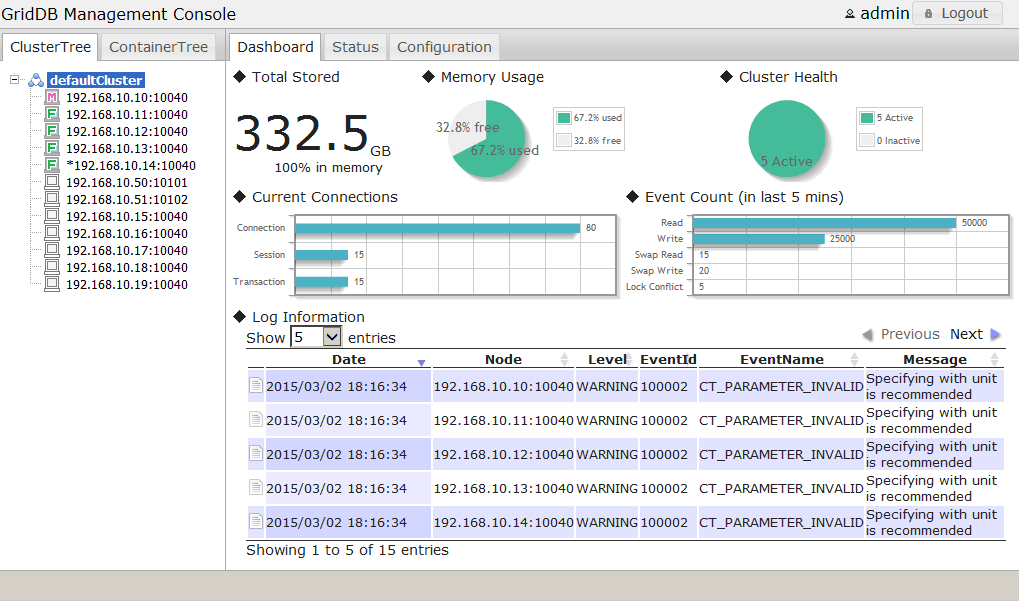

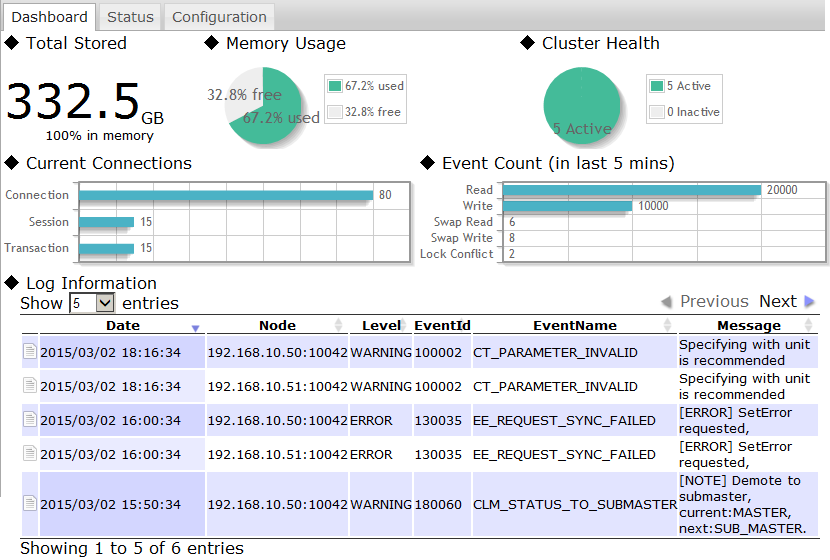

3.4.2 Dashboard screen

Summary

The dashboard screen contains a variety of information related to the entire cluster such as memory usage, cluster health, log information, etc.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Cluster | Dashboard |

Screen

Dashboard screen

Functions

The following functions are available in the dashboard screen.

Total volume of data in the cluster (◆Total Stored)

- Display the total volume of data in the cluster with its unit (KB to TB).

- The percentage of the total data volume held in memory is also displayed.

Total amount of memory used (◆Memory Usage)

- The memory utilization rate is displayed on a pie chart.

Cluster health (◆Cluster Health)

- Display the proportion of operating and non-operating nodes on a pie chart.

Network connections in a cluster (◆Current Connections)

- Display the numbers of current connections, sessions and transactions of the cluster on a bar chart.

Number of cluster events in the past 5 minutes (◆Event Count)

- Display the counts of read commands, write commands, swap reads, swap writes and lock bids that occurred in the cluster in the past 5 minutes.

- *“Past 5 minutes” refers to the 5 minutes preceding the time at which the latest performance data was output to the log.

Log analysis data (◆Log Information)

- Display the WARNING and ERROR logs of each node constituting the cluster.

- Hover the cursor over the icon on the far left to display detailed data of the log.
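The Event Count window is anchored at the time of the latest performance data output, not at the current time. The sketch below illustrates only that windowing rule. The (timestamp, event type) record format, the event-type labels, and the boundary handling (start exclusive, end inclusive) are assumptions, and `count_recent_events` is a hypothetical helper.

```python
from collections import Counter
from datetime import datetime, timedelta


def count_recent_events(events, latest_output, window=timedelta(minutes=5)):
    """Count events by type in the window ending at latest_output.

    events: iterable of (timestamp, event_type) pairs (hypothetical format).
    latest_output: time the latest performance data was written to the log;
    the window ends here rather than at the current wall-clock time.
    """
    start = latest_output - window
    counts = Counter(kind for ts, kind in events if start < ts <= latest_output)
    return dict(counts)


latest = datetime(2024, 1, 1, 12, 0, 0)
events = [
    (latest - timedelta(minutes=6), "read"),  # outside the window
    (latest - timedelta(minutes=4), "read"),
    (latest - timedelta(minutes=1), "write"),
    (latest, "read"),
]
print(count_recent_events(events, latest))  # {'read': 2, 'write': 1}
```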

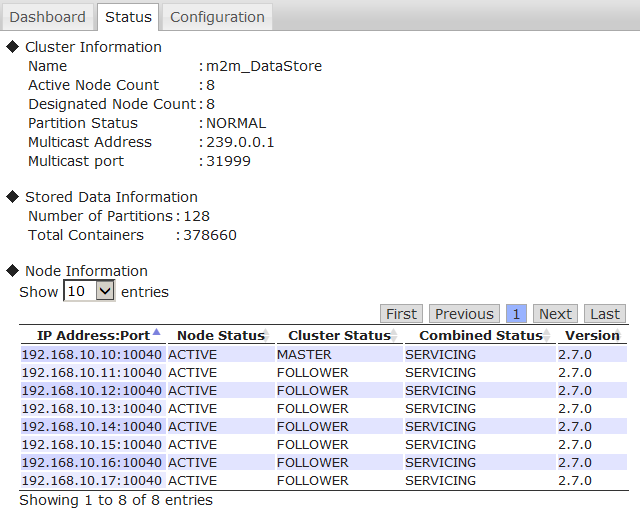

3.4.3 Cluster status screen

Summary

The cluster status screen displays configuration data and information on the current cluster and its nodes, such as the cluster name, active node count, number of partitions and node IP addresses, as shown below.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Cluster | Status |

Screen

Cluster status screen

Functions

The cluster status screen consists of the following components.

Cluster data display (◆Cluster Information)

Display the following data acquired from the master node.

- Cluster name, number of operating nodes, number of nodes constituting the cluster, partition status, multicast address and port

Data-related information display (◆Stored Data Information)

Display the following data acquired from the cluster.

- Number of partitions, total number of containers

Display of data on the nodes constituting the cluster (◆Node Information)

Display the following data of each node.

- IP address and port, node status, cluster status, composite status, node version

- The composite status is the status of a node as displayed on the cluster operation screen.

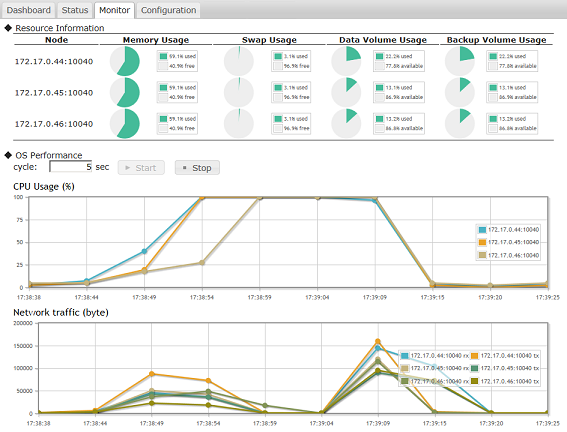

3.4.4 OS data display screen

Summary

The OS data display screen consists of two components for the current cluster: Resource Information and OS Performance. The GridDB performance analysis is displayed in pie charts, and the CPU and network load status in line graphs.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Cluster | Monitor |

Screen

OS data display screen

Functions

The OS data display screen consists of the following components.

Display of node resource information (◆Resource Information)

Display the following data acquired from a node.

- CPU utilization rate

- Memory and swap memory capacity and utilization rate

- Capacity and disk utilization rate of the data directory and backup directory

OS performance data display (◆OS Performance)

Click the Start button to get performance data from the node at the specified interval and draw the following 2 graphs.

- CPU utilization rate

- Network transfer volume

[Memo]

- This function cannot be used if ospassword has not been set in gs_admin.properties.

- This setting is necessary in order to connect to the node execution environment from the gs_admin execution environment as the OS user “gsadm”. See the manual of each OS for details on the SSH connection procedure.

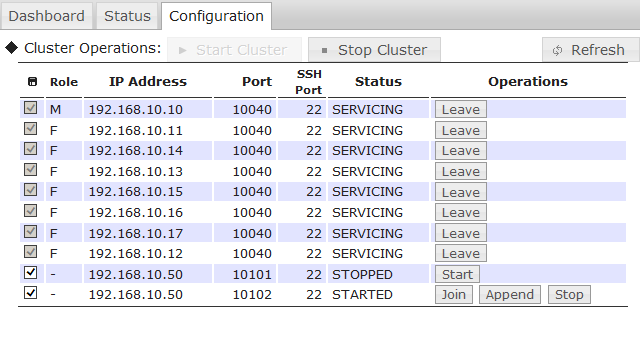

3.4.5 Cluster operation screen

This function can be used by the gs_admin administrator only.

Summary

The cluster operation screen consists of a table listing the nodes, together with functions for starting and stopping the cluster and individual nodes.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Cluster | Configuration |

Screen

Cluster operation screen

Functions

The following functions are available in the cluster operation screen.

Displaying the list of nodes

The cluster operation screen can display the following three types of nodes registered in the repository:

- Nodes that constitute the actual cluster (displayed at the top of the table)

- Nodes that have been allocated to the cluster under management but do not yet constitute the actual cluster

- Nodes that have NOT been allocated to any cluster

Displaying node data

The following data is displayed for each node.

- Cluster registration status (check box)

- Role in the cluster (Role)

- IP address and port, and SSH port

- Node status

- The cluster registration status indicates whether the node is registered in the current cluster.

- There are 3 types of role: master node (M), follower node (F), and unassigned (-).

- Node status appears only for nodes that constitute the actual cluster and for nodes that have been allocated to the cluster under management but do not yet constitute the actual cluster.

Registration and removal of nodes

- *This function edits the contents of the node repository.

- Click the check box in the cluster registration status column to register a node in, or remove it from, the cluster.

- Only nodes that have been registered in a cluster can be operated in this screen.

- The check box is disabled while the cluster is in operation.

Cluster operations components

Start cluster

- Compose a cluster with all the nodes registered in the cluster.

- This command can be executed only when the node status of all the nodes is STARTED.

Stop cluster

- Stop a cluster in operation.

- This command can be executed only when the cluster is in operation.

Node operations components

The executable operation buttons appear in the operations column of each node.

- The buttons displayed differ depending on the node status.

Starting a node (Start)

- Start a node that has been stopped (status STOPPED).

Joining a cluster (Join)

- Add or re-add a node to a cluster.

Leaving a cluster (Leave)

- Remove a node from the active cluster.

Increasing the number of nodes in a cluster (Append)

- Increase the number of nodes of the active cluster.

Stopping a node (Stop)

- Stop a node in operation.

- Cannot be executed while the node is part of an operating cluster. Remove the node from the cluster first, then stop it.
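The preconditions stated above can be summarized as simple predicates. This is a hypothetical sketch, not gs_admin's implementation: the `Node` class, the restriction of status to "STARTED"/"STOPPED", and the function names are assumptions, and Join, Leave and Append are omitted because their preconditions are not stated here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    address: str
    status: str       # "STARTED" or "STOPPED" in this sketch
    in_cluster: bool  # True if the node constitutes the running cluster


def can_start_cluster(nodes):
    """Start cluster: only when every registered node is STARTED."""
    return bool(nodes) and all(n.status == "STARTED" for n in nodes)


def can_stop_cluster(cluster_running):
    """Stop cluster: only while the cluster is in operation."""
    return cluster_running


def can_start_node(node):
    """Start: only for a node whose status is STOPPED."""
    return node.status == "STOPPED"


def can_stop_node(node, cluster_running):
    """Stop: not while the node is part of an operating cluster."""
    return node.status == "STARTED" and not (cluster_running and node.in_cluster)


nodes = [Node("192.168.10.1:10040", "STARTED", False),
         Node("192.168.10.2:10040", "STOPPED", False)]
print(can_start_cluster(nodes))  # False: one node is still STOPPED
```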

[Memo]

- To start a node, the ospassword setting in gs_admin.properties is necessary in order to connect to the node execution environment from the gs_admin execution environment as the OS user “gsadm”. See the manual of each OS for details on the SSH connection procedure.

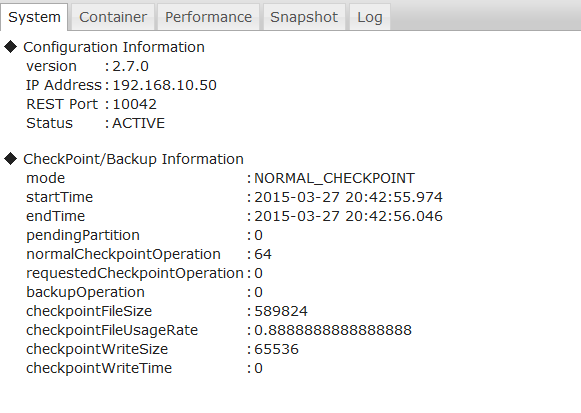

3.4.6 System data screen

Summary

The system data screen consists of configuration information and checkpoint/backup information.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Node | System |

Screen

System data screen

Functions

The following functions are available in the system data screen.

Displaying node data (◆Configuration Information)

Display the following data acquired from a node.

- Node version, IP address and port, node status

Displaying checkpoint and backup data (◆CheckPoint/Backup Information)

- Display the number of checkpoint executions, the number of backups performed, etc., as shown in the screenshot above.

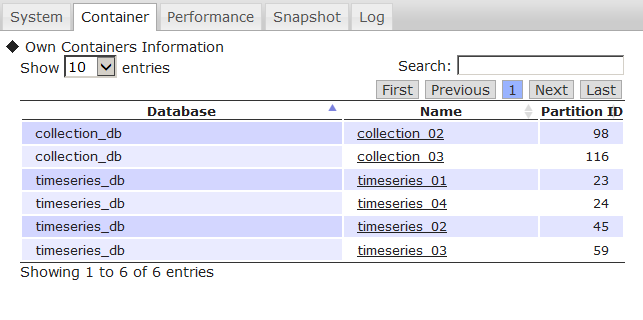

3.4.7 Container list screen

Summary

The container list screen contains information on containers, such as container names and the databases they belong to.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Node | Container |

Screen

Container list screen

Functions

The following functions are available in the container list screen.

Displaying the container list (◆Own Containers Information)

Display a list of the containers stored in a node.

- Display the database name, container name and partition ID.

Hyperlink to the container details screen

- Click a container name to move to the container details screen and view detailed information on the container.

[Memo]

- The container tree needs to be initialized before moving to the container details screen. If the container details cannot be displayed, click the ContainerTree tab on the Tree once to initialize the container tree.

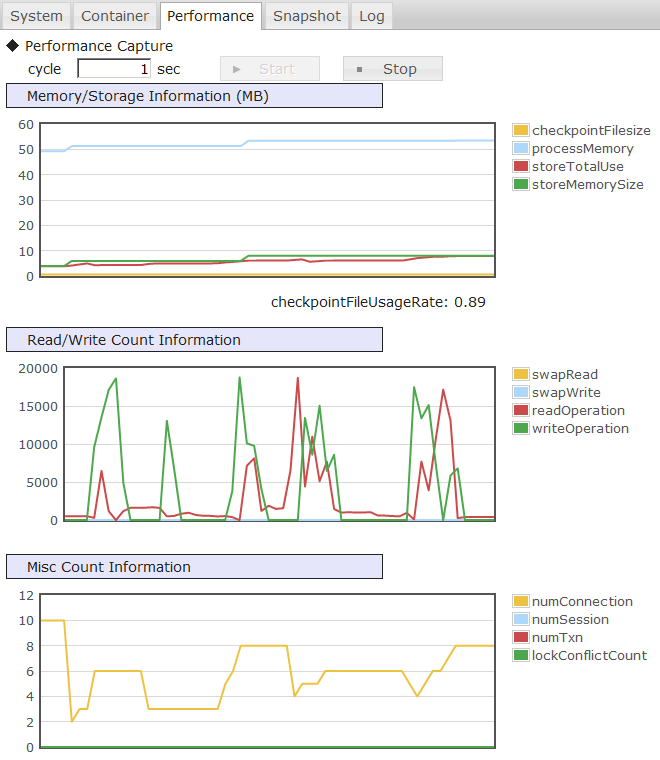

3.4.8 Performance data screen

Summary

The performance data screen shows graphs of node performance, such as memory/storage, read/write counts and miscellaneous counts.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Node | Performance |

Screen

Performance data screen

Functions

The following functions are available in the performance data screen.

Plotting graphs (◆Performance Capture)

- Click the Start button to plot the following 3 graphs of node performance, updated at the specified interval.

- The operation can be stopped with the Stop button.

Memory/Storage Information

- Display the volume of data held in memory and stored on disk.

- The unit is MB.

Read/Write Count Information

- Display the number of reads from and writes to memory or disk over time.

Misc Count Information

- Display the numbers of current connections, sessions, transactions and lock bids generated.

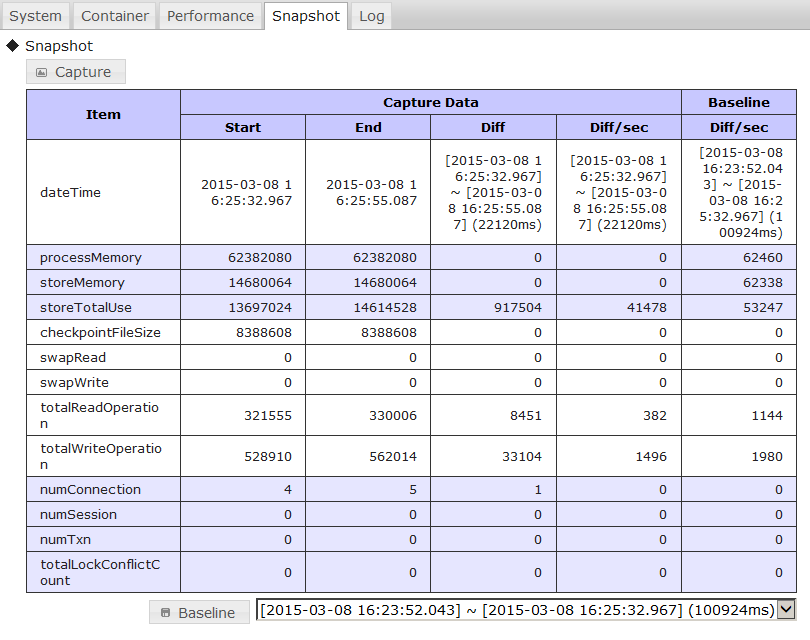

3.4.9 Snapshot screen

Summary

The snapshot screen shows the node's performance at a point in time. The values can be compared with the values measured earlier.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Node | Snapshot |

Screen

Snapshot screen

Functions

The following functions are available in the snapshot screen.

Gathering performance data

- Click the Capture button to get the current performance data of the node.

Differential display

- Click the Capture button repeatedly to display the difference (Diff) between the previous performance data (Start) and the current performance data (End), together with the baseline.

Displaying and saving the baseline

- Click the Baseline button to save the displayed differential values per second as the baseline.

- Saved baselines can be selected from the selection box, which shows only the latest 10.

- The baseline is saved in the capture directory of adminHome, named after the time shown in Start.
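The differential and per-second values described above amount to subtracting two captures and dividing by the elapsed time. A minimal sketch, assuming each capture is a dict of cumulative counters (the counter names `readCount`/`writeCount` and the helper `snapshot_diff` are hypothetical):

```python
from datetime import datetime


def snapshot_diff(start, end, start_time, end_time):
    """Return (diff, per_second) for counters present in both captures.

    start/end: dicts of cumulative counter values (hypothetical names).
    per_second corresponds to what this sketch would save as a baseline.
    """
    seconds = (end_time - start_time).total_seconds()
    if seconds <= 0:
        raise ValueError("End capture must be later than Start capture")
    diff = {k: end[k] - start[k] for k in start if k in end}
    per_second = {k: v / seconds for k, v in diff.items()}
    return diff, per_second


t_start = datetime(2024, 1, 1, 12, 0, 0)
t_end = datetime(2024, 1, 1, 12, 0, 10)
diff, rate = snapshot_diff({"readCount": 100, "writeCount": 40},
                           {"readCount": 400, "writeCount": 70},
                           t_start, t_end)
print(diff)  # {'readCount': 300, 'writeCount': 30}
print(rate)  # {'readCount': 30.0, 'writeCount': 3.0}
```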

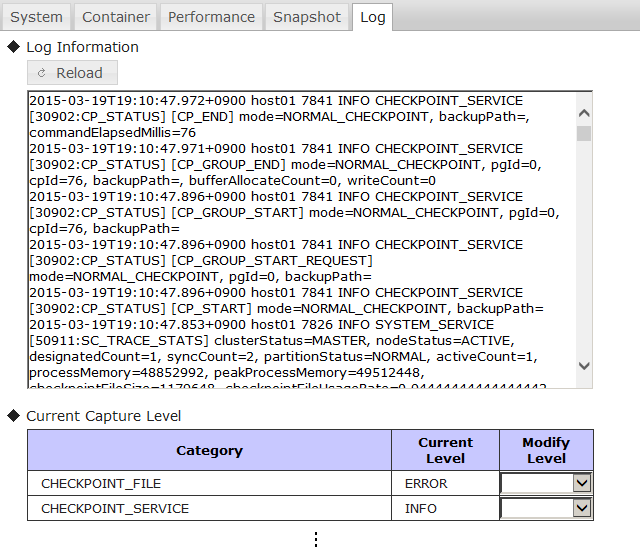

3.4.10 Log screen

Summary

The log screen displays the event log of a node and the setting of its output level.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Cluster tree | Node | Log |

Screen

Log screen

Functions

The following functions are available in the log screen.

Displaying the event log (◆Log Information)

- Display the last 30 event logs of the node.

- Event logs can be acquired again with the reload button.

Event log output level display and setting (◆Current Capture Level)

- The current event log output level can be checked for each category.

- The log output levels are ERROR, WARNING, INFO and DEBUG.

- A changed log level is reset to its initial value when the node is restarted. To make the change permanent, edit the node definition file (gs_node.json) of the target node.

[Points to note]

- Be sure to follow the instructions of the support desk when changing the log output level.

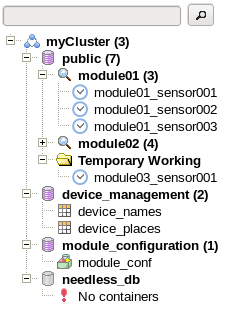

3.5 Container tree-related functions

3.5.1 Container tree

Summary

In a container tree, the databases and containers which exist in a cluster under management are displayed in a tree format.

The cluster under management is displayed at the top of the tree (the number in parentheses is the total number of databases in the cluster).

Container tree

A description of the icons shown in a container tree is given below.

| Icon | Description |
|---|---|
| | Cluster |
| | Database |
| | Database (does not exist) |
| | Container (collection) |
| | Container (timeseries container) |
| | Partitioned table (container) |
| | Search folder |
| | Temporary work folder |
| | Message |

Functions

The following functions are available in a container tree.

Displaying cluster operation functions

- Upon selecting a cluster, a list of functions such as database creation appears in the View.

Auto detection of databases

- Automatically detects all databases existing in a cluster and displays them under the cluster.

- If a displayed database no longer exists in the cluster, it can be deleted from the Tree.

Selecting a database subject to operation

- Upon selecting a database, a list of functions such as container creation appears in the View.

Searching for containers

- Containers in each database can be searched using keywords. Multiple search conditions can be given by separating them with a single-byte space.

- To search, select a database or search folder, enter the keywords in the search bar at the top, and then click the search button or press the Enter key.

- A search folder is created for each search. The number in parentheses is the number of containers found.

- Search results can be narrowed down by nesting search folders.

- Search folders can be deleted with the context menu or the Delete key. Deleting a search folder does not delete the containers displayed in it from the cluster.

Deleting a container

- A container can be deleted from a cluster with the context menu or the Delete key. A confirmation dialog is displayed before deletion.

Selecting a container subject to operation

- The icon changes depending on the type of container selected.

- Upon selecting a container, detailed information on the container appears in the View.

Temporary work folder

- This folder is created under the database when a container is created in the container creation screen, or when a container name is clicked in the container data screen.

- It can be deleted with the context menu or the Delete key. Deleting it does not delete the containers displayed in it from the cluster.

Saving the tree structure

- The tree structure after a search is saved and loaded at the next login. (A separate file is kept for each cluster and user.)

- Saved objects include clusters, databases and search folders. Search results, containers and temporary work folders are not saved.

- These are saved in /var/lib/gridstore/admin/tree/foldertree-[cluster name]-[user name].json on the machine running Tomcat.

After login, the ClusterTree tab and node list are displayed automatically. When you switch to the ContainerTree tab, the saved tree structure, if any, is restored automatically. However, the searches in search folders are not re-run automatically.
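The keyword search and nested search folders can be pictured as repeated filtering. The sketch below assumes substring matching, AND semantics between keywords, and case-sensitive comparison; none of these is confirmed by this guide. Splitting only on the ASCII space reflects the "single-byte space" rule, so a full-width space stays inside a keyword. `search` is a hypothetical helper.

```python
def search(container_names, query):
    """Return names containing every keyword in the query.

    Keywords are separated by a single-byte (ASCII) space only.
    Substring matching, case sensitivity and AND semantics are assumptions.
    """
    keywords = [k for k in query.split(" ") if k]
    return [n for n in container_names if all(k in n for k in keywords)]


names = ["sensor_tokyo_2023", "sensor_osaka_2024", "meter_tokyo_2024"]
print(search(names, "sensor 2024"))  # ['sensor_osaka_2024']

# A nested search folder narrows its parent folder's results further.
tokyo = search(names, "tokyo")
print(search(tokyo, "2024"))  # ['meter_tokyo_2024']
```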

The following operations cannot be carried out in a container tree.

Searching for containers across databases

- Containers in a cluster cannot be searched across databases.

Creation/deletion of databases

- Perform the operation from the database management screen.

Creation of containers

- Perform the operation from the container creation screen.

Deletion of partitioned tables (containers)

- Perform the operation from the SQL screen with a SQL command.

Context menu

When an element of the tree is right-clicked, a context menu for that element appears. Select an item from the menu to update data or operate on the element.

The menus and functions for the respective selected elements are as follows.

| Selected element | Menu | Function |
|---|---|---|
| Cluster | refresh | Read the tree structure again and automatically detect databases |
| Database | refresh | Check that the database exists and search for containers again |
| Container | refresh | Display the latest container information in the View |
| | drop | Delete the container (with confirmation dialog) |
| Search folder | refresh | Search for containers again |
| | remove | Delete the folder |
| Temporary work folder | remove | Delete the folder |

[Memo]

- Each function of a container tree can be used only when a cluster is in operation.

Operating target and view tab

When an element of the tree is left-clicked, the functions for that element appear in the View. Switch between functions with the tabs at the top of the View.

| Selected element | Tab | Screen name | Function overview |
|---|---|---|---|
| Cluster | Database | Database management screen | A database can be created or deleted, and access rights can be granted or revoked. |
| | User | User management screen | A general user can be created or deleted, and the password can be changed. |
| | SQL | SQL screen | The results of a SQL command executed on the database are displayed. |
| Database | Create | Container creation screen | A container can be created in the database. |
| | SQL | SQL screen | The results of a SQL command executed on the database are displayed. |
| Container | Details | Container details screen | The column and index configuration data of the container are displayed. |
| | Index | Index setting screen | An index can be created or deleted for each column of the container. |
| | Trigger | Trigger setting screen | A container trigger can be created, edited or deleted. |
| | TQL | TQL screen | A TQL (query language) query is executed on the container and the results are displayed. |
| Partitioned table (container) | Details | Container details screen | The column, index and table partitioning data of the container are displayed. |

3.5.2 Database management screen

Summary

The database management screen has two components: creating and deleting (dropping) databases, and granting and revoking database access rights for general users.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Cluster | Database |

Screen

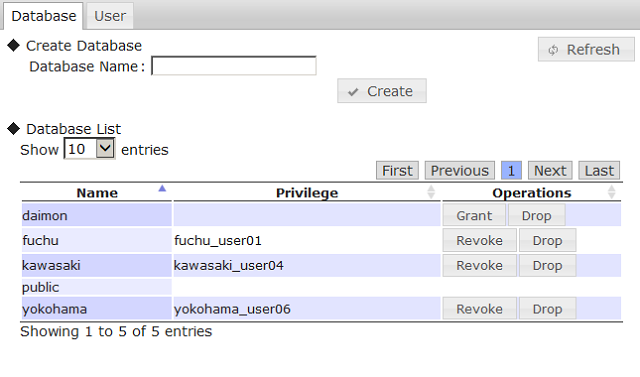

Database management screen

Functions

The following functions are available in the database management screen.

Creating a database (◆Create Database)

- Enter the database name and click the Create button to create a database in the cluster.

Displaying the database list (◆Database List)

Display a list of the databases existing in the cluster.

- Display the database name, access rights (Privilege), and operations on the database.

- The access rights (Privilege) column shows the general users who have access rights to the database.

- The public database cannot be operated on.

Deleting a database (Drop)

- Delete a database from the cluster.

- An error occurs if a container exists in the database.

Assigning access rights (Grant)

- Assign database access rights to a general user.

- Click Grant to display the user selection dialog, and select a general user existing in the cluster from the selection box.

- Only one general user can have access rights to a database.

- A single user can have access rights to multiple databases.

Revoking access rights (Revoke)

- Revoke database access rights from a general user.

- When a general user is deleted from the user list in the user management screen, all access rights of that user are automatically revoked.
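The grant rules above form a one-to-many relationship: a database has at most one general user, while a user can hold several databases, and deleting a user revokes everything that user holds. The in-memory class below is a hypothetical model of those rules for illustration only; it is not how GridDB stores privileges.

```python
class AccessRights:
    """Hypothetical model: at most one general user per database,
    any number of databases per user."""

    def __init__(self):
        self._owner = {}  # database name -> general user name

    def grant(self, database, user):
        current = self._owner.get(database)
        if current is not None and current != user:
            raise ValueError(f"{database} is already granted to {current}")
        self._owner[database] = user

    def revoke(self, database, user):
        if self._owner.get(database) == user:
            del self._owner[database]

    def drop_user(self, user):
        """Deleting a user revokes all of that user's access rights."""
        for db in [d for d, u in self._owner.items() if u == user]:
            del self._owner[db]

    def databases_of(self, user):
        return sorted(d for d, u in self._owner.items() if u == user)


rights = AccessRights()
rights.grant("db1", "alice")
rights.grant("db2", "alice")          # one user, several databases: allowed
print(rights.databases_of("alice"))   # ['db1', 'db2']
try:
    rights.grant("db1", "bob")        # a second user on db1: rejected
except ValueError as e:
    print(e)                          # db1 is already granted to alice
rights.drop_user("alice")
print(rights.databases_of("alice"))   # []
```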

3.5.3 User management screen

Summary

In the user management screen, general users can be added and deleted, and their passwords can be changed.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Cluster | User |

Screen

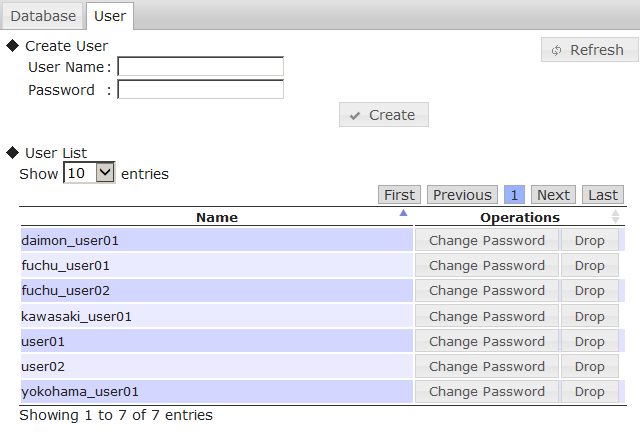

User management screen

Functions

Creating a user (◆Create User)

- Enter the user name and password, and click the Create button to register a general user in the cluster.

Displaying the user list (◆User List)

Display a list of the general users existing in the cluster.

- Display the user name and operations on the user.

Deleting a user (Drop)

- Delete a general user from the cluster.

- If the user has access rights to a database, all of those access rights are automatically revoked.

Changing a password (Change Password)

- The password of a user can be changed. The current password is not needed.

- Click Change Password to display the input dialog for a new password. Enter the new password and click Change.

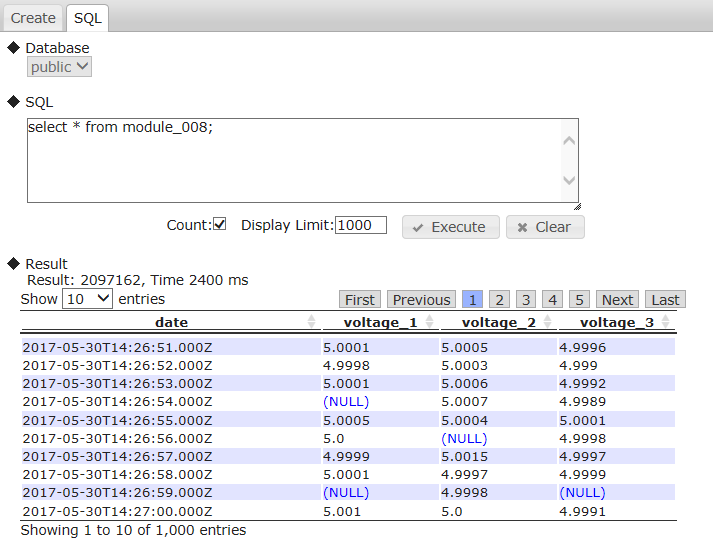

3.5.4 SQL screen

This function can be used in the GridDB Advanced Edition only.

Summary

The results of a SQL command executed on the database are displayed.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Cluster | SQL |
| Container tree | Database | SQL |

Screen

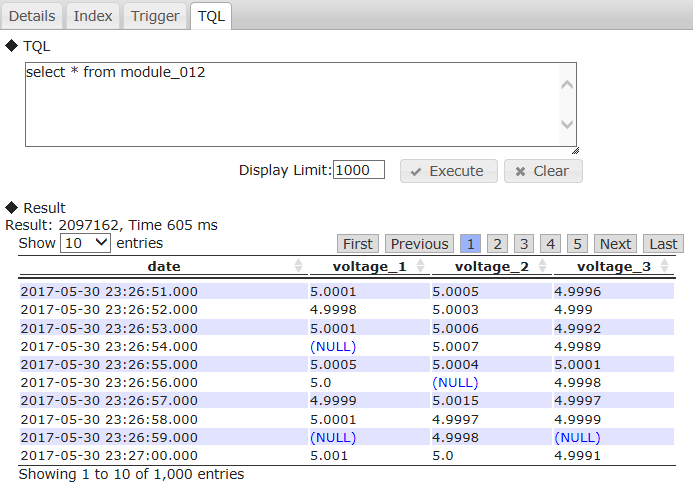

SQL screen

Functions

The following functions are available in the SQL screen.

Selecting the database subject to execution (◆Database)

- A database existing in the cluster can be selected with the selection box.

- If a database is selected in the container tree, the selection is fixed to that database.

SQL execution (◆SQL)

Enter a SQL command and click the Execute button to execute it. The results are displayed in ◆Result.

- Input can span multiple lines, but only one SQL command can be executed at a time.

- Input can be cleared with the Clear button.

- If Count is set, the number of hits is displayed; otherwise it is not. (Skipping the count makes the response faster.)

- Display Limit sets the number of rows to display.

- See “GridDB Advanced Edition SQL Reference” (GridDB_AE_SQL_Reference.html) for details of the SQL syntax that can be used.

Displaying results (◆Result)

If a SELECT command is executed, the results are displayed in a table together with the number of hits, the number of rows retrieved, and the elapsed time (ms).

- The elapsed time is the execution time of the SQL statement in ms.

- NULL values and array-type values are displayed as (NULL). BLOB values are displayed as (BLOB).

A TIMESTAMP value is displayed in the following format.

- Date and time format: ISO8601 format

- Timezone: UTC

- Example: 2018-11-07T12:30:00.417Z

If an INSERT/DELETE/UPDATE command is executed, the number of rows is displayed. If a DCL or DDL command is executed, SUCCESS is displayed in the results.
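To reproduce the displayed TIMESTAMP form client-side, for example when comparing screen output with values fetched elsewhere, a value can be rendered as ISO 8601 in UTC with millisecond precision and a "Z" suffix. This sketch assumes the value is available as milliseconds since the Unix epoch; `format_timestamp` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def format_timestamp(epoch_millis):
    """Render milliseconds since the Unix epoch as ISO 8601 in UTC with
    millisecond precision and a "Z" suffix, e.g. 2018-11-07T12:30:00.417Z."""
    dt = EPOCH + timedelta(milliseconds=epoch_millis)  # exact integer arithmetic
    return dt.isoformat(timespec="milliseconds").replace("+00:00", "Z")


ms = (datetime(2018, 11, 7, 12, 30, 0, 417000, tzinfo=timezone.utc) - EPOCH) // timedelta(milliseconds=1)
print(format_timestamp(ms))  # 2018-11-07T12:30:00.417Z
```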

[Memo]

- When using this screen, the JDBC address and port must be added to the cluster data of the node repository.

- Settings in the node repository can be made from the repository management screen.

- When the number of columns to display exceeds 1024, fewer rows are displayed at once and some display formats change.

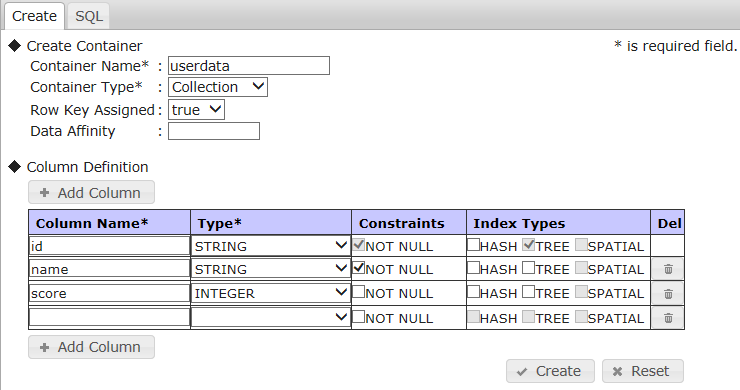

3.5.5 Container creation screen

Summary

The container creation screen allows a container to be created and its columns to be configured.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Database | Create |

Screen

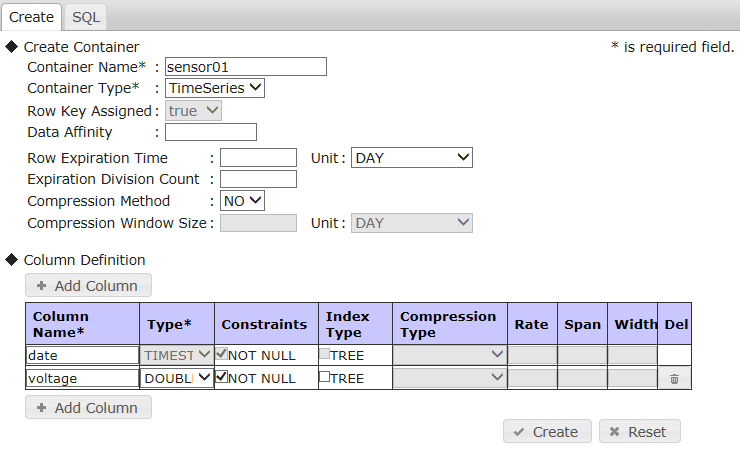

Container creation screen (collection)

Container creation screen (timeseries container)

Functions

The following functions are available in the container creation screen.

Creating a container

- Specify the container name and container type, followed by the parameters for that container type, and then click the Create button to create a container in the database.

Selecting the container type

- The container type can be either a collection (Collection) or a timeseries container (TimeSeries).

- The parameters and column contents that can be set differ depending on the container type.

Column settings

- Columns can be configured when creating a container. The upper limit of the number of columns is 100.

- A column can be added with the Add Column button and deleted with the Del button on the right side of the column.

- All settings except the container type can be returned to their initial state with the Reset button.

- If a collection is selected as the container type and Row Key Assigned is set to true, the first column is set up as the row key.

- When a row key is set up, the data type of the first column is limited to the types that can be used for a row key, and the NOT NULL constraint is fixed as true.

- If a timeseries container is selected as the container type, the data type of the first column is fixed as TIMESTAMP and index settings are disabled. The NOT NULL constraint is also fixed as true.

Adding to the container tree

- If a container is created successfully, a temporary work folder is created under the database in the container tree, and the created container is added automatically under the folder.
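The column rules above can be expressed as a validation function, for example when preparing column definitions outside the GUI. This is a sketch, not the screen's own logic: the dict layout of a column, the helper `validate_columns`, and the set of row-key types (assumed to be STRING, INTEGER, LONG and TIMESTAMP; check the GridDB API reference for your version) are assumptions.

```python
MAX_COLUMNS = 100
# Assumed set of types usable as a collection row key; verify against the
# GridDB API reference for the version in use.
ROW_KEY_TYPES = {"STRING", "INTEGER", "LONG", "TIMESTAMP"}


def validate_columns(container_type, columns, row_key_assigned=False):
    """Check a column list against the container creation rules.

    container_type: "Collection" or "TimeSeries".
    columns: list of dicts with hypothetical keys "name", "type", "not_null".
    Returns a list of error messages (empty when the settings are valid).
    """
    if not columns:
        return ["no columns defined"]
    errors = []
    if len(columns) > MAX_COLUMNS:
        errors.append(f"too many columns: {len(columns)} > {MAX_COLUMNS}")
    first = columns[0]
    if container_type == "TimeSeries":
        if first["type"] != "TIMESTAMP":
            errors.append("first column of a timeseries container must be TIMESTAMP")
        if not first["not_null"]:
            errors.append("first column must be NOT NULL")
    elif container_type == "Collection" and row_key_assigned:
        if first["type"] not in ROW_KEY_TYPES:
            errors.append(f"{first['type']} cannot be used as a row key type")
        if not first["not_null"]:
            errors.append("row key column must be NOT NULL")
    return errors


cols = [{"name": "ts", "type": "TIMESTAMP", "not_null": True},
        {"name": "value", "type": "DOUBLE", "not_null": False}]
print(validate_columns("TimeSeries", cols))  # []
```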

[Memo]

- See “GridDB API reference” (GridDB_API_Reference.html) for the detailed settings.

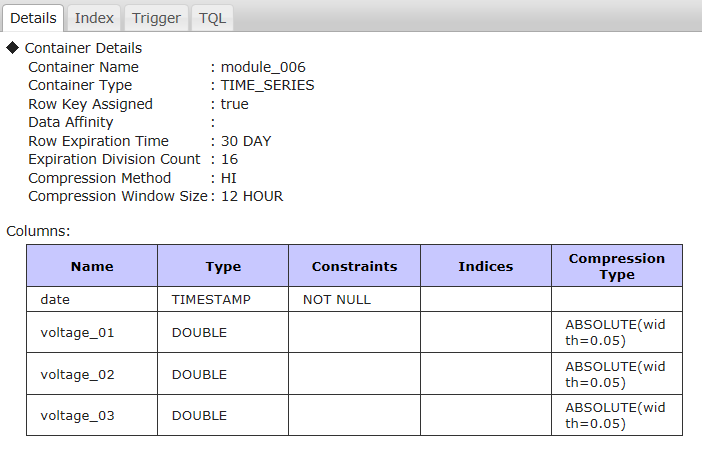

3.5.6 Container details screen

Summary

The container details screen contains column and index configuration data of a container.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Container | Details |

Screen

Container details screen

Functions

The following functions are available in the container details screen.

Displaying container parameters

- Display the container parameters, including the container name and container type.

Displaying column data of a container

Display the following data as column data of a container.

- Column name, data type, column constraints, index, compression data (timeseries container only), table partitioning data (partitioned table only)

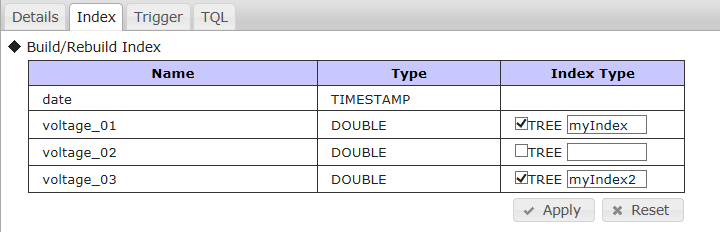

3.5.7 Index setting screen

Summary

The index setting screen allows an index to be created or deleted for each column of a container.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Container | Index |

Screen

Index setting screen

Functions

The following functions are available in the index setting screen.

-

Index setting of a column (◆Build/Rebuild Index)

- Select/deselect a check box and click the Apply button to create/delete the target index.

- An index name can be specified in textbox.

- Click the Reset button to dismiss any changes made to the index.

[Memo]

- If the index name is changed and the change is applied, the index is recreated.

- The upper limit of the number of operative columns in this screen is 100.
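Conceptually, clicking Apply compares the check boxes and index names before and after editing. The sketch below models that comparison as a simple diff, as an illustration only: the dictionaries (column name to index name, with `None` for an unnamed index) and the function name are hypothetical and do not describe how gs_admin is implemented internally. A renamed index is reported as "recreate", following the Memo about changed index names.

```python
from typing import Dict, List, Optional


def plan_index_changes(current: Dict[str, Optional[str]],
                       desired: Dict[str, Optional[str]]) -> Dict[str, List[str]]:
    """Return the columns whose index would be created, deleted or recreated.

    current / desired: column name -> index name (None = no explicit name).
    """
    # Newly checked columns get an index.
    create = sorted(c for c in desired if c not in current)
    # Unchecked columns lose their index.
    delete = sorted(c for c in current if c not in desired)
    # Still checked but renamed: the index is recreated.
    recreate = sorted(c for c in desired if c in current and desired[c] != current[c])
    return {"create": create, "delete": delete, "recreate": recreate}
```

For example, renaming the index on column `a`, unchecking `b` and checking `c` yields one operation of each kind.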

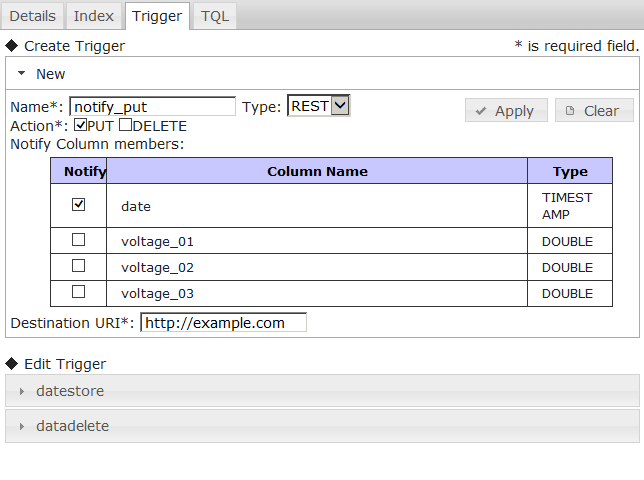

3.5.8 Trigger setting screen

Summary

The trigger setting screen allows triggers on a container to be created, edited, and deleted.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Container | Trigger |

Screen

Trigger setting screen

Functions

The following functions are available in the trigger setting screen.

- Creating a trigger (◆Create Trigger)

- To create a trigger on the container, click New to display the configuration fields, enter the settings, and then click the Apply button.

- Click the Clear button to dismiss the changes and return to the default values.

- The trigger name (Name), the operation under monitoring (Action), and the notification destination URI (Destination URI) are required. If the notification method (Type) is set to JMS, the notification name (Destination Name) in the JMS settings is also required.

- Notification methods (Type) include REST and JMS. Settings vary depending on the notification method.

- The operation under monitoring (Action) is set by selecting the PUT and/or DELETE check boxes.

- The columns under monitoring (Notify Column members) are set by selecting their check boxes.

- Editing a trigger (◆Edit Trigger)

- When the list of triggers appears, click a trigger's name to display its configuration.

- Only the notification destination URI (Destination URI) and the JMS settings can be edited.

- Click the Apply button to save the edited contents.

- Click the Delete button to delete the trigger.

- Click the Clear button to clear the settings.

[Memo]

- See “GridDB API reference” (GridDB_API_Reference.html) for the details.
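The required-field and editing rules listed above can be summarized as a small validation routine, which may help when trigger definitions are prepared in advance. This is a minimal sketch: the `TriggerSettings` class and its field names are hypothetical stand-ins for the screen items (Name, Type, Action, Destination URI, Destination Name, Notify Column members), and "JMS settings" is simplified to the Destination Name field only.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TriggerSettings:
    # Hypothetical model of the screen items; not a GridDB API type.
    name: str = ""
    type: str = "REST"                                   # "REST" or "JMS"
    actions: List[str] = field(default_factory=list)     # subset of PUT/DELETE
    destination_uri: str = ""
    destination_name: Optional[str] = None               # JMS only
    notify_columns: List[str] = field(default_factory=list)


# Only the Destination URI and (simplified) JMS settings may change on edit.
EDITABLE_FIELDS = {"destination_uri", "destination_name"}


def validate_new_trigger(t: TriggerSettings) -> List[str]:
    """Return the problems that would block creating this trigger."""
    errors = []
    if not t.name:
        errors.append("Name is required")
    if not t.actions:
        errors.append("select at least one Action (PUT/DELETE)")
    elif not set(t.actions) <= {"PUT", "DELETE"}:
        errors.append("Action must be PUT and/or DELETE")
    if not t.destination_uri:
        errors.append("Destination URI is required")
    if t.type not in ("REST", "JMS"):
        errors.append("Type must be REST or JMS")
    elif t.type == "JMS" and not t.destination_name:
        errors.append("Destination Name is required for JMS")
    return errors


def is_allowed_edit(old: TriggerSettings, new: TriggerSettings) -> bool:
    """True if only editable fields differ between the two settings."""
    changed = {k for k, v in vars(old).items() if getattr(new, k) != v}
    return changed <= EDITABLE_FIELDS
```

Changing anything other than the destination (for example, the monitored actions) would in practice mean deleting the trigger and creating a new one.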

3.5.9 TQL screen

Summary

In the TQL screen, a TQL (query language) statement can be executed on a container and its results displayed.

Method of use

| Type of tree | Operating target | Tab |
|---|---|---|
| Container tree | Container | TQL |

Screen

TQL screen

Functions

The following functions are available in the TQL screen.

- TQL execution (◆TQL)

- Enter a TQL command and click the Execute button to execute it. The results are displayed in ◆Result.

- Line breaks can be included in the input, but only one TQL command can be executed at a time.

- The FROM clause can be omitted, since the command is executed on the container selected as the operating target.

- The input box can be cleared with the Clear button.

- The maximum number of rows to display is specified in Display Limit.

- Displaying result (◆Result)

- The number of hits, the rows retrieved (in table format), and the elapsed time (ms) are displayed. The number of hits is not displayed for a partitioned table.

- The execution time of the TQL statement is displayed as elapsed time in ms.

- A NULL value is displayed as (NULL). A value of BLOB is displayed as (BLOB).

- A TIMESTAMP value is displayed in the following format:

- Date and time format: ISO8601 format

- Timezone: UTC

- Example: 2018-11-07T12:30:00.417Z


[Memo]

- See “GridDB API Reference” (GridDB_API_Reference.html) for the TQL details.

- When more than 1024 columns are displayed, fewer rows are displayed at a time and some display formats change.
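Because TIMESTAMP values are always shown in ISO 8601 format in UTC with millisecond precision, it can be handy to convert between the displayed text and epoch milliseconds when comparing results with application data. The sketch below assumes only the display format described above; the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_display(epoch_ms: int) -> str:
    """Format epoch milliseconds like the Result pane (ISO 8601, UTC, ms)."""
    dt = EPOCH + timedelta(milliseconds=epoch_ms)
    # isoformat() writes "+00:00" for UTC; the screen shows "Z" instead.
    return dt.isoformat(timespec="milliseconds").replace("+00:00", "Z")


def from_display(text: str) -> int:
    """Convert a displayed value such as 2018-11-07T12:30:00.417Z to epoch ms."""
    dt = datetime.fromisoformat(text.replace("Z", "+00:00"))
    # Integer division of timedeltas gives an exact millisecond count.
    return (dt - EPOCH) // timedelta(milliseconds=1)
```

For example, `to_display(1541593800417)` gives `2018-11-07T12:30:00.417Z`, the sample value above. Since the display is always UTC, a value entered in local time will appear shifted by the client's UTC offset.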

3.6 How to use gs_admin

This section provides a guide on how to use various functions accessible by gs_admin.

3.6.1 Commencing management of a cluster in operation

To manage a cluster that is already in operation with gs_admin, use the repository management function and follow the procedure below.

- Select the repository manager on the login screen and log in as a gs_admin administrator user.

- Click the Sync button, enter the following connection data for the cluster in operation, and then click Sync to synchronize the data.

- IP address: specify /system/serviceAddress of the node definition file (gs_node.json).

- Port: specify /system/servicePort of the node definition file (gs_node.json).

- Specify

- Data of a cluster in operation will be reflected in the cluster list and node list.

- Click the Save button to save repository data.

- Click the Logout button to return to the login screen.

- Select the name of the cluster in operation from the list of clusters on the login screen.

- Log in as a gs_admin administrator user or a normal user to start using the operation functions.
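The IP address and port entered in the Sync dialog come from `/system/serviceAddress` and `/system/servicePort` in a node's gs_node.json. The following minimal sketch reads them. The function names are hypothetical. The example path `/var/lib/gridstore/conf/gs_node.json` is an assumption based on the conf directory used elsewhere in this guide. If a key is absent from the file, the sketch returns `None` instead of guessing a default.

```python
import json
from typing import Optional, Tuple


def parse_sync_target(node_json_text: str) -> Tuple[Optional[str], Optional[int]]:
    """Return (serviceAddress, servicePort) from gs_node.json text."""
    system = json.loads(node_json_text).get("system", {})
    address = system.get("serviceAddress")
    port = system.get("servicePort")
    # Missing keys are reported as None; the node's defaults are not assumed.
    return address, (int(port) if port is not None else None)


def read_sync_target(path: str = "/var/lib/gridstore/conf/gs_node.json"):
    """Read the values from a file (default path is an assumption)."""
    with open(path, encoding="utf-8") as f:
        return parse_sync_target(f.read())
```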

3.6.2 Managing multiple clusters

When managing multiple clusters as a single gs_admin user, take note of the gs_admin user settings.

gs_admin users are managed in a single file. Therefore, if an administrator who manages multiple clusters uses a different password for each cluster, that administrator cannot be registered as a single gs_admin user.

Configure the settings according to the number of administrators in charge of the clusters.

- When multiple clusters are managed by different users

- Choose a unique name for each gs_admin user.

- When multiple clusters are managed by a single user

- Register the gs_admin user with the same password in all the clusters to be managed.
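When one administrator manages several clusters, it can be worth checking that the user is registered with the same password everywhere. The sketch below compares the `user,hash` lines of each cluster's password file, using the format shown by `cat /var/lib/gridstore/conf/password` in the example below. It assumes that equal passwords produce equal stored values, which the unsalted-looking sample entries suggest but this guide does not state. The cluster names and function names are hypothetical.

```python
from typing import Dict, List


def parse_password_file(text: str) -> Dict[str, str]:
    """Parse 'user,hash' lines into a dict (blank lines are ignored)."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if line:
            user, _, digest = line.partition(",")
            entries[user] = digest
    return entries


def check_same_password(files: Dict[str, str], user: str) -> List[str]:
    """Report clusters where `user` is missing or has a different stored password.

    files: cluster name -> contents of that cluster's password file.
    """
    problems = []
    digests = {}
    for cluster, text in files.items():
        digest = parse_password_file(text).get(user)
        if digest is None:
            problems.append(f"{user} is not registered in {cluster}")
        else:
            digests[cluster] = digest
    if len(set(digests.values())) > 1:
        problems.append(f"{user} has different passwords in: {', '.join(sorted(digests))}")
    return problems
```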

The procedure to register a new gs_admin user is shown below.

- Use the gs_adduser command to add an administrator user to one node of the cluster that you want to manage as the new user.

Example: If the new user name/password is gs#newuser/newuser

$ su - gsadm

$ gs_adduser gs#newuser -p newuser

$ cat /var/lib/gridstore/conf/password

admin,8c6976e5b5410415bde908bd4dee15dfb167a9c873fc4bb8a81f6f2ab448a918

gs#newuser,9c9064c59f1ffa2e174ee754d2979be80dd30db552ec03e7e327e9b1a4bd594e

system,6ee4a469cd4e91053847f5d3fcb61dbcc91e8f0ef10be7748da4c4a1ba382d17

- Distribute the updated user definition file (/var/lib/gridstore/conf/password) to all the other nodes of the cluster.

- Restart all the nodes to reconstitute the cluster.

- As the Tomcat execution user, add the user name and password registered above to the gs_admin user definition file.

Example: If the new user name/password is gs#newuser/newuser

$ echo gs#newuser,9c9064c59f1ffa2e174ee754d2979be80dd30db552ec03e7e327e9b1a4bd594e >> /var/lib/gridstore/admin/conf/password
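Appending with `echo ... >>` adds a duplicate line if the step is repeated. The sketch below is an idempotent alternative. It copies the user's line from a node's password file into the gs_admin password file and replaces an existing entry for that user rather than appending a second one. It works on file contents as strings. The caller is assumed to read `/var/lib/gridstore/conf/password` and `/var/lib/gridstore/admin/conf/password` (the paths used above) and to write the result back as the Tomcat execution user. The function name is hypothetical.

```python
def merge_user_entry(node_password_text: str, admin_password_text: str, user: str) -> str:
    """Return new gs_admin password file contents containing `user`'s node entry.

    Raises KeyError if `user` is not in the node's password file.
    """
    prefix = user + ","
    entry = next((line.strip() for line in node_password_text.splitlines()
                  if line.strip().startswith(prefix)), None)
    if entry is None:
        raise KeyError(f"{user} is not in the node password file")
    lines = [line.strip() for line in admin_password_text.splitlines() if line.strip()]
    for i, line in enumerate(lines):
        if line.startswith(prefix):
            lines[i] = entry  # already registered: update in place
            break
    else:
        lines.append(entry)   # new registration
    return "\n".join(lines) + "\n"
```

Rerunning the merge leaves the file unchanged, which makes the step safe to repeat on every management host.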

3.7 Gathering of error data

(1) Log output

gs_admin error data and other logs are output to the adminHome log directory.

The log output level can be set in /webapps/gs_admin/WEB-INF/classes/logback.xml under the Tomcat home directory (/usr/local/tomcat by default).

The output level is INFO by default.

These logs are used to collect data when a gs_admin problem occurs or when requested by the support desk.

<?xml version="1.0" encoding="UTF-8" ?>

<configuration>

<property resource="conf/gs_adminPath.properties" />

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<fileNamePattern>${adminHome}/log/gs_admin-%d{yyyyMMdd}.log</fileNamePattern>

<maxHistory>10</maxHistory>

</rollingPolicy>

<encoder>

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} %level [%thread] %logger [%file::%line] %msg%n</pattern>

</encoder>

</appender>

<root level="info">

<appender-ref ref="FILE" />

</root>

</configuration>
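To raise the log level temporarily (for example, to `debug` while reproducing a problem), the `level` attribute of the `<root>` element in the configuration above can be changed. The sketch below does this with Python's standard `xml.etree.ElementTree`. It needs Python 3.8 or later for `xml_declaration` in `tostring`. Note that the stdlib parser drops XML comments, although the file shown has none. The function name is hypothetical. The level names are logback's standard levels.

```python
import xml.etree.ElementTree as ET

# Standard logback levels (case-insensitive in logback).
LOGBACK_LEVELS = {"all", "trace", "debug", "info", "warn", "error", "off"}


def set_root_level(xml_bytes: bytes, level: str) -> bytes:
    """Return logback.xml content with <root level="..."> set to `level`."""
    level = level.lower()
    if level not in LOGBACK_LEVELS:
        raise ValueError(f"unsupported logback level: {level}")
    config = ET.fromstring(xml_bytes)
    root_logger = config.find("root")
    if root_logger is None:
        raise ValueError("logback.xml has no <root> element")
    root_logger.set("level", level)
    return ET.tostring(config, encoding="UTF-8", xml_declaration=True)
```

Read the file in binary mode, keep a backup, and write the result back. The configuration shown has no `scan="true"` attribute, so logback does not reload it automatically, and the change most likely takes effect only after gs_admin (Tomcat) is restarted.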

(2) Performance data collection

For gs_admin troubleshooting, a parameter to collect performance data is provided in gs_admin.properties.

Setting this parameter (logging.performance) to true adds performance data to the log output under adminHome/log. The default value is false.
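Editing `logging.performance` by hand is simple, but scripting the change avoids leaving a duplicate or stale entry. The sketch below sets a key in `.properties` text. It replaces an existing uncommented `key=value` or `key:value` line, or appends the key if none exists. It does not handle whitespace-only separators or line continuations. This guide does not give the location of gs_admin.properties, so the caller supplies the file contents. The function name is hypothetical.

```python
import re


def set_property(text: str, key: str, value: str) -> str:
    """Set key=value in .properties text, replacing or appending the entry."""
    # Matches an uncommented "key=..." or "key:..." line; "#key=..." is ignored.
    pattern = re.compile(rf"^(\s*){re.escape(key)}\s*[=:].*$", re.MULTILINE)
    if pattern.search(text):
        # A function replacement avoids backslash escapes in `value`.
        return pattern.sub(lambda m: f"{m.group(1)}{key}={value}", text, count=1)
    if text and not text.endswith("\n"):
        text += "\n"
    return text + f"{key}={value}\n"
```

For example, `set_property(contents, "logging.performance", "true")` enables performance data collection. Set it back to `false` once the data has been collected.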

3.8 Error list

| Error type | Error no. | Message | Treatment method |

|---|---|---|---|

| Internal Server Error | E00104 | Cluster is not servicing. | Cluster under management is not operating. Use the configuration tab and other operation tools to operate the cluster, refresh the clusters from the cluster tree, or login again. |

| Internal Server Error | E00105 | D10135: Failed to check a node status. | Nodes from Ver.1.5 or lower may have been registered in the nodes registered in the repository. Check the version of each node. |

| Internal Server Error | Failed to create <File path>. | File creation failed. Check if there is any directory which does not exist in the displayed path, or any directory for which access rights of Tomcat user have not been assigned. | |

| Internal Server Error | E0030C | [Code:******] <Error message> | Error message of GridDB node. |

| See “GridDB Errorcode List” and check the countermeasure with the corresponding code. | |||

| Bad Request | E00300 | Container "Container name" already exists. | Container name is duplicated. Specify another container name to create a container. |

| Bad Request | E00303 | Container "Container name" not found. | Specified container does not exist. Right click the ContainerTree cluster, select refresh and search for the container again. |

| Bad Request | [Code:******] <Error message> | Error message of GridDB node. | |

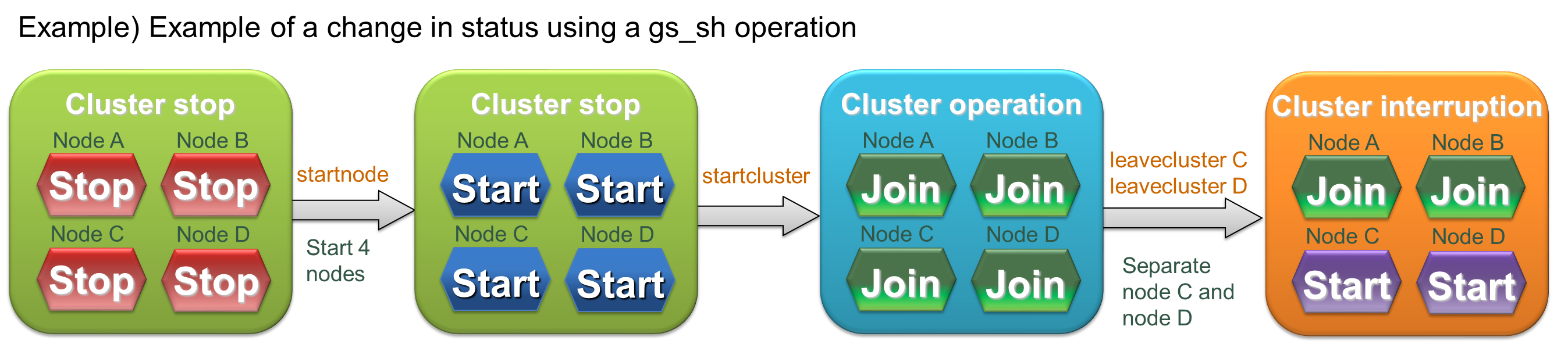

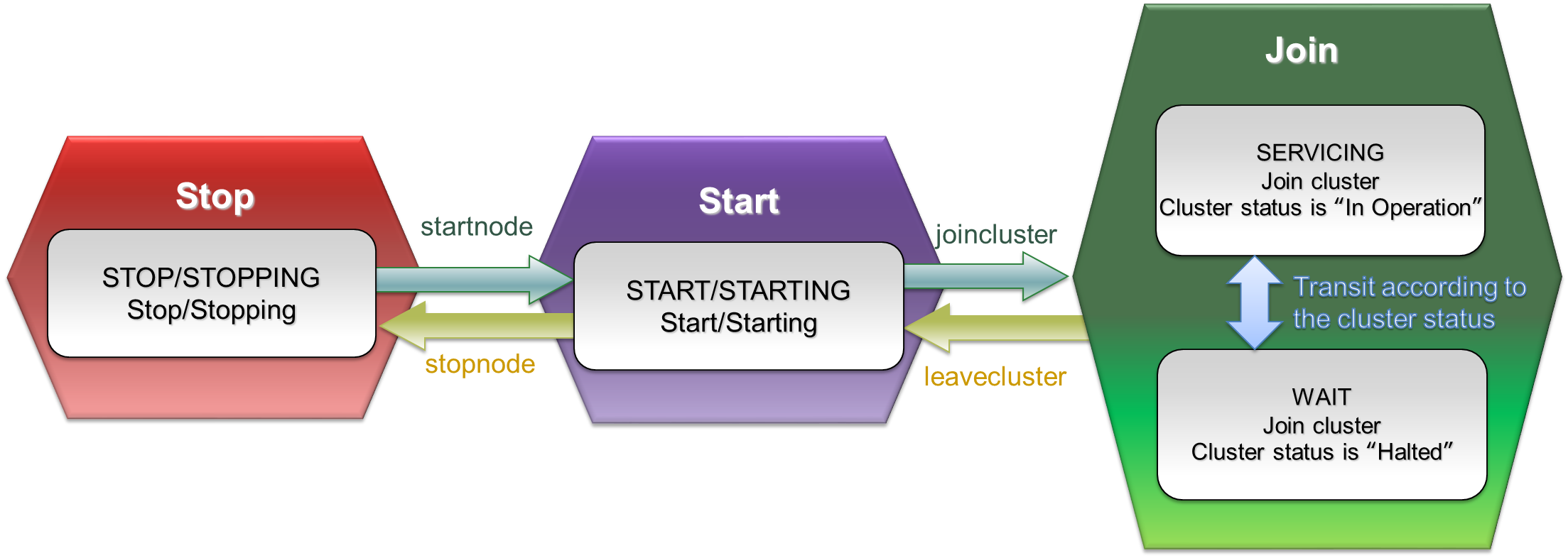

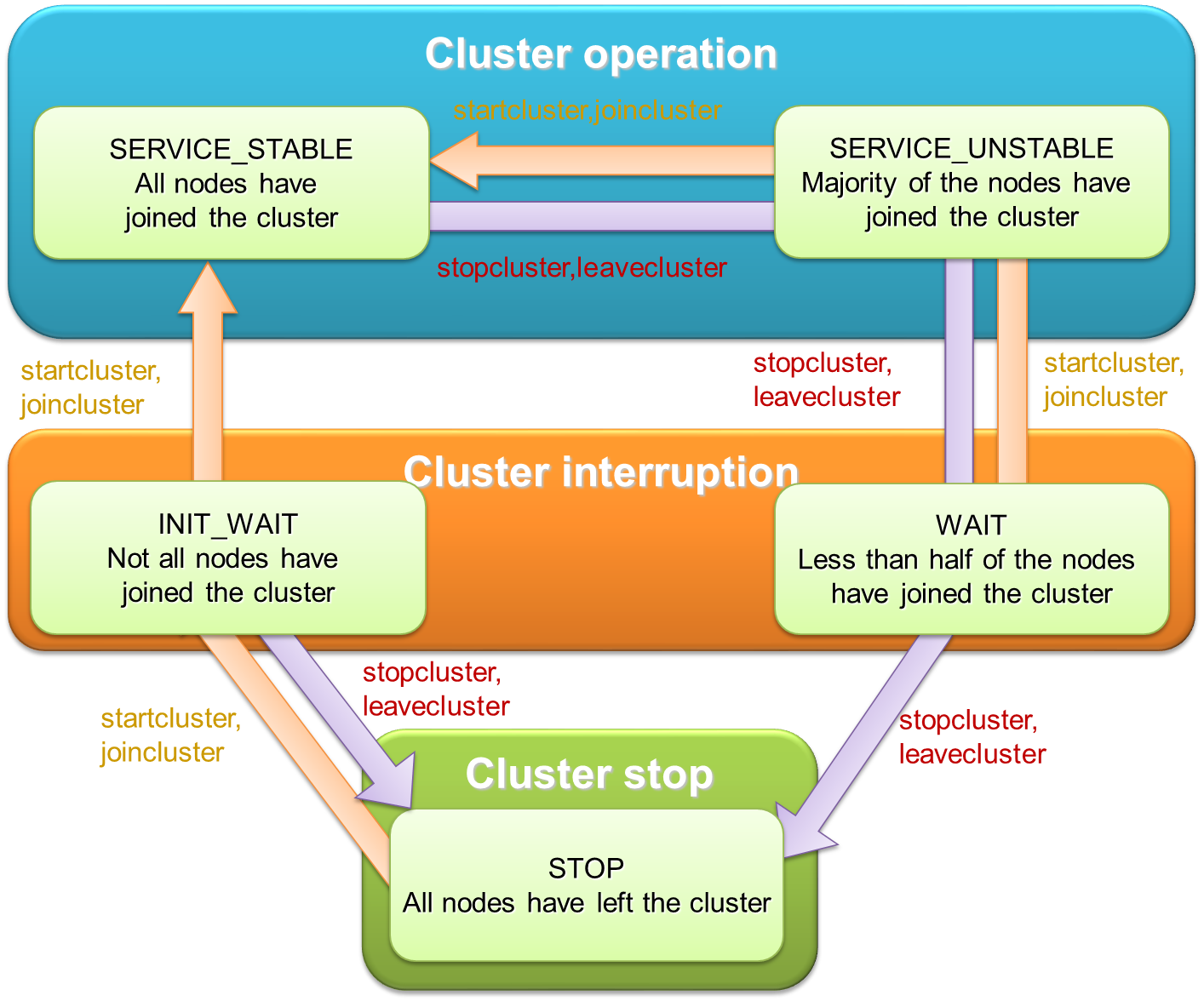

| See “GridDB Errorcode List” and check the countermeasure with the corresponding code. | |||