GridDB technical reference

Revision: 1818

Table of Contents

- 1 Introduction

- 2 What is GridDB?

- 3 Structure of GridDB

- 4 GridDB functions

- 4.1 Resource management

- 4.2 User management

- 4.3 Data management function

- 4.3.1 Container (table) data type

- 4.3.2 GEOMETRY-type (Spatial-type)

- 4.3.3 Container (table) ROWKEY

- 4.3.4 Container (table) index

- 4.3.5 Timeseries container (timeseries table)

- 4.3.6 Selection and interpolation of a timeseries container (timeseries table)

- 4.3.7 Affinity function

- 4.3.8 Table partitioning (GridDB AE/VE)

- 4.3.9 Container placement information

- 4.3.10 Block data compression

- 4.3.11 Deallocation of unused data blocks

- 4.4 Transaction processing

- 4.5 Trigger function

- 4.6 Failure process function

- 4.7 Data access

- 4.8 Operating function

- 5 Parameters

- 6 Terminology

- 7 System limiting values

1 Introduction

1.1 Aim & configuration of this manual

This manual explains the GridDB architecture and functions provided.

This manual is intended for administrators in charge of the operational management of GridDB, and for designers and developers who design and build systems using GridDB.

The manual is composed as follows.

- What is GridDB?

  - Describes the features and application examples of GridDB.

- Architecture of GridDB

  - Describes the data model and cluster operating structure in GridDB.

- Functions provided by GridDB

  - Describes the data management functions, functions specific to the data model and operating functions provided by GridDB.

- Parameters

  - Describes the parameters to control the operations in GridDB.

2 What is GridDB?

GridDB is a distributed NoSQL database to manage a group of data (known as a row) that is made up of a key and multiple values. Besides having a composition of an in-memory database that arranges all the data in the memory, it can also adopt a hybrid composition combining the use of a disk (including SSD as well) and a memory. By employing a hybrid composition, it can also be used in small scale, small memory systems.

In addition to the 3 Vs (volume, variety, velocity) required in big data solutions, GridDB also assures data reliability and availability. Its autonomous node monitoring and load balancing functions also reduce the labor needed to operate a cluster.

2.1 Features of GridDB

2.1.1 Big data (volume)

As the scale of a system expands, the data volume handled increases and thus the system needs to be expanded so as to quickly process the big data.

System expansion can be broadly divided into 2 approaches - scale-up (vertical scalability) and scale-out (horizontal scalability).

-

What is scale-up?

This approach reinforces the system by adding memory to the operating machines, using SSDs for the disks, adding processors, and so on. Each individual processing time is shortened and the system processing speed increases, but because the system is not a cluster of multiple machines, the nodes generally have to be stopped once during a scale-up operation. When a failure occurs, failure recovery is also time-consuming.

-

What is scale-out?

This approach increases the number of nodes constituting a system to improve the processing capability. Generally, there is no need to completely stop service when a failure occurs and during maintenance as multiple nodes are linked and operating together. However, the application management time and effort increases as the number of nodes increases. This architecture is suitable for performing highly parallel processing.

GridDB supports both approaches: in addition to reinforcing the operating nodes with a scale-up approach, new nodes can be incorporated into an operating cluster to expand the system with a scale-out approach.

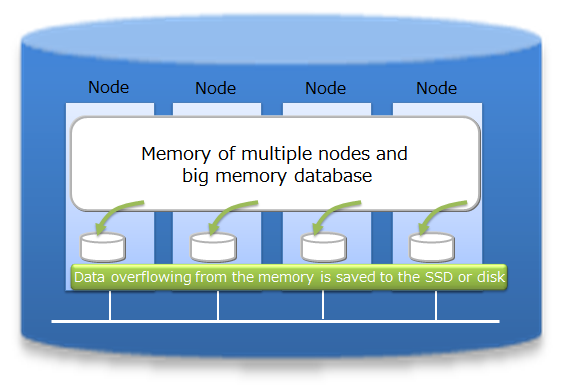

As an in-memory processing database, GridDB can handle a large volume of data with its scale-out model. In GridDB, data is distributed throughout the nodes inside a cluster that is composed of multiple nodes. Therefore, a large-scale memory database can be provided as the memories of multiple nodes can be used as a single, large memory space.

In addition, since data management of a hybrid composition that combines the use of disk with memory is also possible, data exceeding the memory size can be retained and accessed even when operating with a standalone node. A large capacity that is not limited by the memory size can also be realized.

Combined use of in-memory/disk

System expansion can be carried out online with a scale-out approach. As a result, a system in operation can keep up with the volume of data that grows along with the system, without having to be stopped.

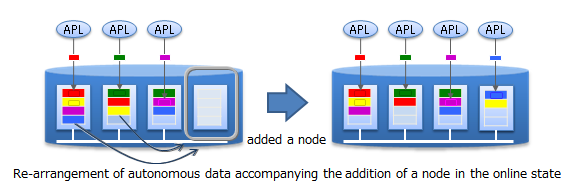

In the scale-out approach, data is arranged in an appropriate manner according to the load of the system in the nodes built into the system. As GridDB will optimize the load balance, the application administrator does not need to worry about the data arrangement. Operation is also easy because a structure to automate such operations has been built into the system.

Scale-out model

2.1.2 Various data types (variety)

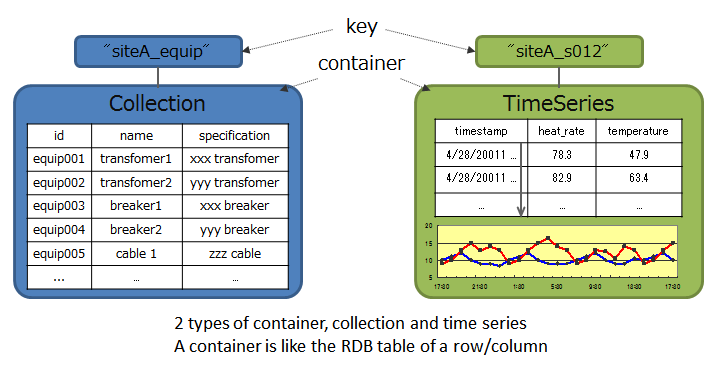

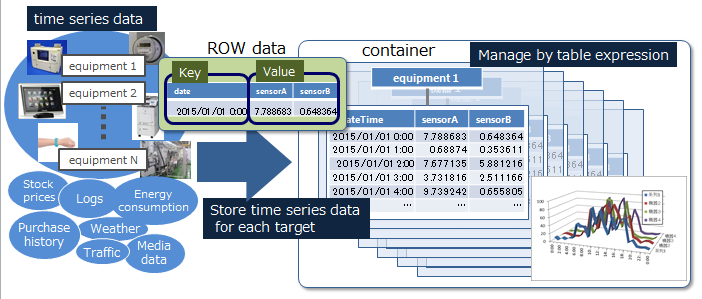

GridDB adopts a Key-Container data model that extends the Key-Value model. Data is stored in a structure equivalent to an RDB table, known as a container. (A container can be thought of as an RDB table for easier understanding.)

When accessing data in GridDB, the Key-Value structure of the model allows the data to be narrowed down with a key, so that processing can be carried out at high speed. The design needs to prepare a container, identified by a key, for each entity under management.

Data model

A TimeSeries container is suited to handling large volumes of time series data, that is, values generated by a sensor or the like and paired with their time of occurrence. Spatial data such as position information can also be registered in a container, and space-specific operations (spatial intersection) can be carried out on it. A variety of data can be handled, as the system also supports non-standard data such as array data and BLOBs.

A unique compression function and a function to release data that has expired and so on are provided in a TimeSeries container, making it suitable for the management of data which is generated in large volumes.

2.1.3 High-speed processing (velocity)

A variety of architectural features is embedded in GridDB to achieve high-speed processing.

2.1.3.1 Processing is carried out in the memory space as much as possible

When the system operates fully in memory, with all the data arranged in memory, there is little need to be concerned about disk access overhead. However, in order to process a volume of data so large that it cannot be held in memory, the data accessed by the application needs to be localized and access to the data arranged on disk needs to be reduced as much as possible.

In order to localize data access, GridDB provides a function to arrange related data in the same block as far as possible. Since data in a data block can be consolidated according to hints provided with the data, memory misses are reduced during data access, which increases the processing speed of data access. By setting hints for memory consolidation according to the access frequency and access pattern of the application, limited memory space can be used effectively for operation. (Affinity function)

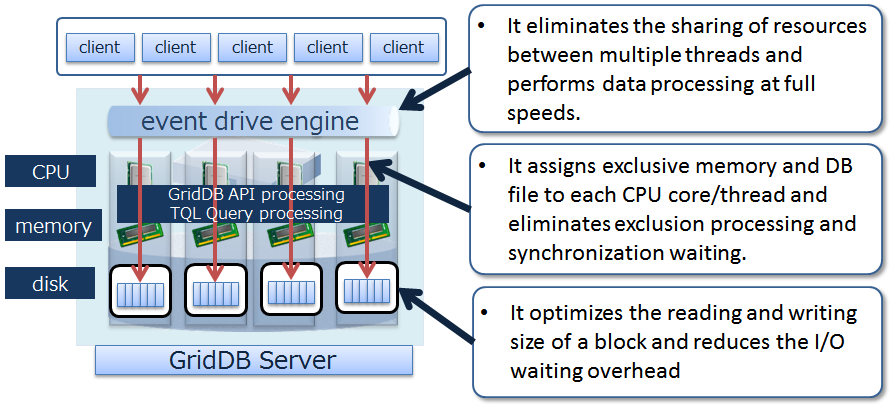

2.1.3.2 Reduces the overhead

Events that delay database execution, such as lock or latch events during parallel access to the database, are reduced as much as possible: dedicated memory and DB files are assigned to each CPU core and thread, which eliminates the time spent waiting for exclusion and synchronization processing.

Architecture

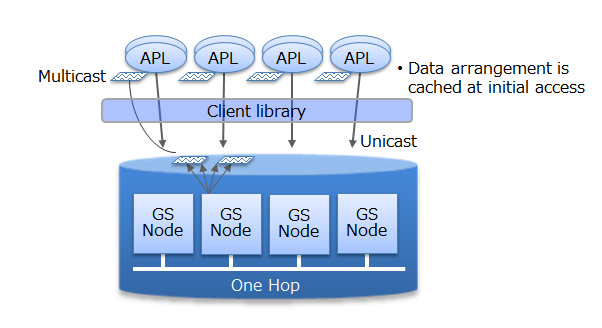

In addition, direct access between the client and a node is possible in GridDB because the client library caches the data arrangement the first time it accesses the database. The target data can then be accessed directly, without going through the master node that manages the operating status of the cluster and the data arrangement. This avoids concentrating access on the master node and reduces communication cost substantially.

Access from a client

2.1.3.3 Processing in parallel

High-speed processing is realized through parallel processing. The engine divides a request into units that can be processed in parallel and executes them using threads within a node and across nodes. A single large set of data can also be dispersed over multiple nodes (partitioning) so that it is processed in parallel between nodes.

2.1.4 Reliability/availability

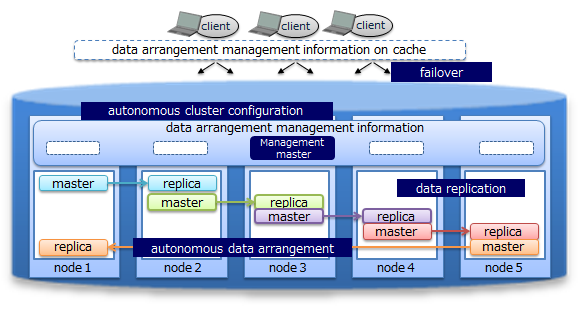

Duplicate data (hereinafter referred to as replicas) are created in the cluster, and processing can be continued by using these replicas even when a failure occurs in any of the nodes constituting the cluster. Special operating procedures are not necessary, as the system also automatically re-arranges the data after a node failure occurs (autonomous data arrangement). Data arranged in a failed node is restored from a replica, and the data is then re-arranged automatically so that the set number of replicas is reached.

Duplex, triplex or multiplex replica can be set according to the availability requirements.

Each node performs persistence of the data update information using a disk, and all registered and updated data up to that point in time can be restored without being lost even if a failure occurs in the entire cluster system.

In addition, since the client also possesses cache information on the data arrangement and management, upon detecting a node failure, it will automatically perform a failover and data access can be continued using a replica.
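
The client-side failover described above can be sketched as follows. This is a minimal illustration, not GridDB's client library: the `placement` cache, the `fetch` callback and the `NodeDown` exception are hypothetical names introduced here, and the real client protocol is not described in this manual.

```python
from typing import Callable, Dict, List


class NodeDown(Exception):
    """Raised by the hypothetical transport when a node does not answer."""


def read_with_failover(
    partition_id: int,
    placement: Dict[int, List[str]],
    fetch: Callable[[str, int], str],
) -> str:
    """Try each node holding the partition in cached order (owner first)."""
    last_error = None
    for node in placement[partition_id]:
        try:
            return fetch(node, partition_id)
        except NodeDown as err:
            # Node failure detected: fail over to the next replica.
            last_error = err
    raise RuntimeError(
        f"no reachable replica for partition {partition_id}"
    ) from last_error


# Hypothetical cached placement: partition 0 is held by node1 and node2.
cached_placement = {0: ["node1", "node2"]}
failed_nodes = {"node1"}


def fake_fetch(node: str, partition_id: int) -> str:
    """Stand-in for a network call; fails for nodes marked as down."""
    if node in failed_nodes:
        raise NodeDown(node)
    return f"partition {partition_id} from {node}"


print(read_with_failover(0, cached_placement, fake_fetch))
```

Keeping the placement on the client is what lets the retry happen without asking the master node first.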

High availability

2.2 GridDB Editions

There are currently three editions of GridDB available.

- GridDB Standard Edition

- GridDB Advanced Edition

- GridDB Vector Edition

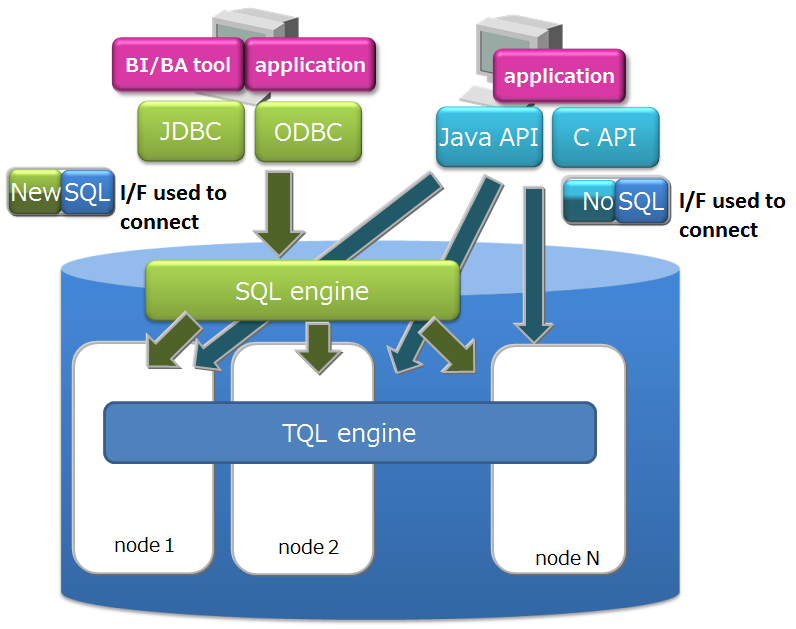

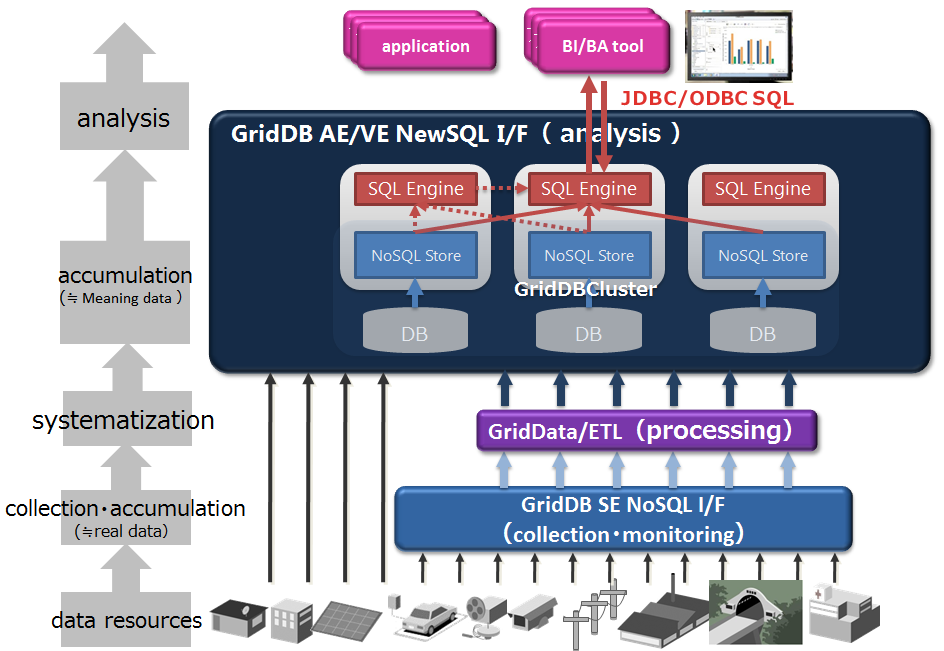

GridDB Advanced Edition (hereinafter referred to as AE) adds an SQL processing engine on top of the high performance offered by GridDB Standard Edition (hereinafter referred to as SE). Containers can be treated and operated as tables.

GridDB Vector Edition (hereinafter referred to as VE) is a product that adds to GridDB AE high-speed matching of the high-dimensional vector data used in image recognition, machine learning for big data analysis, and large-scale media analysis.

The features common to all editions are described in Features of GridDB.

GridDB AE has 2 additional features as shown below.

- NewSQL interfaces

  - In addition to being SQL 92 compliant, GridDB AE supports the ODBC (C language interface) and JDBC (Java interface) application interfaces.

  - By using ODBC/JDBC, the database can be accessed directly from BI (Business Intelligence) and ETL (Extract, Transform, Load) tools.

- Table partitioning function

  - A partitioning function for high-speed access to a huge table.

  - Since data is divided into multiple parts and distributed to multiple nodes, data search and extraction from the table can be parallelized, realizing faster data access.

GridDB VE has 2 additional features as shown below:

- Ultra-high-speed vector processing

  - Realizes ultra-high-speed vector processing by expressing data as high-dimensional vectors and pre-indexing groups of similar vectors.

- Pattern analysis with extended SQL

  - Arbitrary pattern analysis can be performed with extended SQL that incorporates a pattern recognition function.

GridDB editions

The features of each interface are as follows.

- NoSQL interface (NoSQL I/F)

  - The client APIs (C, Java) of the NoSQL I/F focus on high-speed batch processing of big data.

  - It is used for data collection, high-speed access to key-value data, simple aggregate calculation using TQL, etc.

- NewSQL interface (NewSQL I/F) (GridDB AE/VE only)

  - ODBC/JDBC of the NewSQL I/F focus on cooperation with existing applications and on development productivity using SQL.

  - It is used to classify and analyze collected data using BI tools, etc.

When using GridDB AE/VE, both NoSQL I/F and NewSQL I/F can be used depending on the use case.

Use case

Since GridDB SE and AE share the same data structure, GridDB SE data can be used in GridDB AE.

The database and the NoSQL/NewSQL interfaces of GridDB are compatible within the same major version (that is, across minor version upgrades). The version notation is as follows.

- The version of GridDB is represented as "X.Y[.Z]", and each symbol represents the following.

  - Major version (X): changed for significant enhancements.

  - Minor version (Y): changed when functions are expanded or added.

  - Revision (Z): changed for bug fixes and the like.
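
As an illustration of the "X.Y[.Z]" notation, the following sketch parses a version string and compares major versions. The function names and the sample version numbers are hypothetical, and treating "same major version" as the whole compatibility test is a simplifying assumption based on the sentence above, not a documented rule.

```python
import re
from typing import NamedTuple, Optional


class GridDBVersion(NamedTuple):
    major: int
    minor: int
    revision: Optional[int]  # the optional ".Z" part; None when omitted


_VERSION_RE = re.compile(r"^(\d+)\.(\d+)(?:\.(\d+))?$")


def parse_version(text: str) -> GridDBVersion:
    """Parse a version string written as X.Y[.Z]."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not in X.Y[.Z] form: {text!r}")
    major, minor, revision = match.groups()
    return GridDBVersion(
        int(major),
        int(minor),
        int(revision) if revision is not None else None,
    )


def same_major(a: str, b: str) -> bool:
    """Assumption: compatibility is judged by the major version alone."""
    return parse_version(a).major == parse_version(b).major


print(parse_version("4.0.1"))
print(same_major("4.0", "4.1.2"))
```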

When replacing GridDB SE with GridDB AE/VE or when using both NoSQL I/F and NewSQL I/F in GridDB AE/VE, it is necessary to understand the following specification in advance. It is unnecessary when using only GridDB SE.

- Containers created with NoSQL I/F can be accessed as tables in NewSQL I/F, and tables created with NewSQL I/F can be accessed as containers in NoSQL I/F.

- The names of tables and containers must be unique.

- In GridDB SE/AE/VE, the data structure of the database and user management information is common.

3 Structure of GridDB

This chapter describes the operating structure and data model of a GridDB cluster.

3.1 Composition of a cluster

GridDB is operated by clusters which are composed of multiple nodes. To access the database from an application system, the nodes have to be started up and the cluster has to be constituted (cluster service is executed).

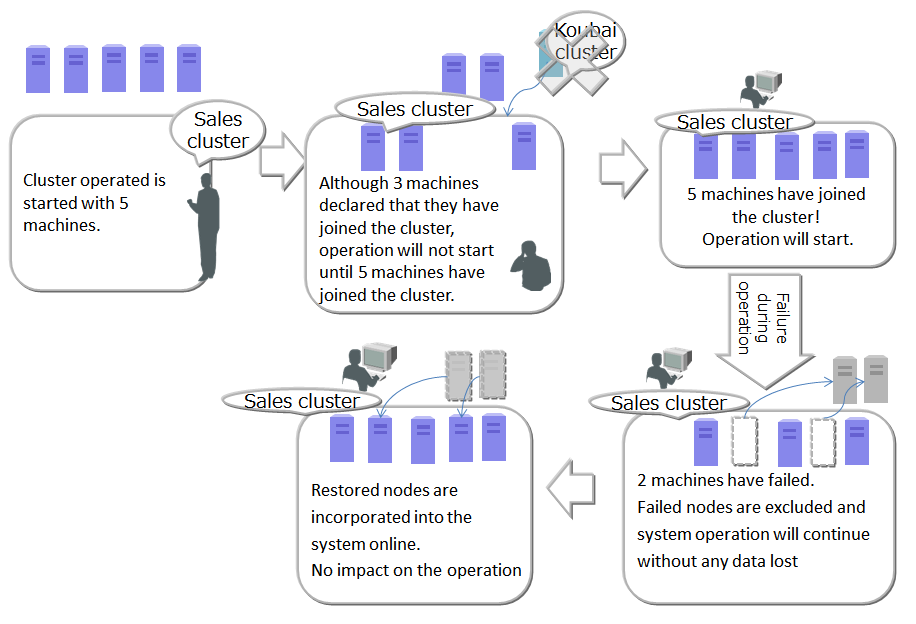

A cluster is formed and cluster service is started when a number of nodes specified by the user joins the cluster. Cluster service will not be started and access from the application will not be possible until all nodes constituting a cluster have joined the cluster.

A cluster needs to be composed even when operating with 1 node only. In this case, the number of nodes constituting a cluster is 1. A composition that operates a single node is known as a single composition.

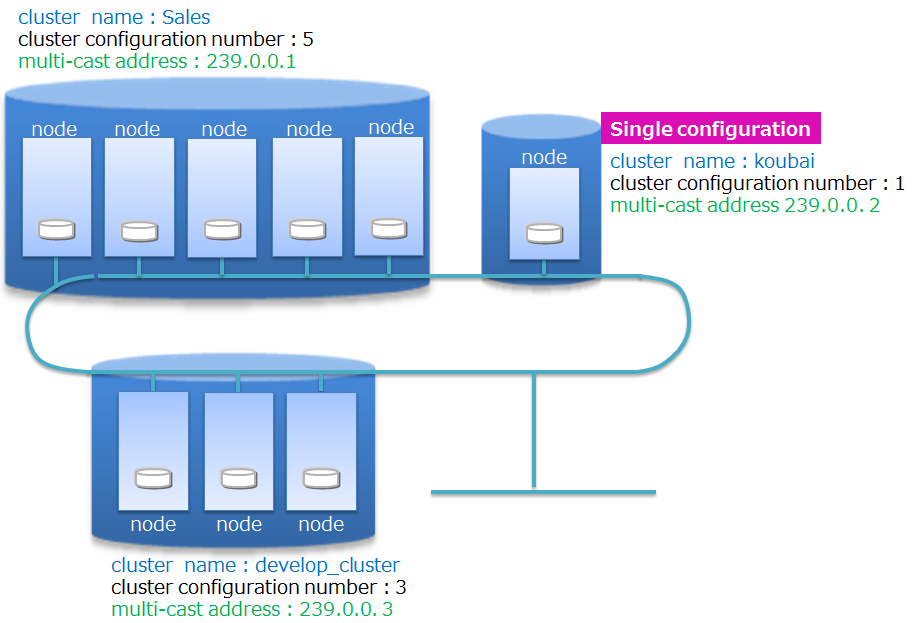

Cluster name and number of nodes constituting a cluster

Cluster names are used to distinguish multiple clusters, so that the intended nodes form the correct cluster when multiple GridDB nodes exist on a network. Multiple GridDB clusters can be composed in the same network. A cluster is composed of nodes that share the same cluster name, number of nodes constituting a cluster, and multicast address setting. The cluster name is set in the cluster definition file, which is a definition file saved on each node constituting the cluster, and the same parameters also need to be specified when the cluster is composed.

The method of constituting a cluster using multicast is called multicast method. See Cluster configuration methods for details.

The operation of a cluster composition is shown below.

Operation of a cluster composition

To start up a node and compose a cluster, use the gs_startnode and gs_joincluster operation commands, or gs_sh. In addition, there is a service control function that starts up the nodes together with the OS and composes the cluster.

To compose a cluster, the number of nodes joining a cluster (number of nodes constituting a cluster) and the cluster name must be the same for all the nodes joining the cluster.
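
The condition for composing a cluster can be sketched as a simple check over the nodes that ask to join. `JoinRequest` and `can_start_service` are hypothetical names used only for illustration; the actual handshake between GridDB nodes is not described in this manual.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class JoinRequest:
    node: str
    cluster_name: str
    designated_count: int  # number of nodes constituting a cluster


def can_start_service(requests: Iterable[JoinRequest]) -> bool:
    """True when all joining nodes agree on the cluster name and node
    count, and the agreed number of distinct nodes has joined."""
    requests = list(requests)
    if not requests:
        return False
    first = requests[0]
    consistent = all(
        r.cluster_name == first.cluster_name
        and r.designated_count == first.designated_count
        for r in requests
    )
    distinct_nodes = {r.node for r in requests}
    return consistent and len(distinct_nodes) == first.designated_count


joined = [JoinRequest(f"node{i}", "test150", 3) for i in (1, 2, 3)]
print(can_start_service(joined))
```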

Even if a node fails and is detached from the cluster after cluster operation has started, cluster service continues as long as a majority of the nodes constituting the cluster remain in the cluster.

Because cluster operation continues as long as a majority of the nodes are in operation, a node can be detached online for maintenance or other work during cluster operation and incorporated again after the work ends. Furthermore, nodes can be added online to reinforce the system.

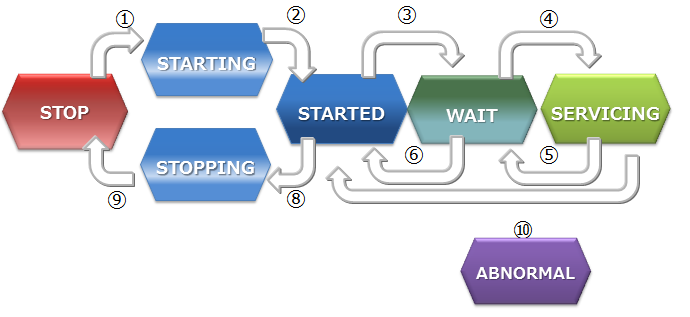

3.1.1 Status of node

GridDB has 2 types of status, nodeStatus and clusterStatus, which can be checked with the gs_stat command. The status of a node is determined by these 2 statuses.

nodeStatus indicates the operating status of the node while clusterStatus indicates the role of each node in the constituted cluster. The status of the entire cluster is determined by the status of these multiple nodes belonging to the cluster.

-

Transition in the node status

The node status may be one of the following statuses shown in the diagram below.

Node status

- [STOP]: State in which the GridDB server has not been started in the node.

- [STARTING]: State in which the GridDB server is starting in the node. Depending on the previous operating state, start-up processes such as recovery processing of the database are carried out. The only possible access from a client is checking the status of the system with a gs_stat command or gs_sh command. Access from the application is not possible.

- [STARTED]: State in which the GridDB server has been started in the node. However, access from the application is still not possible because the node has not joined a cluster. To compose the cluster, instruct the node to join it with the gs_joincluster command or the gs_sh cluster operating command.

- [WAIT]: State in which the system is waiting for the cluster composition. Nodes have been informed to join a cluster but the number of nodes constituting a cluster is insufficient, so the system is waiting for the number of nodes constituting a cluster to be reached. It also indicates the node status when the number of nodes constituting a cluster drops below the majority and the cluster service is stopped.

- [SERVICING]: State in which a cluster has been constituted and access from the application is possible. However, access may be delayed while partitions are being synchronized within the cluster, for example after a node is restarted following a failure or a stop.

- [STOPPING]: Intermediate state in which a node has been instructed to stop but has not stopped yet.

- [ABNORMAL]: State in which the node has detected an error, either in the SERVICING state or in the middle of a state transition. A node in the ABNORMAL state is automatically detached from the cluster. After the operating information of the system has been obtained, the node needs to be stopped by force and then restarted. Recovery processing is carried out automatically on restart.

-

Description of state transition

The events that trigger a change in the status of a node are described below.

| State transition | State transition event | Description |
|---|---|---|
| 1 | Command execution | Node start-up using gs_startnode command, gs_sh, service start-up |
| 2 | System | Automatic transition at the end of recovery processing or loading of database files |
| 3 | Command execution | Cluster participation using gs_joincluster/gs_appendcluster command, gs_sh, service start-up |
| 4 | System | State changes when the required number of component nodes join a cluster |
| 5 | System | When other nodes that make up a cluster are detached from the service due to a failure, etc., and the number of nodes constituting a cluster drops below half of the value set |
| 6 | Command execution | Detaches a node from a cluster using a gs_leavecluster command or gs_sh |
| 7 | Command execution | Detaches a node from a cluster using a gs_leavecluster/gs_stopcluster command or gs_sh |
| 8 | Command execution | Stops a node using gs_stopnode command, gs_sh, service stop |
| 9 | System | Stops the server process once the final processing ends |
| 10 | System | Detached state due to a system failure. In this state, the node needs to be stopped by force once. |

-

Node status and nodeStatus, clusterStatus

By using the gs_stat command, the detailed operating information of a node can be checked as text in JSON format. The relationship between the node status and the JSON parameters nodeStatus and clusterStatus reported by gs_stat is shown below.

| Status | /cluster/nodeStatus | /cluster/clusterStatus |
|---|---|---|
| STARTING | INACTIVE | SUB_CLUSTER |
| STARTED | INACTIVE | SUB_CLUSTER |
| WAIT | ACTIVATING or DEACTIVATING | SUB_CLUSTER |
| SERVICING | ACTIVE | MASTER or FOLLOWER |
| STOPPING | NORMAL_SHUTDOWN | SUB_CLUSTER |
| ABNORMAL | ABNORMAL | SUB_CLUSTER |

The status of the node can be checked with gs_sh or gs_admin.
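
The mapping above can be expressed as a lookup from the two gs_stat fields back to the node status. This is only a sketch of the listed combinations; `STATUS_TABLE` and `matching_states` are hypothetical names introduced here.

```python
from typing import List

# node status -> (allowed nodeStatus values, allowed clusterStatus values),
# copied from the combinations listed above.
STATUS_TABLE = {
    "STARTING": ({"INACTIVE"}, {"SUB_CLUSTER"}),
    "STARTED": ({"INACTIVE"}, {"SUB_CLUSTER"}),
    "WAIT": ({"ACTIVATING", "DEACTIVATING"}, {"SUB_CLUSTER"}),
    "SERVICING": ({"ACTIVE"}, {"MASTER", "FOLLOWER"}),
    "STOPPING": ({"NORMAL_SHUTDOWN"}, {"SUB_CLUSTER"}),
    "ABNORMAL": ({"ABNORMAL"}, {"SUB_CLUSTER"}),
}


def matching_states(node_status: str, cluster_status: str) -> List[str]:
    """Return every node status consistent with the two gs_stat values."""
    return [
        state
        for state, (node_values, cluster_values) in STATUS_TABLE.items()
        if node_status in node_values and cluster_status in cluster_values
    ]


print(matching_states("ACTIVE", "FOLLOWER"))
print(matching_states("INACTIVE", "SUB_CLUSTER"))
```

Note that STARTING and STARTED share the same pair of values, so these two fields alone cannot tell them apart.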

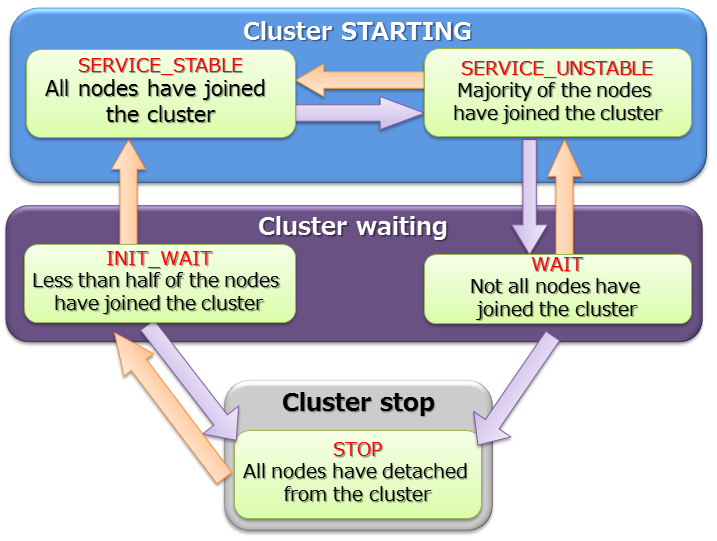

3.1.2 Status of cluster

The cluster operating status is determined by the state of each node, and the status may be one of 3 states - IN OPERATION/INTERRUPTED/STOPPED.

Cluster service starts when all the nodes that make up a cluster (number of nodes constituting a cluster) specified by the user during initial system construction have joined the cluster.

During initial cluster construction, the state in which the cluster is waiting to be composed because not all the nodes that make up the cluster have been incorporated yet is known as [INIT_WAIT]. When the number of nodes constituting a cluster has joined the cluster, the state automatically changes to the operating state.

There are 2 operating states. These are [STABLE] and [UNSTABLE].

-

[STABLE] state

- State in which a cluster has been formed by the number of nodes specified in the number of nodes constituting a cluster and service can be provided in a stable manner.

-

[UNSTABLE] state

- State in which the number of nodes constituting a cluster has not been fulfilled.

- Cluster service will continue for as long as a majority of the number of nodes constituting a cluster is in operation.

A cluster can operate in the [UNSTABLE] state as long as a majority of the nodes are in operation, even if some nodes are detached from the cluster for maintenance or other reasons.

Cluster service is interrupted automatically in order to prevent a split brain from occurring when the number of nodes making up a cluster falls below the majority of the number of nodes constituting a cluster. The state in which cluster service has been interrupted is known as [WAIT] state.
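
The relationship between the node counts and the cluster state can be sketched as below. The function name is hypothetical, [INIT_WAIT] during initial construction is not modelled, and "majority" is interpreted here as strictly more than half of the number of nodes constituting a cluster, which is an assumption about the boundary case.

```python
def cluster_state(active: int, designated: int) -> str:
    """Classify a running cluster from its active and designated node counts."""
    if designated < 1 or not 0 <= active <= designated:
        raise ValueError("expected 0 <= active <= designated and designated >= 1")
    if active == designated:
        return "STABLE"      # all designated nodes have joined
    if 2 * active > designated:
        return "UNSTABLE"    # a majority remains, service continues
    return "WAIT"            # majority lost, service is interrupted


for active in (4, 3, 2):
    print(active, cluster_state(active, designated=4))
```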

-

What is split brain?

A split brain is a situation in which a tightly-coupled system, which interconnects multiple nodes so that they work like a single server, is divided by a hardware or network failure, and the resulting cluster systems perform the same processing and provide service simultaneously. If operation continues in this state, the data saved as replicas in each of the clusters is treated as master data, and data consistency is lost.

To restart cluster service from the [WAIT] state, add new nodes to the cluster or restore the nodes with errors. The state becomes [STABLE] once the number of nodes constituting a cluster has joined the cluster again.

When the number of nodes in a cluster falls below half due to a failure in a node constituting the cluster and cluster operation is interrupted, add new nodes to the cluster or restore the nodes with errors. Cluster service is restarted automatically once a majority of the nodes has joined the cluster.

Cluster status

A STABLE state is a state in which the value of the json parameter shown in gs_stat, /cluster/activeCount, is equal to the value of /cluster/designatedCount.

    % gs_stat -u admin/admin -s
    {
        "checkpoint": {
            "archiveLog": 0,
            :
            :
        },
        "cluster": {
            "activeCount":4,                    // Nodes in operation within the cluster
            "clusterName": "test-cluster",
            "clusterStatus": "MASTER",
            "designatedCount": 4,               // Number of nodes constituting a cluster
            "loadBalancer": "ACTIVE",
            "master": {
                "address": "192.168.0.1",
                "port": 10040
            },
            "nodeList": [                       // Node list constituting a cluster
                {
                    "address": "192.168.0.1",
                    "port": 10040
                },
                {
                    "address": "192.168.0.2",
                    "port": 10040
                },
                {
                    "address": "192.168.0.3",
                    "port": 10040
                },
                {
                    "address": "192.168.0.4",
                    "port": 10040
                },
                :
            ],
            :
            :
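
The STABLE check can be sketched by reading the two counters from gs_stat output. The sample text below is a trimmed, valid-JSON stand-in for the output above (the annotated listing contains comments and elisions, so it cannot be parsed as is), and `is_stable` is a hypothetical helper name.

```python
import json

# Trimmed stand-in for gs_stat output; only the fields used here are kept.
SAMPLE_GS_STAT = """
{
  "cluster": {
    "activeCount": 4,
    "clusterName": "test-cluster",
    "clusterStatus": "MASTER",
    "designatedCount": 4
  }
}
"""


def is_stable(gs_stat_text: str) -> bool:
    """True when /cluster/activeCount equals /cluster/designatedCount."""
    cluster = json.loads(gs_stat_text)["cluster"]
    return cluster["activeCount"] == cluster["designatedCount"]


print(is_stable(SAMPLE_GS_STAT))
```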

The status of the cluster can be checked with gs_sh or gs_admin. An example on checking the cluster status with gs_sh is shown below.

    % gs_sh
    gs> setuser admin admin gsadm                  // Setting connecting user
    gs> setnode node1 192.168.0.1 10040            // Definition of a node constituting the cluster
    gs> setnode node2 192.168.0.2 10040
    gs> setnode node3 192.168.0.3 10040
    gs> setnode node4 192.168.0.4 10040
    gs> setcluster cluster1 test150 239.0.0.5 31999 $node1 $node2 $node3 $node4    // Definition of cluster
    gs> startnode $cluster1                        // Start-up of all nodes making up the cluster
    gs> startcluster $cluster1                     // Instructing cluster composition
    Waiting for cluster to start.
    Cluster has started.
    gs> configcluster $cluster1                    // Checking status of cluster
    Name                  : cluster1
    ClusterName           : test150
    Designated Node Count : 4
    Active Node Count     : 4
    ClusterStatus         : SERVICE_STABLE         // Stable state
    Nodes:
      Name    Role Host:Port              Status
    -------------------------------------------------
      node1     M  192.168.0.1:10040    SERVICING
      node2     F  192.168.0.2:10040    SERVICING
      node3     F  192.168.0.3:10040    SERVICING
      node4     F  192.168.0.4:10040    SERVICING
    gs> leavecluster $node2
    Waiting for node to separate from cluster
    Node has separated from cluster.
    gs> configcluster $cluster1
    Name                  : cluster1
    ClusterName           : test150
    Designated Node Count : 4
    Active Node Count     : 3
    ClusterStatus         : SERVICE_UNSTABLE       // Unstable state
    Nodes:
      Name    Role Host:Port              Status
    -------------------------------------------------
      node1     M  192.168.0.1:10040    SERVICING  // Master node
      node2     -  192.168.0.2:10040    STARTED
      node3     F  192.168.0.3:10040    SERVICING  // Follower node
      node4     F  192.168.0.4:10040    SERVICING  // Follower node

3.2 Cluster configuration methods

A cluster consists of one or more nodes connected in a network. Each node maintains a list of the other nodes' addresses for communication purposes.

GridDB supports 3 cluster configuration methods for configuring the address list. Different cluster configuration methods can be used depending on the environment or use case. The connection method of clients and operational tools may also differ depending on the configuration method.

Besides the recommended multicast method, the fixed list method and the provider method are also available.

The fixed list or provider method can be used in environments where multicast is not supported.

-

Multicast method

- This method performs node discovery in multi-cast to automatically configure the address list.

-

Fixed list method

- A fixed address list is saved in the cluster definition file. Each GridDB server reads the list once when the node is started.

-

Provider method

- The address list is acquired from a provider, which has been configured either as a web service or a static content.

The methods compare as follows.

| Point | Multicast method (recommended) | Fixed list method | Provider method |
|---|---|---|---|
| Setting | Multicast address and port | List of address and port of each node | URL of the address provider |
| Use case | When multicast is supported | When multicast is not supported. System scale estimation can be performed accurately | When multicast is not supported. System scale estimation cannot be performed |
| Cluster operation | Perform automatic discovery of nodes at a specified time interval | Set a common address list for all nodes. Read that list only once at node startup | Obtain the address list at a specified time interval from address provider |
| Pros. | No need to restart the cluster when adding nodes | No mistake of configuration by consistency check of the list | No need to restart the cluster when adding nodes |
| Cons. | Multicast is required for client connection | Need to restart cluster when adding nodes. Need to update the connection setting of the client | Need to ensure the availability of the address provider |
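
The use-case row of the comparison can be read as a small decision rule, sketched below. The function and its two boolean inputs are hypothetical; a real choice would also weigh the pros and cons listed above.

```python
def choose_configuration_method(
    multicast_supported: bool, scale_known_accurately: bool
) -> str:
    """Pick a cluster configuration method from the use-case row above."""
    if multicast_supported:
        return "multicast"       # the recommended method
    if scale_known_accurately:
        return "fixed list"      # the node list is fixed in the definition file
    return "provider"            # the node list is fetched from an address provider


print(choose_configuration_method(False, True))
```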

3.3 Data model

GridDB adopts a unique Key-Container data model that resembles Key-Value. It has the following features.

- The container, a concept resembling an RDB table, has been introduced for grouping Key-Value data.

- A schema to define the data type for the container can be set. An index can be set in a column.

- Transactions can be carried out on a row basis within the container. In addition, ACID is guaranteed on a container basis.


Data model

GridDB manages data on a block, container, table, row, partition, and partition group basis.

-

Block

A block is a data unit for data persistence processing to disk (hereinafter referred to as a checkpoint) and is the smallest physical data management unit in GridDB.

Data from multiple containers is arranged in a block. Before the initial startup of GridDB, a block size of either 64 KB or 1 MB can be selected and set in the definition file (cluster definition file). Specify 64 KB if the installed memory of the system is low, or if the frequency of data increase is low.

As a database file is created during initial startup of the system, the block size cannot be changed after initial startup of GridDB.

-

Container (Table)

A container is a data structure that serves as an interface with the user. A container consists of multiple blocks.

It is called a container when operating with NoSQL I/F, and a table when operating with NewSQL I/F. There are 2 types of container: collection (table) and timeseries container (timeseries table).

Before an application registers data, a container (table) must be created. Data is registered in a container (table).

-

Row

A row is a single record of data registered in a container or table. Multiple rows can be registered in a container or table, but they are not necessarily arranged in the same block. Depending on the registration and update timing, data is arranged in suitable blocks within partitions.

Normally, there are columns with multiple data types in a row.

-

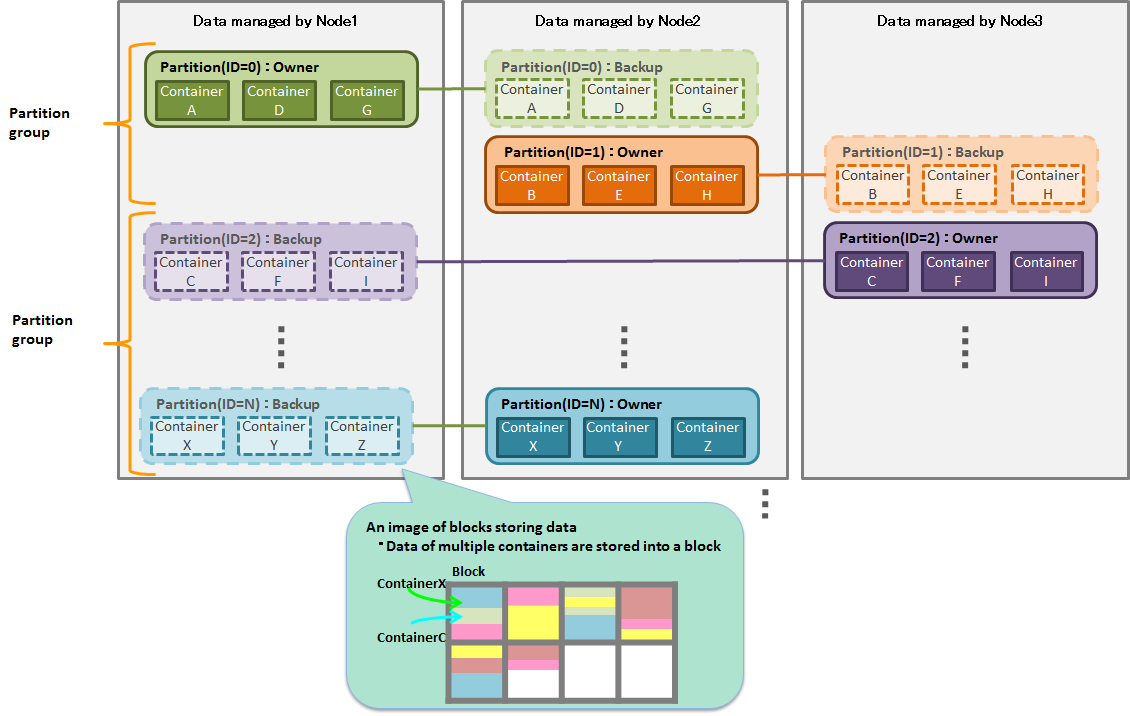

Partition

A partition is a data management unit that includes 1 or more containers or tables.

A partition is the data arrangement unit within a cluster. It is used to manage the movement of data that adjusts the load balance between nodes, and the multiplexing of data (replicas) in case of a failure. Data replicas are arranged on the nodes composing a cluster on a partition basis.

A node that can update the containers inside a partition is known as the owner node, and 1 owner node is allocated to each partition. A node that maintains a replica but is not the owner node is a backup node. Depending on the number of replicas set, master data and multiple sets of backup data exist for a partition.

The relationship between a container and a partition is persistent and the partition which has a specific container is not changed. The relationship between a partition and a node is temporary and the autonomous data placement may cause partition migration to another node.

-

Partition group

A group of multiple partitions is known as a partition group.

Data maintained by a partition group is saved on disk as a physical database file. The number of partition groups created depends on the degree of parallelism of the database processing threads executed by the node.
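
The two relationships described for partitions, a persistent container-to-partition mapping and a temporary partition-to-node mapping, can be sketched as follows. This is an illustration under stated assumptions: the CRC32-based mapping, the partition count of 8, and the rule that the first backup is promoted when the owner fails are all hypothetical; the manual does not state how GridDB computes either mapping.

```python
import zlib
from dataclasses import dataclass, field
from typing import List

PARTITION_COUNT = 8  # hypothetical value for the sketch


def partition_of(container_name: str) -> int:
    """Persistent mapping: depends only on the container name (assumed CRC32)."""
    return zlib.crc32(container_name.encode("utf-8")) % PARTITION_COUNT


@dataclass
class PartitionPlacement:
    """Temporary mapping: which nodes currently hold one partition."""
    owner: str
    backups: List[str] = field(default_factory=list)

    def node_failed(self, node: str) -> None:
        if node == self.owner:
            if not self.backups:
                raise RuntimeError("no backup left to promote")
            # Assumed rule: the first backup becomes the new owner.
            self.owner = self.backups.pop(0)
        elif node in self.backups:
            self.backups.remove(node)


placement = PartitionPlacement(owner="node1", backups=["node2", "node3"])
before = partition_of("sensor_001")
placement.node_failed("node1")
print(placement.owner, partition_of("sensor_001") == before)
```

The container stays in the same partition across the failure; only the node serving that partition changes.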

Data management unit

4 GridDB functions

This chapter describes the data management functions, functions specific to the data model, operating functions and application development interfaces of GridDB.

4.1 Resource management

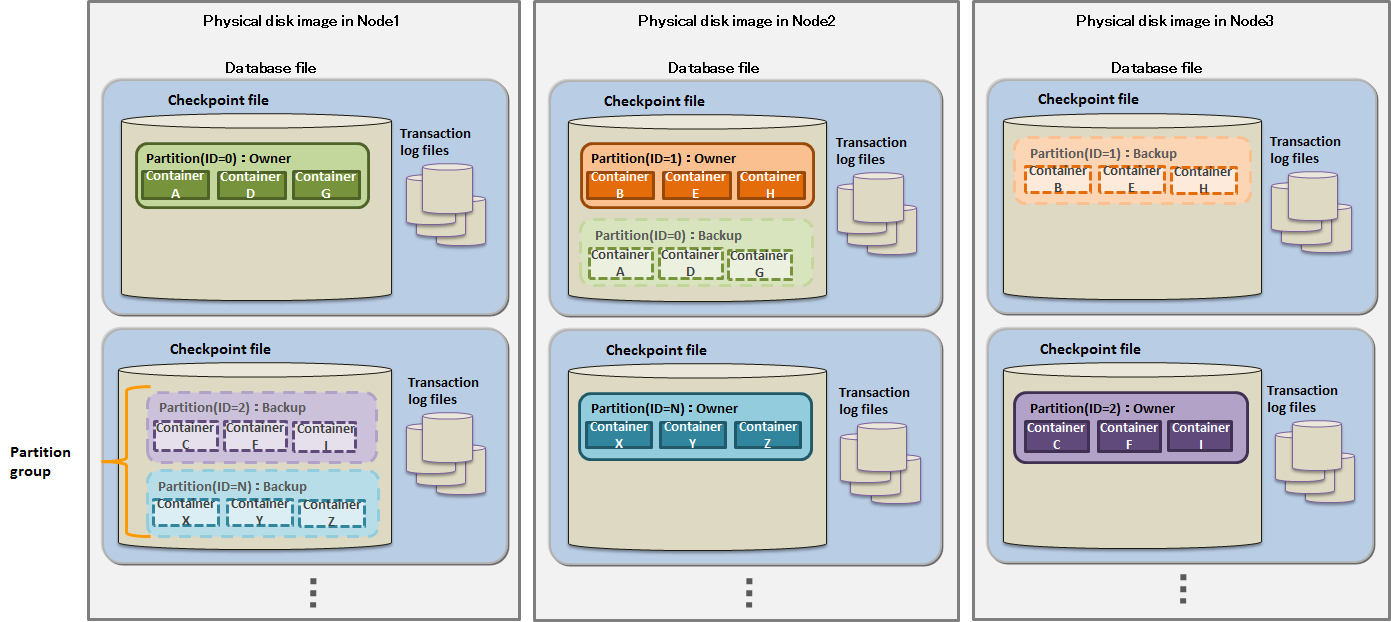

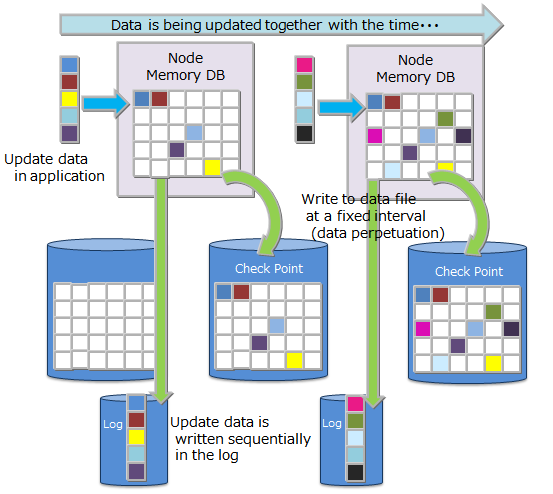

Besides the database residing in memory, the other resources constituting a GridDB cluster are persisted to disk. The persisted resources are listed below.

-

Database file

A database file is a group of files, consisting of a transaction log file and a checkpoint file, that is persisted to an HDD or SSD. The transaction log file is updated every time the GridDB database is updated or a transaction occurs, whereas the checkpoint file is written at a specified time interval.

-

Checkpoint file

A checkpoint file holds partition group data that is persisted from memory to disk at a specified time interval, as defined in the node definition file (/checkpoint/checkpointInterval). The size of the checkpoint file increases with the size of the data. However, once the file has been expanded, its size does not decrease even if data such as containers or rows is deleted. Instead, GridDB reuses the free space.

-

Transaction log file

Transaction data that is written to the in-memory database is persisted to the transaction log file by writing the data sequentially in a log format.

-

Definition file

There are 2 types of definition file: a parameter file used when composing a cluster (gs_cluster.json: hereinafter referred to as a cluster definition file), and a parameter file to set the operations and resources of a node in the cluster (gs_node.json: hereinafter referred to as a node definition file). In addition, there is also a user definition file for GridDB administrator users.

-

Event log file

The operating log of the GridDB server, including messages such as errors and warnings, is saved in this file.

-

Backup file

Backup data of the GridDB data files is saved.

Database file

The layout of these resources can be defined in GridDB home (the path specified in the environment variable GS_HOME). In the initial installation state, the /var/lib/gridstore directory is GridDB home, and the initial data of each resource is placed under this directory.

The initial configuration status is as follows.

    /var/lib/gridstore/        # GridDB home directory
        admin/                 # gs_admin home directory
        backup/                # Backup directory
        conf/                  # Definition files directory
            gs_cluster.json    # Cluster definition file
            gs_node.json       # Node definition file
            password           # User definition file
        data/                  # Database directory
        log/                   # Log directory

The location of GridDB home can be changed by setting the .bash_profile file of the OS user gsadm. If you change the location, please also move resources in the above directory accordingly.

The .bash_profile file contains two environment variables, GS_HOME and GS_LOG.

    vi .bash_profile

    # GridStore specific environment variables
    GS_LOG=/var/lib/gridstore/log
    export GS_LOG
    GS_HOME=/var/lib/gridstore    # GridDB home directory path
    export GS_HOME
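As a minimal sketch of how a script might resolve the initial resource layout from these two environment variables, the Python helper below derives each default location from GS_HOME and GS_LOG. The function name `default_layout` and the dictionary keys are hypothetical and not part of GridDB. The sketch only reflects the initial configuration, so it does not take into account directories that have been relocated through the node definition file.

```python
from pathlib import PurePosixPath

# Default GridDB home in the initial installation state (from the text above).
DEFAULT_GS_HOME = "/var/lib/gridstore"


def default_layout(env):
    """Return the initial resource locations for a mapping of environment variables.

    `env` is any dict-like object, for example os.environ.
    """
    home = PurePosixPath(env.get("GS_HOME", DEFAULT_GS_HOME))
    return {
        "home": home,
        "admin": home / "admin",
        "backup": home / "backup",
        "conf": home / "conf",
        "cluster_definition": home / "conf" / "gs_cluster.json",
        "node_definition": home / "conf" / "gs_node.json",
        "user_definition": home / "conf" / "password",
        "data": home / "data",
        # GS_LOG, when set, names the log directory directly.
        "log": PurePosixPath(env.get("GS_LOG", str(home / "log"))),
    }


if __name__ == "__main__":
    import os

    for name, path in default_layout(os.environ).items():
        print(f"{name:20s} {path}")
```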

The database directory, backup directory and server event log directory can be changed by changing the settings of the node definition file as well.

In a system that has multiple disk drives, be sure to change the definition information in order to prevent loss of backup data during a disk failure.

See Parameters for the contents that can be set in the cluster definition file and node definition file.

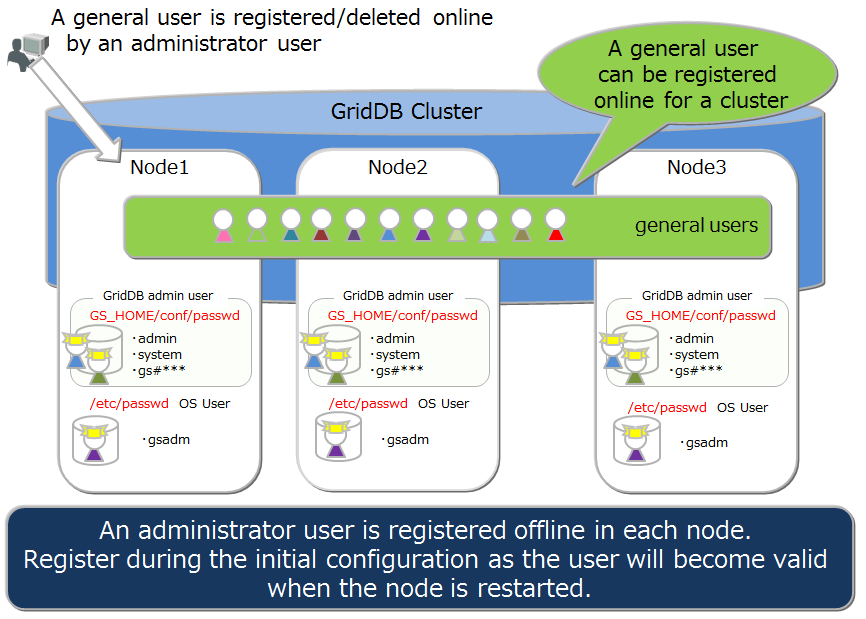

4.2 User management

There are 2 types of user in GridDB: an OS user, which is created during installation, and a user who performs operations/development in GridDB (hereinafter referred to as a GridDB user).

4.2.1 OS user

An OS user has the right to execute operating functions in GridDB, and a gsadm user is created during GridDB installation. This OS user is hereinafter referred to as gsadm.

All GridDB resources become the property of gsadm. In addition, all operating commands in GridDB are executed by gsadm.

When an operating command is executed, a check is conducted to see whether the user has the right to connect to the GridDB server and execute the command. This authentication is performed with a GridDB user.

4.2.2 GridDB user

-

Administrator user and general user

There are 2 types of GridDB user, an administrator user and a general user, which differ in terms of which functions can be used. Immediately after the installation of GridDB, 2 users, system and admin, are registered as default administrator users.

An administrator user is a user created to perform GridDB operations while general users are users used by the application system.

For security reasons, administrator users and general users need to be used differently according to the usage purpose.

-

Creating a user

An administrator user can be registered or deleted by gsadm, and the information is saved in the password file of the definition file directory as a GridDB resource. As an administrator user is saved/managed in a local file of the OS, the file has to be placed so that the settings are the same in all the nodes constituting the cluster. In addition, administrator users need to be set up prior to starting the GridDB server. Administrator users that are registered after the GridDB server has started are not valid.

A general user can be created after an administrator user starts cluster operations in GridDB. A general user cannot be registered before the start of cluster services. A general user can only be registered using an operating command against a cluster as it is created after a cluster is composed in GridDB and maintained as management information in the GridDB database.

GridDB users

Since administrator user information is not communicated automatically among the nodes of a cluster, an administrator needs to make the same settings in all the nodes and perform operational management, such as determining a master management node for the definition file and distributing the information from the master management node to all the nodes that constitute the cluster.

-

Rules when creating a user

There are naming rules to be adopted when creating a user name.

- Administrator user: Specify a user starting with “gs#”. After “gs#”, the name should be composed of only alphanumeric characters and the underscore mark. Since the name is not case-sensitive, gs#manager and gs#MANAGER cannot be registered at the same time.

- General user: Specify using alphanumeric characters and the underscore mark. However, the first character cannot be a number. In addition, since the name is not case-sensitive, user and USER cannot be registered at the same time. The names system and admin cannot be used, as they belong to the default administrator users.

- Password: No restrictions on the characters that can be specified.

A string consisting of up to 64 characters can be specified for the user name and password.
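The naming rules above can be sketched as a small Python validator. The function names are hypothetical and this is not how GridDB itself checks names. The sketch assumes that the "gs#" prefix is written in lower case, that at least one character must follow it, that "alphanumeric" means ASCII letters and digits, and that the reserved names system and admin are compared case-insensitively.

```python
import re

MAX_LEN = 64  # upper limit for user names (and passwords) stated in the text

# Administrator user: "gs#" followed by alphanumeric characters and underscores.
_ADMIN_RE = re.compile(r"gs#[A-Za-z0-9_]+")
# General user: alphanumeric characters and underscores, not starting with a digit.
_GENERAL_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
# Names of the default administrator users, which cannot be used by general users.
_RESERVED = {"system", "admin"}


def is_valid_admin_name(name):
    return len(name) <= MAX_LEN and _ADMIN_RE.fullmatch(name) is not None


def is_valid_general_name(name):
    return (
        len(name) <= MAX_LEN
        and _GENERAL_RE.fullmatch(name) is not None
        and name.lower() not in _RESERVED
    )


def conflicts(name, existing):
    """User names are not case-sensitive, so compare them case-insensitively."""
    return name.lower() in {e.lower() for e in existing}
```

For example, `conflicts("gs#MANAGER", {"gs#manager"})` is true, which mirrors the rule that both names cannot be registered at the same time.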

4.2.3 Usable function

The operations that can be carried out by an administrator user and a general user are shown below. Among the operations, commands which can be executed by gsadm without using a GridDB user are marked with “✓✓”.

| Operations | Operating details | Operating tools used | gsadm | Administrator user | General user |
|---|---|---|---|---|---|
| Node operations | Starting a node | gs_startnode/gs_sh | | ✓ | ✗ |
| | Stopping a node | gs_stopnode/gs_sh | | ✓ | ✗ |
| Cluster operations | Building a cluster | gs_joincluster/gs_sh | | ✓ | ✗ |
| | Adding a node to a cluster | gs_appendcluster/gs_sh | | ✓ | ✗ |
| | Detaching a node from a cluster | gs_leavecluster/gs_sh | | ✓ | ✗ |
| | Stopping a cluster | gs_stopcluster/gs_sh | | ✓ | ✗ |
| User management | Registering an administrator user | gs_adduser | ✓✓ | ✗ | ✗ |
| | Deleting an administrator user | gs_deluser | ✓✓ | ✗ | ✗ |
| | Changing the password of an administrator user | gs_passwd | ✓✓ | ✗ | ✗ |
| | Creating a general user | gs_sh | | ✓ | ✗ |
| | Deleting a general user | gs_sh | | ✓ | ✗ |
| | Changing the password of a general user | gs_sh | | ✓ | ✓: Individual only |
| Database management | Creating/deleting a database | gs_sh | | ✓ | ✗ |
| | Assigning/cancelling a user in the database | gs_sh | | ✓ | ✗ |
| Data operations | Creating/deleting a container or table | gs_sh | | ✓ | ✓: Only in the DB of the individual |
| | Registering data in a container or table | gs_sh | | ✓ | ✓: Only in the DB of the individual |
| | Searching for a container or table | gs_sh | | ✓ | ✓: Only in the DB of the individual |
| | Creating index to a container or table | gs_sh | | ✓ | ✓: Only in the DB of the individual |
| Backup management | Creating a backup | gs_backup | | ✓ | ✗ |
| | Restoring a backup | gs_restore | ✓✓ | ✗ | ✗ |
| | Displaying a backup list | gs_backuplist | | ✓ | ✗ |
| System status management | Acquiring system information | gs_stat | | ✓ | ✗ |
| | Changing system parameter | gs_paramconf | | ✓ | ✗ |
| Data import/export | Importing data | gs_import | | ✓ | ✓: Only in accessible object |
| | Exporting data | gs_export | | ✓ | ✓: Only in accessible object |

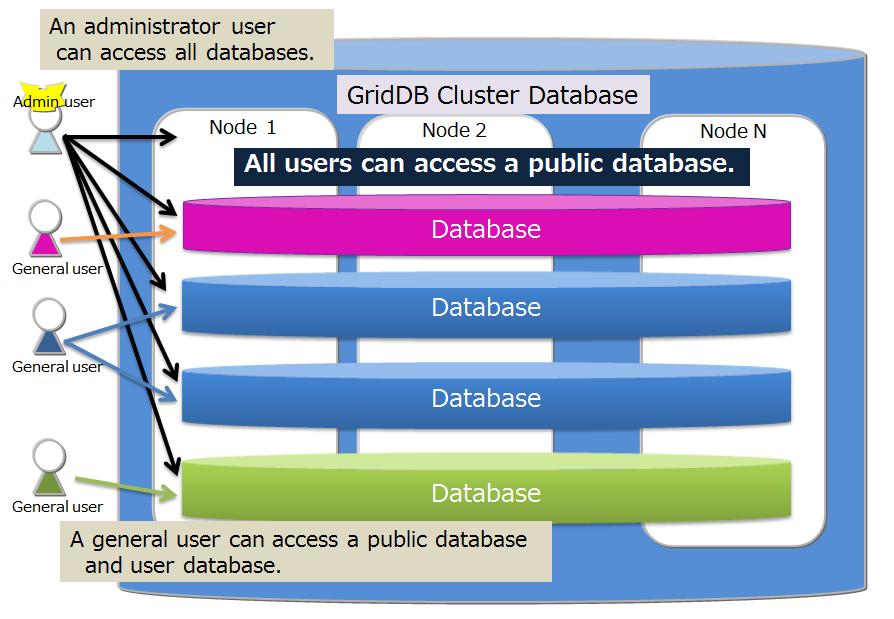

4.2.4 Database and user

Access to a cluster database in GridDB can be separated on a user basis. The separation unit is known as a database. The following is a cluster database in the initial state.

-

public

- The database can be accessed by all administrator users and general users.

- This database is used when connected without specifying the database at the connection point.

Multiple databases can be created in a cluster database. Creation of databases and assignment to users are carried out by an administrator user.

The rules for creating a database are as shown below.

- A maximum of 128 users and a maximum of 128 databases can be created in a cluster database.

- A string consisting of alphanumeric characters, the underscore mark, the hyphen mark, the dot mark, the slash mark and the equal mark can be specified for the database. However, the first character cannot be a number.

- A string consisting of up to 64 characters can be specified for the database name.

- Although the case sensitivity of the database name is maintained, a database whose name differs from an existing name only in case cannot be created.

- “public” and “information_schema” cannot be specified, as these names are used for the default DB.

Only assigned general users and administrator users can access a database. An administrator user can access all databases. The following rules apply when assigning a general user to a database.

- Only 1 general user can be assigned to 1 database

- Multiple databases can be assigned to 1 user

Database and users

4.3 Data management function

To register and search for data in GridDB, a container (table) needs to be created to store the data. It is called a container when operating with NoSQL I/F, and a table when operating with NewSQL I/F. This section describes the data types that can be registered in a container (table), data size, index and data management functions.

The naming rules for containers (tables) are the same as those for databases.

- A string consisting of alphanumeric characters, the underscore mark, the hyphen mark, the dot mark, the slash mark and the equal mark can be specified. However, the first character cannot be a number.

- Although the case sensitivity of the name is maintained, a container (table) which has the same name when it is not case-sensitive cannot be created.

4.3.1 Container (table) data type

There are 2 container (table) data types.

A timeseries container (timeseries table) is a data type which is suitable for managing data that occurs from moment to moment together with its occurrence time, while a collection (table) is suitable for managing a variety of data.

The schema can be set in a container (table).

The data types that can be registered in a container (table) are the basic data types and the array data type.

4.3.1.1 Basic data types

Describes the basic data types that can be registered in a container (table). A basic data type cannot be expressed by a combination of other data types.

| Data type | Description |

|---|---|

| BOOL | True or false |

| STRING | Composed of an arbitrary number of characters using the unicode code point |

| BYTE | Integer value from -2^{7} to 2^{7}-1 (8 bits) |

| SHORT | Integer value from -2^{15} to 2^{15}-1 (16 bits) |

| INTEGER | Integer value from -2^{31} to 2^{31}-1 (32 bits) |

| LONG | Integer value from -2^{63} to 2^{63}-1 (64 bits) |

| FLOAT | Single-precision data type (32 bits). Floating-point number defined in IEEE754 |

| DOUBLE | Double-precision data type (64 bits). Floating-point number defined in IEEE754 |

| TIMESTAMP | Data type expressing the date and time. Data format maintained in the database is UTC, and accuracy is in milliseconds |

| GEOMETRY | Data type to represent a space structure |

| BLOB | Data type for binary data such as images, audio, etc. |

The following restrictions apply to the size of the data that can be managed for STRING, GEOMETRY and BLOB data. The restriction value varies according to the block size which is the input/output unit of the database in the GridDB definition file (gs_node.json).

| Data type | Block size (64KB) | Block size (1MB) |

|---|---|---|

| STRING | Maximum 31KB (equivalent to UTF-8 encode) | Maximum 128KB (equivalent to UTF-8 encode) |

| GEOMETRY | Maximum 31KB (equivalent to the internal storage format) | Maximum 128KB (equivalent to the internal storage format) |

| BLOB | Maximum 1GB - 1Byte | Maximum 1GB - 1Byte |
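As a rough illustration of the STRING limits in the table above, the following Python sketch checks whether a value fits for a given block size by measuring its UTF-8 encoded length. The function name and the dictionary are hypothetical, and the sketch assumes that 1KB means 1024 bytes.

```python
# Maximum STRING size in bytes (UTF-8 encoded), keyed by block size.
# Assumption: 1KB = 1024 bytes.
STRING_LIMIT_BYTES = {
    "64KB": 31 * 1024,
    "1MB": 128 * 1024,
}


def string_fits(value, block_size="64KB"):
    """Return True if the UTF-8 encoding of value is within the limit."""
    return len(value.encode("utf-8")) <= STRING_LIMIT_BYTES[block_size]
```

Note that the limit applies to the encoded size, not to the number of characters: a string of multi-byte characters reaches the limit with fewer characters than an ASCII string.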

[Memo]

- GEOMETRY is not supported in a table (operation by NewSQL I/F). Please use GEOMETRY in a container (operation by NoSQL I/F).

4.3.1.2 HYBRID

A data type composed of a combination of basic data types that can be registered in a container. The only hybrid data type in the current version is an array.

-

ARRAY

Expresses an array of values. Among the basic data types, only GEOMETRY and BLOB data cannot be maintained as an array. The restriction on the data volume that can be maintained in an array varies according to the block size of the database.

| Data type | Block size (64KB) | Block size (1MB) |
|---|---|---|
| Number of arrays | 4000 | 65000 |

[Memo]

The following restrictions apply to TQL operations in an array column.

-

Although the i-th value in the array column can be compared, calculations (aggregation) cannot be performed on all the elements.

- (Example) When column A is defined as an array

- The elements of an array can be specified and compared, as in select * where ELEMENT(0, column A) > 0. However, a variable cannot be specified in place of the "0" in ELEMENT.

- Aggregation such as select SUM(column A) cannot be carried out.

4.3.2 GEOMETRY-type (Spatial-type)

GEOMETRY data is widely used in map information systems, etc. This type can be specified only for a container and is not supported for a table.

For GEOMETRY, data is written in WKT (Well-known text). WKT is formulated by the Open Geospatial Consortium (OGC), a nonprofit organization promoting standardization of information on geospatial information.

The following WKT format data can be stored in the GEOMETRY column.

-

POINT

- Point represented by two or three-dimensional coordinate.

- Example) POINT(0 10 10)

-

LINESTRING

- Set of straight lines in two or three-dimensional space represented by two or more points.

- Example) LINESTRING(0 10 10, 10 10 10, 10 10 0)

-

POLYGON

- Closed area in two or three-dimensional space represented by a set of straight lines.

- Example) POLYGON((0 0,10 0,10 10,0 10,0 0)), POLYGON((35 10, 45 45, 15 40, 10 20, 35 10),(20 30, 35 35, 30 20, 20 30))

-

POLYHEDRALSURFACE

- Area in the three-dimensional space represented by a set of the specified area.

- Example) POLYHEDRALSURFACE(((0 0 0, 0 1 0, 1 1 0, 1 0 0, 0 0 0)), ((0 0 0, 0 1 0, 0 1 1, 0 0 1, 0 0 0)), ((0 0 0, 1 0 0, 1 0 1, 0 0 1, 0 0 0)), ((1 1 1, 1 0 1, 0 0 1, 0 1 1, 1 1 1)), ((1 1 1, 1 0 1, 1 0 0, 1 1 0, 1 1 1)), ((1 1 1, 1 1 0, 0 1 0, 0 1 1, 1 1 1)))

-

QUADRATICSURFACE

- Two-dimensional curved surface in a three-dimensional space represented by defining equation f(X) = <AX, X> + BX + c.

Operations using GEOMETRY can be executed with API or TQL.

With TQL, two or three-dimensional spatial structures can be managed. Generation and judgement functions are also provided.

SELECT * WHERE ST_MBRIntersects(geom, ST_GeomFromText('POLYGON((0 0,10 0,10 10,0 10,0 0))'))

See “GridDB API Reference” (GridDB_API_Reference.html) for details of the functions of TQL.

4.3.3 Container (table) ROWKEY

A ROWKEY is a key set for the rows of a container. The uniqueness of a row with a set ROWKEY is guaranteed.

In NewSQL I/F, ROWKEY is called PRIMARY KEY.

A ROWKEY can be set in the first column of the row. (This is set in Column No. 0 since columns start from 0 in GridDB.)

-

For a timeseries container (timeseries table)

- ROWKEY (PRIMARY KEY) is a TIMESTAMP

- Must be specified.

-

For a collection (table)

- A ROWKEY (PRIMARY KEY) is either a STRING, INTEGER, LONG or TIMESTAMP column.

- Need not be specified.

A default index, prescribed in advance according to the column data type, is set in the column specified as ROWKEY (PRIMARY KEY).

In the current version, the default index of all STRING, INTEGER, LONG or TIMESTAMP data that can be specified in a ROWKEY (PRIMARY KEY) is the TREE index.

4.3.4 Container (table) index

A condition-based search can be processed quickly by creating an index for the columns of a container (table).

There are 3 types of index: hash index (HASH), tree index (TREE) and space index (SPATIAL). A hash index is used in an equivalent-value search when searching with a query in a container. Besides equivalent-value search, a tree index is also used in range comparisons (greater than or equal to, less than or equal to, etc.).

The index that can be set differs depending on the container (table) type and column data type.

-

HASH INDEX

- An equivalent value search can be conducted quickly but this is not suitable for searches that read the rows sequentially.

-

A hash index can be set in columns of the following data types in a collection. It cannot be set in a timeseries container, a table, or a timeseries table.

- STRING

- BOOL

- BYTE

- SHORT

- INTEGER

- LONG

- FLOAT

- DOUBLE

- TIMESTAMP

-

TREE INDEX

- Besides equivalent-value search, a tree index is also used in range comparisons (greater than or equal to, less than or equal to, etc.).

-

This can be used for columns of the following data type in any type of container (table), except for columns corresponding to a rowkey in a timeseries container (timeseries table).

- STRING

- BOOL

- BYTE

- SHORT

- INTEGER

- LONG

- FLOAT

- DOUBLE

- TIMESTAMP

-

SPATIAL INDEX

- Can be used for only GEOMETRY columns in a collection. This is specified when conducting a spatial search at a high speed.
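The difference between a hash index and a tree index can be illustrated with ordinary Python data structures. This is only a conceptual analogy, assuming a dictionary as the hash-style index and a sorted list searched with `bisect` as the tree-style index. It says nothing about how GridDB implements its indexes, and the sample rows and function names are made up for the illustration.

```python
import bisect
from collections import defaultdict

# Made-up sample rows: (id, temp).
rows = [
    {"id": 1, "temp": 21.5},
    {"id": 2, "temp": 19.0},
    {"id": 3, "temp": 21.5},
    {"id": 4, "temp": 25.2},
]

# Hash-style index: value -> row positions. Suited to equivalent-value search only.
hash_index = defaultdict(list)
for pos, row in enumerate(rows):
    hash_index[row["temp"]].append(pos)

# Tree-style index: (value, position) pairs kept in sorted order.
tree_index = sorted((row["temp"], pos) for pos, row in enumerate(rows))


def equal_to(value):
    """Equivalent-value search using the hash-style index."""
    return [rows[p]["id"] for p in hash_index.get(value, [])]


def in_range(low, high):
    """Rows with low <= temp <= high, located by binary search in sorted order."""
    lo = bisect.bisect_left(tree_index, (low, -1))
    hi = bisect.bisect_right(tree_index, (high, len(rows)))
    return [rows[p]["id"] for _, p in tree_index[lo:hi]]
```

The hash-style index answers `equal_to(21.5)` directly but offers no ordering, whereas the sorted structure can answer both equality and range conditions because neighbouring values are stored next to each other.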

Although there are no restrictions on the number of indices that can be created in a container, index creation needs to be designed carefully. An index is updated whenever rows of the container on which it is set are inserted, updated or deleted. Therefore, creating multiple indices in the columns of rows that are updated frequently affects the performance of insertion, update and deletion operations.

An index should be created in columns such as the following.

- A column that is frequently searched and sorted

- A column that is frequently used in the condition of the WHERE section of TQL

- High cardinality column (containing few duplicated values)

4.3.5 Timeseries container (timeseries table)

In order to manage data that occurs at a high frequency, such as data from a sensor, data is placed in accordance with a data placement algorithm (TDPA: Time Series Data Placement Algorithm) that makes the most effective use of memory. In a timeseries container (timeseries table), memory is allocated while internal data is classified by its periodicity. When hint information is given by the affinity function, the placement efficiency rises further. In addition, while data is moved out to disk where necessary, expired data in a timeseries container is released at almost zero cost.

A timeseries container (timeseries table) has a TIMESTAMP ROWKEY (PRIMARY KEY).

4.3.5.1 Expiry release function

For timeseries data, an expiry release function and a data retention period can be set so that data is released (deleted) when the set period is exceeded.

The settings, namely the deadline, the deadline unit and the number of divisions used when the data is released, are specified when the timeseries container (timeseries table) is created. The settings of a timeseries container (timeseries table) that has already been created cannot be changed.

The deadline can be set in day/hour/minute/sec/millisec units. The year unit and month unit cannot be specified. The current time used in determining whether the valid period has expired depends on the execution environment of each node in GridDB. Therefore, if the GridDB node time is ahead of the client time due to a network delay or a deviation in the time setting of the execution environment, a row that has not yet expired may no longer be accessible. Conversely, if only the client time is ahead, an expired row may still be accessible. We recommend setting a value larger than the minimum required time in order to avoid unintended loss of rows.

An expired row is deemed as non-existent and is no longer subject to row operations such as search and update.

Expired rows are physically deleted based on the number of divisions for the valid period (number of divisions when the data is deleted).

For example, if the valid period is 720 days and the specified number of divisions is 36, although data access will be immediately disabled upon passing the 720-day mark, the data will only be deleted after 20 days have passed from the 720 days. 20 days’ worth of physical data is deleted together.

The number of divisions is specified when creating a timeseries container (timeseries table).
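The arithmetic in the example above (720 days split into 36 divisions gives 20-day deletion units) can be written out as a short Python sketch. The function names are hypothetical, and the model is a simplification based only on the description in this section: a row becomes inaccessible once the valid period has passed, and its physical deletion follows within one division length.

```python
from datetime import datetime, timedelta


def division_length(valid_period, divisions):
    """Length of one deletion unit: the valid period split into equal parts."""
    return valid_period / divisions


def is_expired(row_time, now, valid_period):
    """A row is treated as non-existent once the valid period has passed."""
    return now - row_time > valid_period


def latest_physical_deletion(row_time, valid_period, divisions):
    """Upper bound on when the row is physically deleted, under this simple model."""
    return row_time + valid_period + division_length(valid_period, divisions)


if __name__ == "__main__":
    period = timedelta(days=720)
    print(division_length(period, 36))  # length of one deletion unit
```

In this model, `now` stands for the clock of the GridDB node, which is why a difference between the node time and the client time can change whether a row near its deadline is still visible.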

4.3.5.2 Compression function

In timeseries container (timeseries table), data can be compressed and held. Data compression can improve memory usage efficiency. Compression options can be specified when creating a timeseries container (timeseries table).

However, the following row operations cannot be performed on a timeseries container (timeseries table) for which compression options are specified.

- Updating a specified row.

- Deleting a specified row.

- Inserting a new row when there is a row at a later time than the specified time.

The following compression types are supported:

- HI: thinning out method with error value

- SS: thinning out method without error value

- NO: no compression.

The explanation of each option is as follows.

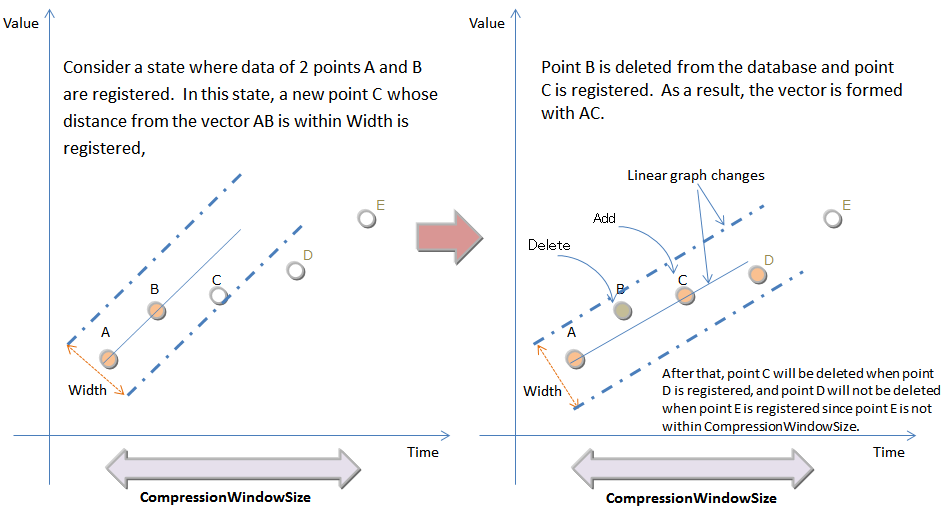

- Thinning out method with error value (HI)

HI compression is illustrated below. When the current data lies on the same slope as the data registered before and after it, the row representing the current data is omitted. The condition for the slope can be specified by the user.

The row data is omitted only when the specified column satisfies the condition and the values of the other columns are the same as the previous data. The condition is specified by the error width (Width).

Compression of timeseries container (timeseries table)

Compression can be applied to the following data types:

- LONG

- INTEGER

- SHORT

- BYTE

- FLOAT

- DOUBLE

Since lossy compression is used, data omitted by the compression cannot be restored to its original value.

Omitted data is restored within the specified error range during interpolate and sample processing.

- Thinning out method without error value (SS)

With the SS type, a row whose data is the same as that of the rows registered immediately before and immediately after it is omitted. Omitted data is restored without error during interpolate and sample processing.
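The SS rule can be sketched in a few lines of Python. This is an offline illustration over a complete list of rows, not the way GridDB applies compression while rows are being registered, and the function name is hypothetical. It assumes that "the same data" means all column values other than the timestamp are equal, and that the first and last rows are always kept.

```python
def thin_out_ss(rows):
    """rows: list of (timestamp, values) in time order, where values is a tuple.

    A row is dropped when its values equal those of both the row just
    before it and the row just after it. The first and last rows are kept.
    """
    kept = []
    for i, (ts, values) in enumerate(rows):
        is_edge = i == 0 or i == len(rows) - 1
        # Short-circuit evaluation keeps the neighbour lookups in range.
        if is_edge or values != rows[i - 1][1] or values != rows[i + 1][1]:
            kept.append((ts, values))
    return kept
```

Because every dropped row has the same values as its kept neighbours, interpolating between the kept rows reproduces the dropped values exactly, which is consistent with the restoration without error described above.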

4.3.5.3 Calculation of a timeseries container

There are calculations to perform time correction in addition to calculations to aggregate containers in a timeseries container (timeseries table).

-

Aggregate operations

When performing an aggregate operation on a container, the start and end times need to be specified before applying the aggregate operation to a row set or a specific column.

The functions of the aggregate operation are as follows:

-

AVG(column)

Returns the average value of the specified column.

-

COUNT(*)

Returns the number of rows satisfying the given condition(s).

-

MAX(column)

Returns the largest value in the specified column.

-

MIN(column)

Returns the smallest value in the specified column.

-

STDDEV(column)

Returns the standard deviation of values in the specified column.

-

SUM(column)

Returns the sum of values in the specified column.

-

VARIANCE(column)

Returns the variance of values in the specified column.


-

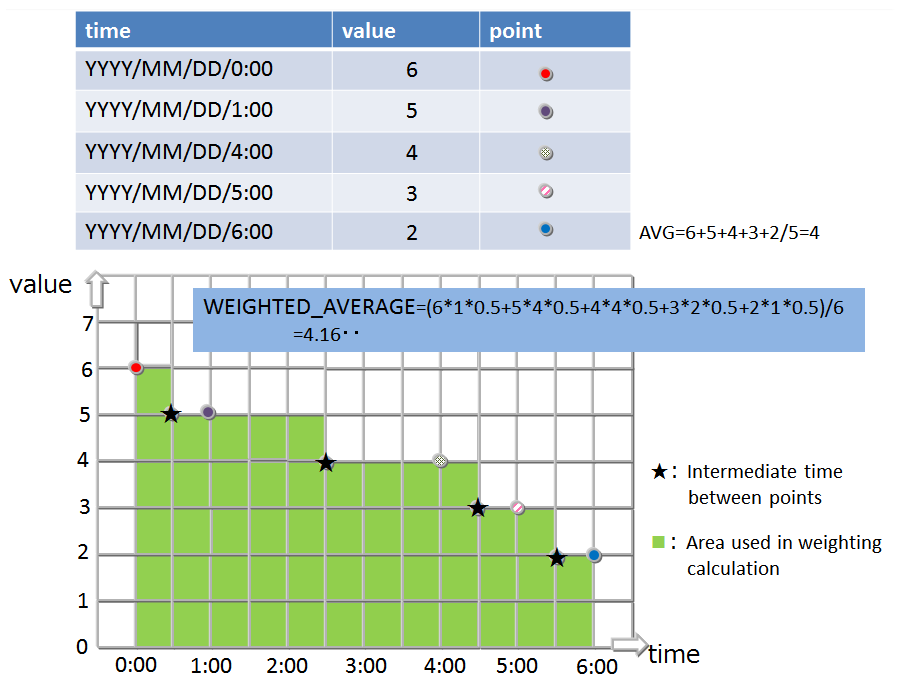

Aggregate operation specific to a timeseries container (timeseries table)

In a timeseries container (timeseries table), the calculation is performed with the data weighted at the time interval of the sampled data. In other words, if the time interval is long, the calculation is carried out assuming the value is continued for an extended time.

The functions of the aggregate operation specific to a timeseries container (timeseries table) are as follows:

-

TIME_AVG

Returns the average weighted by a time-type key of values in the specified column.

The weighted average is calculated by dividing the sum of products of sample values and their respective weighted values by the sum of weighted values. Only a numeric-type Column can be specified. The method for calculating a weighted value is as shown above.

The details of the calculation method are shown in the figure:

Aggregation of weighted values (TIME_AVG)
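A time-weighted average of this kind can be sketched in Python as follows. The text only states that the weighted average is the sum of products of sample values and weights divided by the sum of weights, so the exact weighting used here is an assumption: each sample is weighted by the time span from the midpoint to the previous sample to the midpoint to the next sample, and at either end of the range the sample's own timestamp is used as the boundary. The function name is hypothetical.

```python
def time_weighted_average(samples):
    """samples: list of (timestamp_ms, value), sorted by distinct timestamps.

    Assumed weighting: the span between the midpoints to the neighbouring
    samples; the first and last samples use their own timestamp as boundary.
    """
    if not samples:
        raise ValueError("no samples")
    if len(samples) == 1:
        return float(samples[0][1])

    weighted_sum = 0.0
    weight_total = 0.0
    for i, (ts, value) in enumerate(samples):
        start = ts if i == 0 else (samples[i - 1][0] + ts) / 2
        end = ts if i == len(samples) - 1 else (ts + samples[i + 1][0]) / 2
        weight = end - start
        weighted_sum += value * weight
        weight_total += weight
    return weighted_sum / weight_total
```

With samples at 0, 10 and 40 ms holding the values 10, 20 and 30, the weights under this assumption are 5, 20 and 15, so the result is pulled towards the values that cover longer time spans, unlike a plain AVG over the three rows.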


4.3.6 Selection and interpolation of a timeseries container (timeseries table)

Time data may deviate slightly from the expected time due to the timing of the collection and the contents of the data to be collected. Therefore when conducting a search using time data as a key, a function that allows data around the specified time to be acquired is also required.

The functions for searching the timeseries container (timeseries table) and acquiring the specified row are as follows:

-

TIME_NEXT(*, timestamp)

Selects a time-series row whose timestamp is identical with or just after the specified timestamp.

-

TIME_NEXT_ONLY(*, timestamp)

Selects a time-series row whose timestamp is just after the specified timestamp.

-

TIME_PREV(*, timestamp)

Selects a time-series row whose timestamp is identical with or just before the specified timestamp.

-

TIME_PREV_ONLY(*, timestamp)

Selects a time-series row whose timestamp is just before the specified timestamp.

In addition, the functions for interpolating the values of the columns are as follows:

-

TIME_INTERPOLATED(column, timestamp)

Returns a specified column value of the time-series row whose timestamp is identical with the specified timestamp, or a value obtained by linearly interpolating specified column values of adjacent rows whose timestamps are just before and after the specified timestamp, respectively.

-

TIME_SAMPLING(*|column, timestamp_start, timestamp_end, interval, DAY|HOUR|MINUTE|SECOND|MILLISECOND)

Takes a sampling of Rows in a specific range from a given start time to a given end time.

Each sampling time point is defined by adding a sampling interval multiplied by a non-negative integer to the start time, excluding the time points later than the end time.

If there is a Row whose timestamp is identical with each sampling time point, the values of the Row are used. Otherwise, interpolated values are used.
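The behaviour described for TIME_INTERPOLATED and TIME_SAMPLING can be sketched in Python as below. The function names are hypothetical and this is not the GridDB implementation. In particular, returning `None` when there is no row on one side of the requested time is an assumption made for the sketch, since the text does not say what happens in that case.

```python
import bisect


def interpolate(samples, t):
    """Linear interpolation. samples: list of (timestamp_ms, value), sorted."""
    times = [ts for ts, _ in samples]
    i = bisect.bisect_left(times, t)
    if i < len(times) and times[i] == t:
        return samples[i][1]  # a row exists exactly at t
    if i == 0 or i == len(times):
        return None  # assumption: no adjacent row on one side of t
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def sampling_points(start, end, interval):
    """start + k * interval for k = 0, 1, 2, ..., excluding points later than end."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    points = []
    t = start
    while t <= end:
        points.append(t)
        t += interval
    return points


def time_sampling(samples, start, end, interval):
    """Value at each sampling point: the row's value if present, else interpolated."""
    return [(t, interpolate(samples, t)) for t in sampling_points(start, end, interval)]
```

For rows at 0, 10 and 30 ms with the values 0.0, 10.0 and 50.0, sampling from 0 to 30 at an interval of 15 uses the stored values at 0 and 30 and an interpolated value at 15.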

4.3.7 Affinity function

An affinity is a function to connect related data. There are 2 types of affinity function in GridDB, data affinity and node affinity.

4.3.7.1 Data affinity function

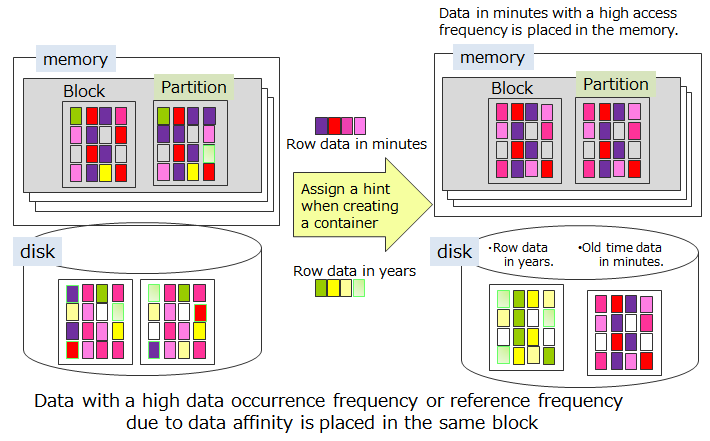

Data affinity is a function to raise the memory hit rate by arranging highly correlated data in the same block and localizing data access. By raising the memory hit rate, the number of memory misses during data access can be reduced and the throughput can be improved. By using data affinity, even machines with a small memory can be operated effectively.

The data affinity settings provide hint information as container properties when creating a container (table). The characters that can be specified for the hint information are restricted by naming rules that are similar to those for the container (table) name. Data with the same hint information is placed in the same block as much as possible.

Data affinity hints are chosen according to the update frequency and reference frequency of the data. For example, consider a monitoring system that samples data and refers to it on a daily, monthly or annual basis, in which data is registered, referenced and updated as follows.

1. Data in minutes is sent from the monitoring device and saved in the container created on a monitoring device basis.

2. Since data reports are created daily, one day’s worth of data is aggregated from the data in minutes and saved in the daily container.

3. Since data reports are created monthly, daily container (table) data is aggregated and saved in the monthly container.

4. Since data reports are created annually, monthly container (table) data is aggregated and saved in the annual container.

5. The current space used (in minutes and days) is constantly updated and displayed in the display panel.

In GridDB, a block is not occupied by a single container; instead, data registered at around the same time is placed in the same block. Therefore, the monthly data in 3., which is produced by referring to the daily container (table) in 2. and uses the aggregation time as its ROWKEY (PRIMARY KEY), may be saved in the same block as the data in minutes in 1.

If the memory is small and the data is so large that not all the monitoring data can be stored in memory, then when the annual aggregation process in 4. is carried out, the data in 3., which is scattered over many blocks, is loaded into memory. As a result, data in 1. that is not the latest and is not required all the time is loaded along with it, and the data that you want to monitor constantly may be driven out of memory (swapped out).

In this case, by providing hints to the container (table) according to the container (table) access frequency using a data affinity e.g. on a minute, daily or monthly basis, etc., data with a low access frequency and data with a high access frequency is separated into different blocks when the data is placed.

In this way, data can be placed to suit the usage scene of the application by the data affinity function.

Data Affinity

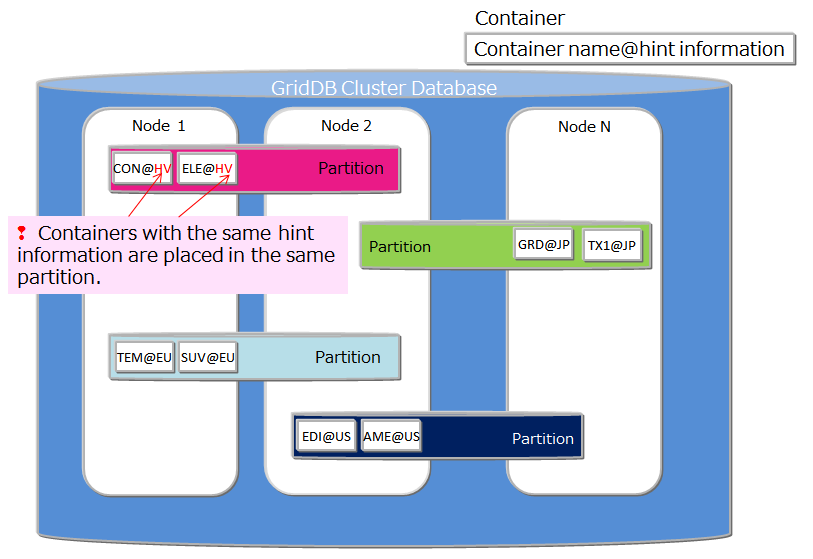

4.3.7.2 Node affinity function

Node affinity is a function to reduce the network load when accessing data by placing highly correlated containers and tables in the same node. Although there is no container JOIN operation in the TQL of a NoSQL product, a table JOIN operation can be described in the SQL of a SQL product. When tables are joined, node affinity reduces the network load of accessing a table placed in another node of the cluster. On the other hand, since concurrent processing using multiple nodes is no longer possible, the turnaround time is not shortened. Nonetheless, throughput may still rise due to the reduction in network load.

Placement of container/table based on node affinity

To use the node affinity function, hint information is given in the container (table) name when the container (table) is created. A container (table) with the same hint information is placed in the same partition. Specify the container name as shown below.

- Container (table) name@node affinity hint information

The naming rules for node affinity hint information are the same as the naming rules for the container (table) name.

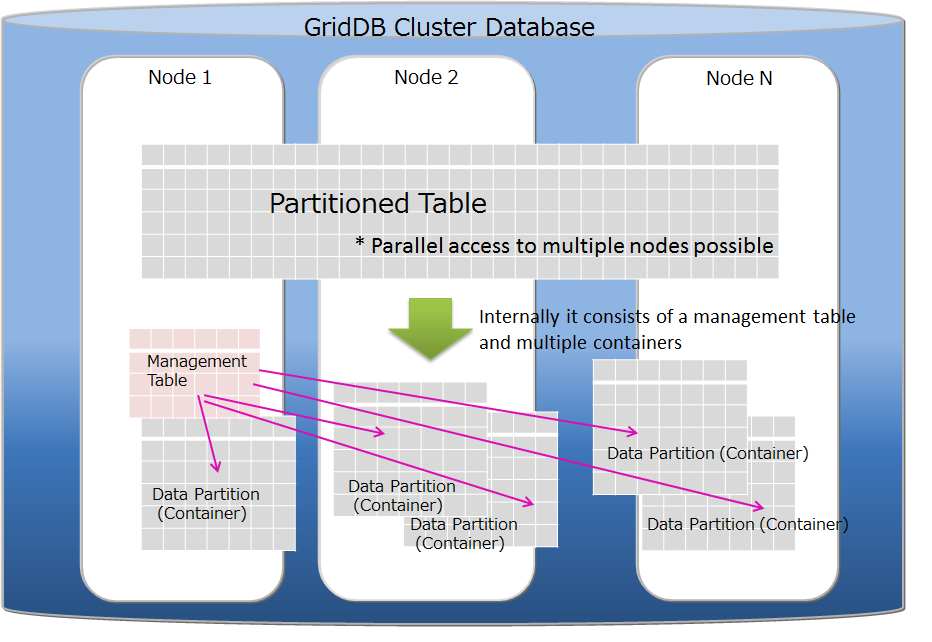

4.3.8 Table partitioning (GridDB AE/VE)

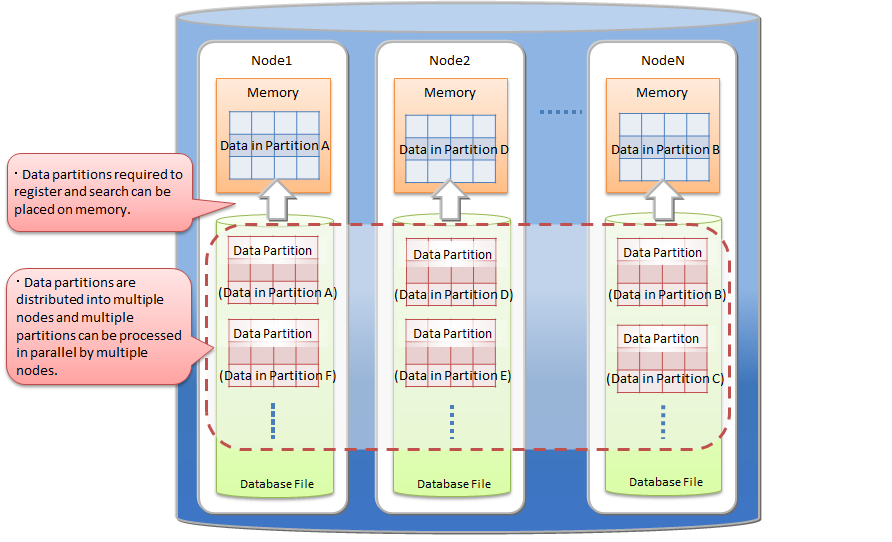

In order to improve the operation speed of applications connected to multiple nodes of the GridDB cluster, it is important to keep the data to be processed in memory as much as possible. For a huge table with a large number of rows, distributing the rows of the table to multiple nodes allows the processors and memory of multiple nodes to be used effectively. Distributed rows are stored in internal containers called "data partitions". The allocation of each row to a data partition is determined by a "partitioning key" column specified at the time of the table creation.

GridDB supports hash partitioning, interval partitioning and interval-hash partitioning as table partitioning methods.

Table partitioning is a function only for GridDB AE/VE. Tables can be created and deleted only through the NewSQL interface. Data registration, update and search can be performed through either the NewSQL or the NoSQL interface. (There are some restrictions. See TQL and SQL for the details.)

-

Data registration

When data is registered into a table, the data is stored in the appropriate data partition according to the partitioning key value and the partitioning method. It is not possible to specify the data partition in which the data is stored.

-

Index

When an index is created on a table, a local index is created for each data partition. It is not possible to create a global index for the whole table.

-

Data handling

Updating a partitioning key value causes an error. If the partitioning key must be updated, delete the data and register it again.

-

Functions of timeseries tables

The expiry release function can be used for partitioned timeseries tables. The compression function cannot be used for these tables.

-

Notes

When a column other than the primary key is specified as the partitioning key, the primary key constraint is ensured within each data partition, but it is not ensured across the whole table. As a result, the same primary key value may be registered in multiple rows of a table.
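
The note on primary keys can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not GridDB's implementation: the class `PartitionedTable`, the modulo-based `partition_of` function and the division count of 4 are all hypothetical, and the sketch rejects a duplicate key inside one data partition by raising an error.

```python
from typing import Dict, List, Tuple

NUM_PARTITIONS = 4  # hypothetical division count

Row = Tuple[int, int, str]  # (primary key, partitioning key, value)


def partition_of(partitioning_key: int) -> int:
    """Stand-in for the internal hash function; returns 1..NUM_PARTITIONS."""
    return partitioning_key % NUM_PARTITIONS + 1


class PartitionedTable:
    def __init__(self) -> None:
        # One dict per data partition: primary key -> row.
        self.partitions: Dict[int, Dict[int, Row]] = {
            p: {} for p in range(1, NUM_PARTITIONS + 1)
        }

    def insert(self, pk: int, partitioning_key: int, value: str) -> None:
        part = self.partitions[partition_of(partitioning_key)]
        # The uniqueness check only sees the rows of this one data partition.
        if pk in part:
            raise KeyError(f"primary key {pk} already exists in this data partition")
        part[pk] = (pk, partitioning_key, value)

    def rows_with_pk(self, pk: int) -> List[Row]:
        return [part[pk] for part in self.partitions.values() if pk in part]


table = PartitionedTable()
table.insert(1, 10, "a")  # stored in data partition 3
table.insert(1, 11, "b")  # stored in data partition 4, so it is accepted
duplicates = table.rows_with_pk(1)  # two rows share primary key 1
```

A third call such as `table.insert(1, 14, "c")` maps to data partition 3 again and is rejected, because the check applies only within that data partition.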

Table partitioning

4.3.8.1 Benefits of table partitioning

Dividing a large amount of data through table partitioning makes memory use more efficient and improves search performance, because a search can select only the target data.

-

Efficient use of memory

In data registration and search, the data partitions required for the processing are loaded into memory. Other data partitions, which are not targets of the processing, are not loaded. So when the data to be processed is concentrated in a few data partitions, the amount of data loaded is reduced. Swap-in and swap-out occur less frequently and performance improves.

-

Selecting target data in data search

In data search, only the data partitions matching the search condition are selected as the target data. Unnecessary data partitions are not accessed. This function is called "pruning". Because the amount of data accessed is reduced, search performance improves. The search conditions that enable pruning differ depending on the type of partitioning.

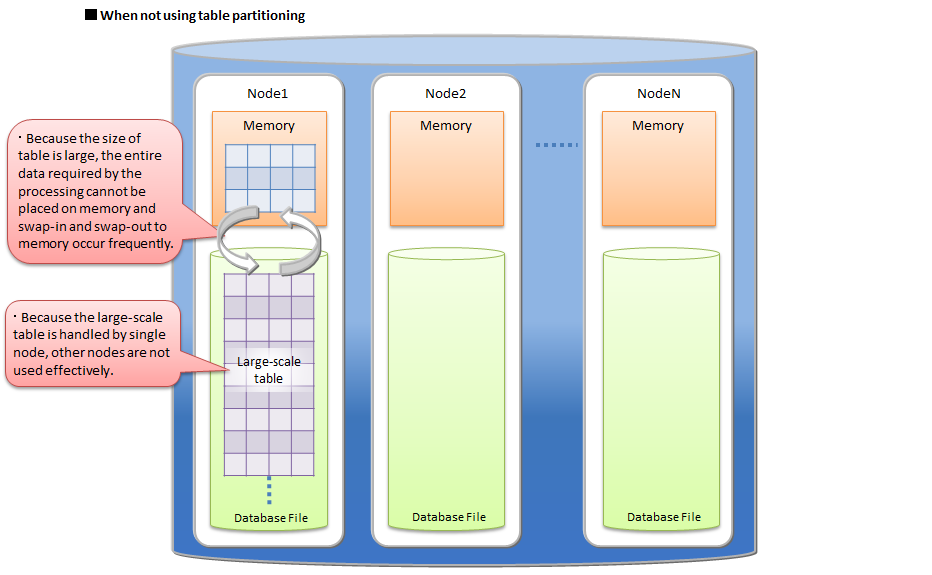

The following describes the behavior for the above items both when table partitioning is not used and when it is used.

When a large amount of data is stored in a single table that is not partitioned, it might not be possible to place all the required data in main memory, and performance might be degraded by frequent swap-in and swap-out between database files and memory. The degradation is particularly significant when the amount of data is much larger than the memory size of a GridDB node. In addition, data accesses to that table concentrate on a single node, so the parallelism of database processing decreases.

When not using table partitioning

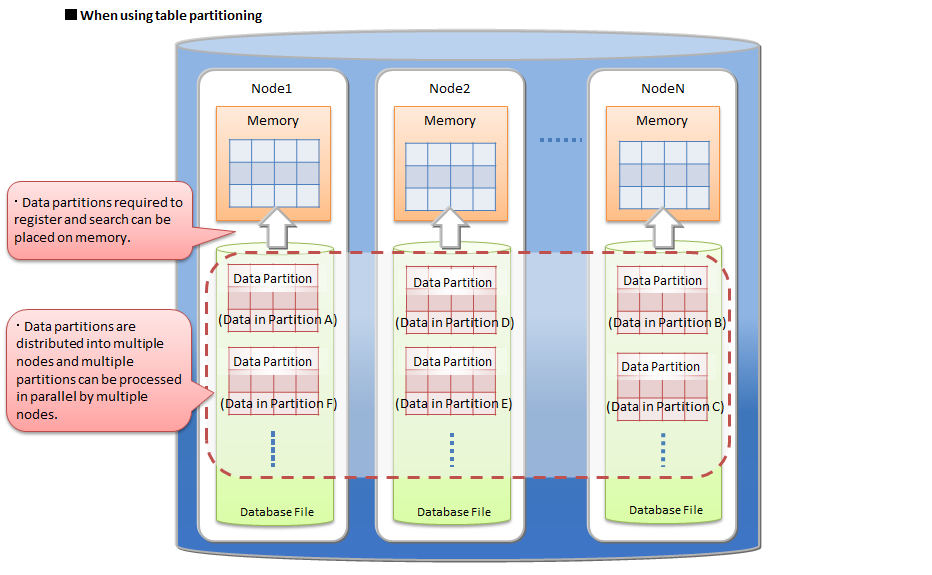

By using table partitioning, the large amount of data is divided into data partitions and those partitions are distributed across multiple nodes.

In data registration and search, only the data partitions necessary for the processing are loaded into memory. Data partitions that are not targets of the processing are not loaded. Therefore, in many cases, the data size required by the processing is smaller than for a large table that is not partitioned, and swap-in and swap-out occur less frequently. By dividing data equally among data partitions, the CPU and memory resources of each node can be used more effectively.

In addition, since data partitions are distributed across nodes, parallel data access becomes possible.

When using table partitioning

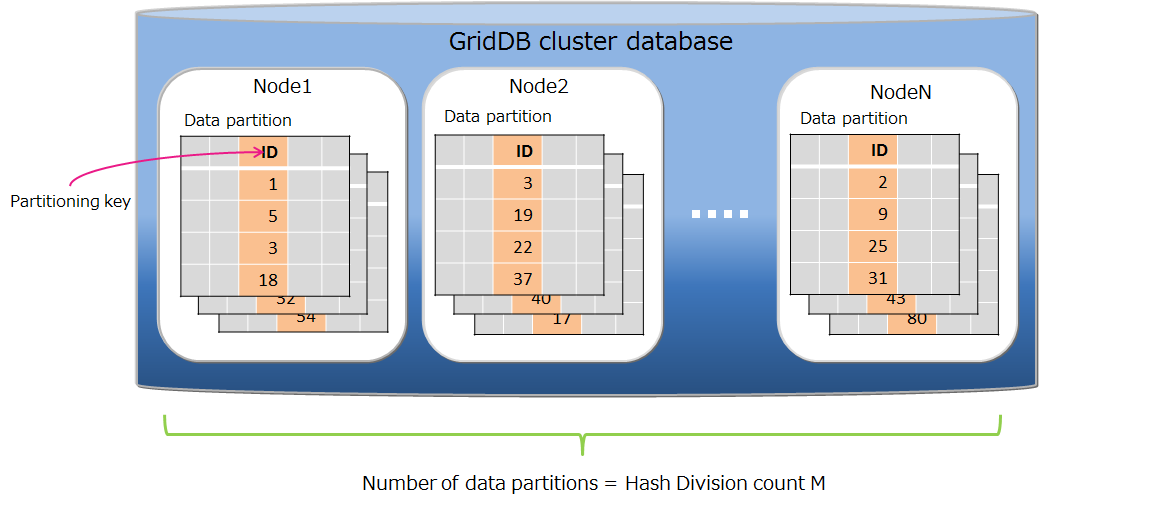

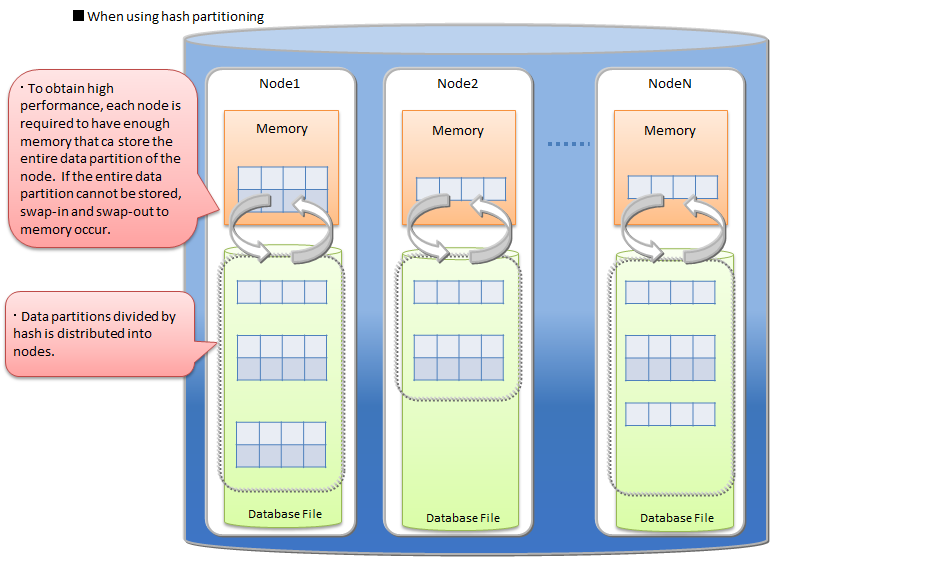

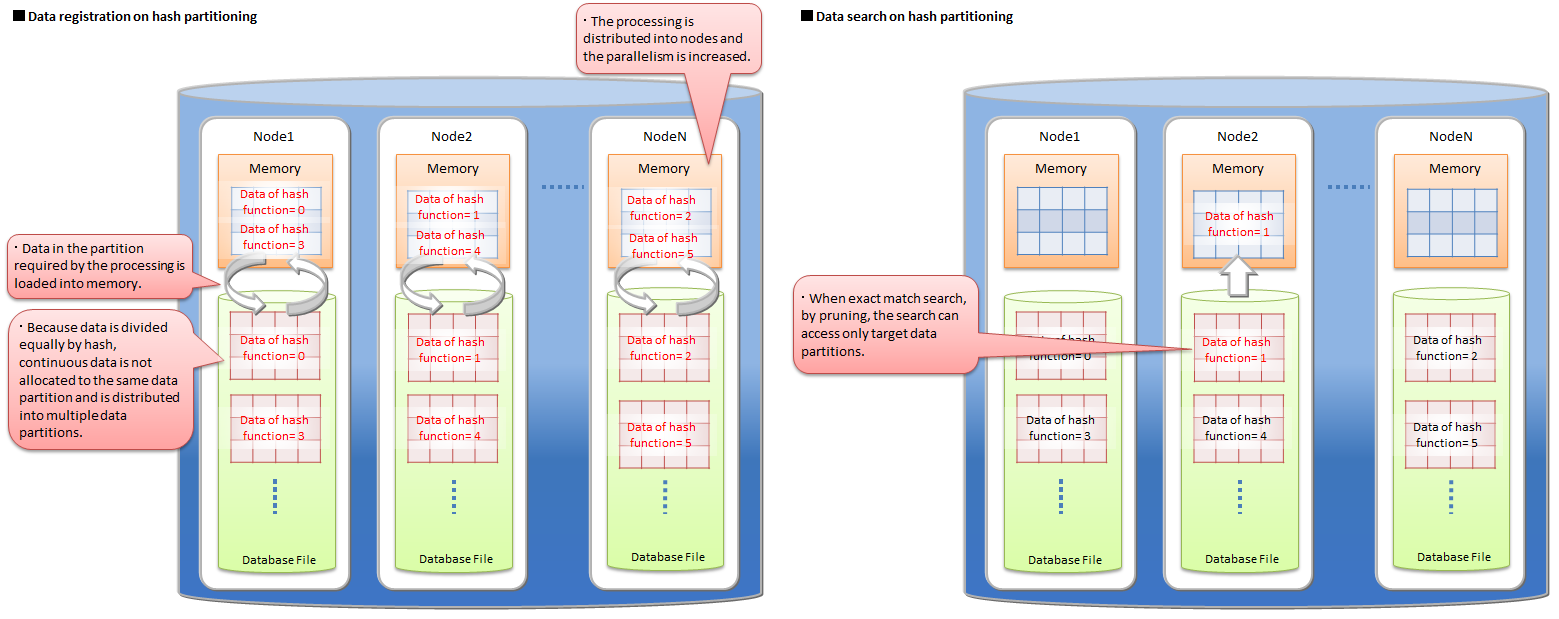

4.3.8.2 Hash partitioning

Rows are distributed evenly among the data partitions based on the hash value.

Also, in an application system that registers data at a high frequency, access concentrates at the end of the table and may lead to a bottleneck. Since hash partitioning prepares multiple tables, it can be used to distribute the access.

A hash function that returns an integer from 1 to N is defined by specifying the partitioning key column and the division count N, and division is performed based on the returned value.

Hash partitioning

-

Data partitioning

By specifying the partitioning key and the division count M, a hash function that returns 1 to M is defined, and data partitioning is performed by the hash value. The maximum hash value is 1024, so M can be at most 1024.

-

Partitioning key

There is no restriction on the column type of a partitioning key.

-

Creation of data partitions

The specified number of data partitions is created automatically at the time of table creation. The number of data partitions cannot be changed afterwards. To change the number, the table must be re-created.

-

Deletion of a table

An individual data partition cannot be deleted on its own.

By deleting a hash partitioned table, all data partitions that belong to it are also deleted.

-

Pruning

In a key match search on a hash partitioned table, pruning restricts the search to the data partitions that match the condition. Hash partitioning is therefore effective for improving performance and reducing memory usage in that case.
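
The mapping from a partitioning key to a data partition, and the pruning of a key match search, can be sketched as follows. This is a minimal illustration only: GridDB's actual hash function is not described in this manual, so `zlib.crc32` is used as a stand-in, and the names `hash_partition`, `find` and the division count of 8 are hypothetical.

```python
import zlib
from typing import Dict, List, Tuple

MAX_DIVISION_COUNT = 1024  # maximum hash value stated in the text


def hash_partition(key: object, division_count: int) -> int:
    """Map a partitioning key to a data partition number in 1..division_count."""
    if not 1 <= division_count <= MAX_DIVISION_COUNT:
        raise ValueError("division count must be between 1 and 1024")
    # crc32 is only a stand-in for the internal hash function (assumption).
    digest = zlib.crc32(repr(key).encode("utf-8"))
    return digest % division_count + 1


M = 8  # hypothetical division count chosen at table creation

# Register 1000 rows; each row goes to the data partition given by its hash.
partitions: Dict[int, List[int]] = {p: [] for p in range(1, M + 1)}
for device_id in range(1000):
    partitions[hash_partition(device_id, M)].append(device_id)


def find(key: int) -> Tuple[int, bool]:
    """Key match search with pruning: only one data partition is examined."""
    target = hash_partition(key, M)
    return target, key in partitions[target]
```

Because the data partition is derived from the key itself, `find` never touches the other M - 1 data partitions. A search on a column other than the partitioning key has no such shortcut and would have to examine every data partition.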

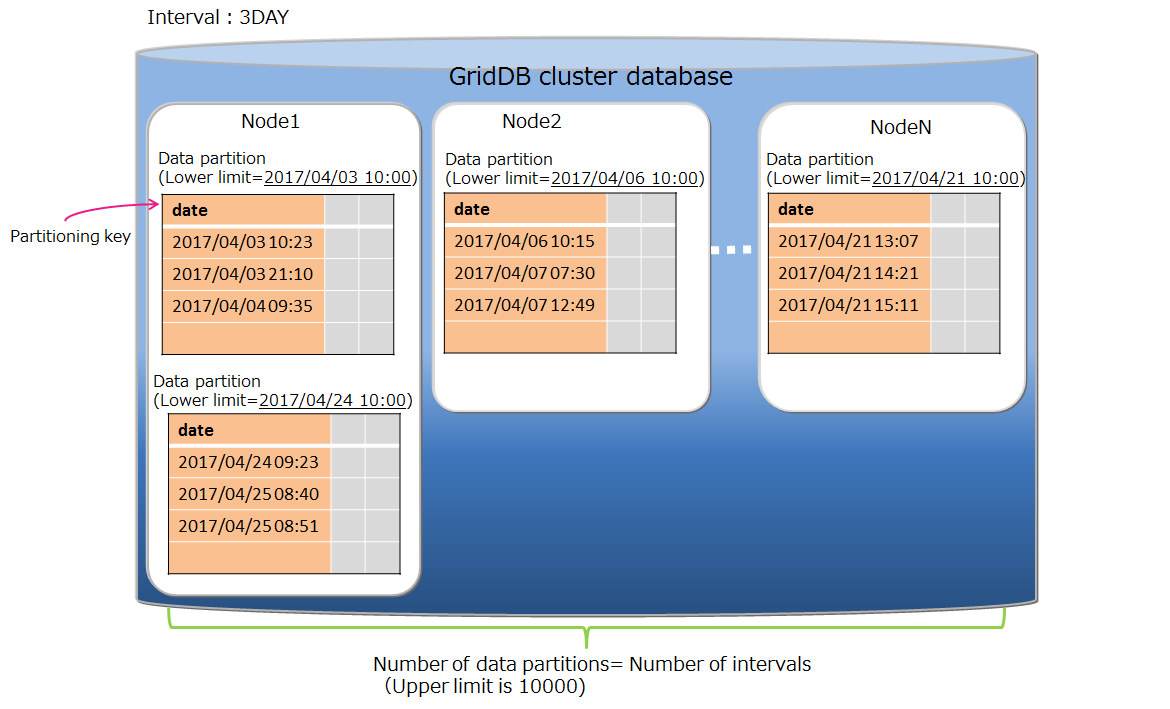

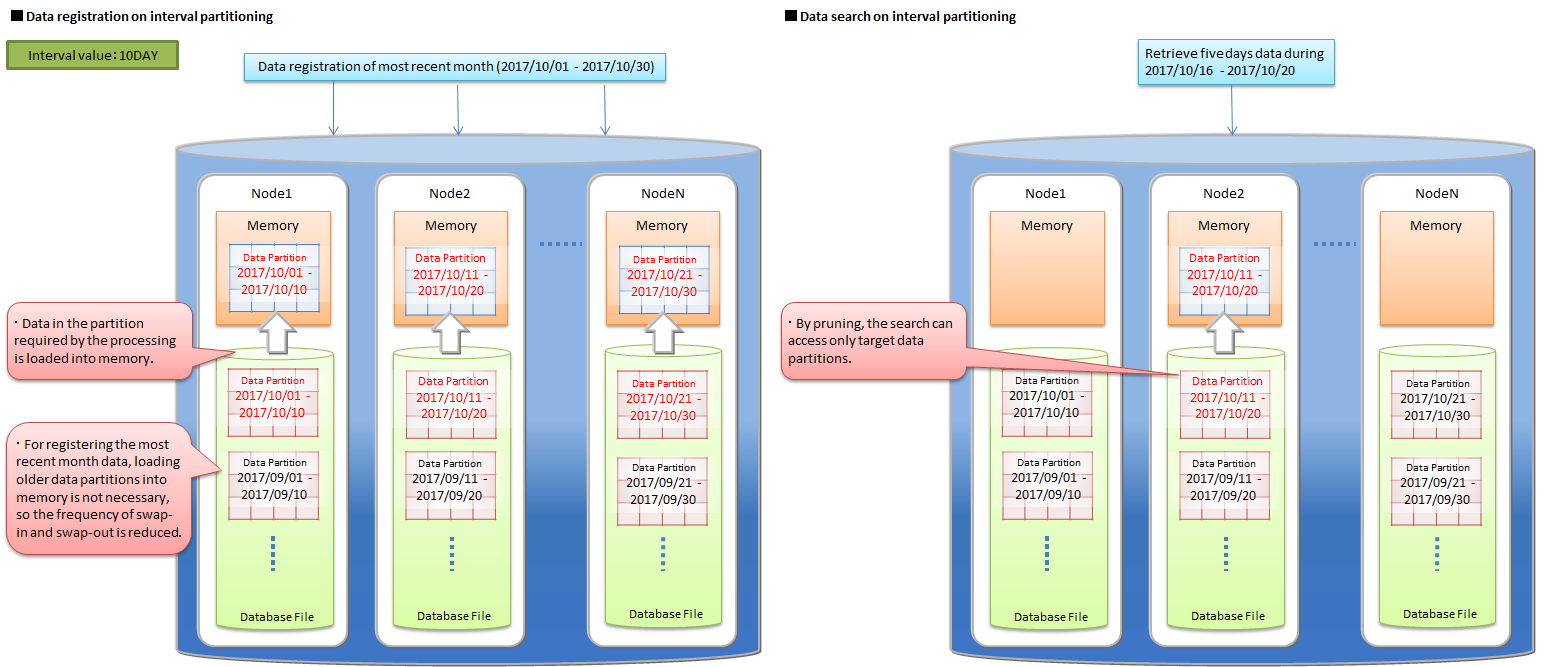

4.3.8.3 Interval partitioning

In interval partitioning, the rows in a table are divided by the specified interval value and are stored in data partitions. The range of each data partition (from the lower limit value to the upper limit value) is automatically determined by the interval value.

Data in the same range is stored in the same data partition, so operations on continuous data or range searches can be performed with less memory.

Interval partitioning

-

Data partitioning

Data partitioning is performed by the interval value. The possible interval values differ depending on the partitioning key type.

- BYTE: 1~127

- SHORT: 1~32767

- INTEGER: 1~2147483647

- LONG: 1000~9223372036854775807

- TIMESTAMP: 1~

When the partitioning key type is TIMESTAMP, it is necessary to specify the interval unit as 'DAY'.

-

Partitioning key

Data types that can be specified as a partitioning key are BYTE, SHORT, INTEGER, LONG and TIMESTAMP. The partitioning key column must have a "NOT NULL" constraint.

-

Creation of data partitions

Data partitions are not created at the time of creating the table. When no data partition exists for a row being registered, a new data partition is created automatically.

The upper limit of the number of data partitions is 10000. When the number of data partitions reaches the limit, a data registration that needs to create a new data partition causes an error. In that case, delete unnecessary data partitions and register the data again.

Specify the interval value by considering the range of the whole data and the upper limit of 10000 data partitions. If the interval value is too small relative to the range of the whole data and too many data partitions are created, unnecessary data partitions must be deleted frequently as maintenance.

-

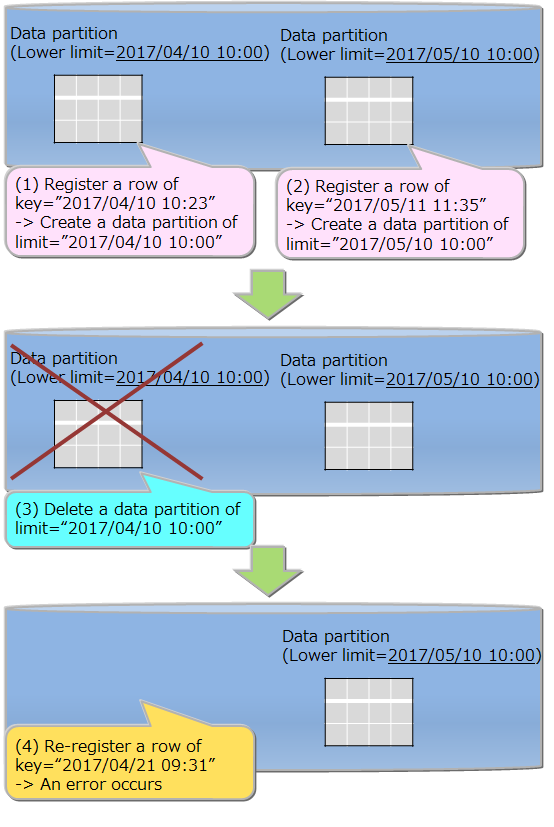

Deletion of data partitions

Each data partition can be deleted individually. A data partition that has been deleted cannot be recreated. All registration operations to the deleted data partition cause an error. Before deleting a data partition, check its data range using a metatable. See the SQL reference manual for the details of metatable operations.

By deleting an interval partitioned table, all data partitions that belong to it are also deleted.

If the expiry release function is set, a data partition that becomes empty through expiration is not deleted automatically. Because all data partitions are processed in a data search on the whole table, the search can be performed more efficiently by deleting unnecessary data partitions beforehand.

-

Maintenance of data partitions

When the number of data partitions reaches the upper limit of 10000, or when unnecessary data partitions exist, maintenance by deleting data partitions is needed.

-

How to check the number of data partitions

It can be checked by searching the metatable that holds the data about data partitions. See "GridDB Advanced Edition SQL reference" (GridDB_AE_SQL_Reference.pdf) for the details.

-

How to delete data partitions

A data partition can be deleted by specifying the lower limit value of the data partition. See "GridDB Advanced Edition SQL reference" (GridDB_AE_SQL_Reference.pdf) for the details.

Examples of interval partitioned table creation and deletion

-

Pruning

By specifying the partitioning key as a search condition in the WHERE clause, only the data partitions corresponding to the specified key are referred to in the search, so processing speed and memory usage are improved.
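
The behavior described in the items above (automatic creation of data partitions, the upper limit of 10000, deletion by lower limit value, and pruning on a range condition) can be modeled in a short in-memory sketch. The class `IntervalTable` and its methods are hypothetical, and the alignment of ranges to multiples of the interval value is an assumption made for this illustration, not a statement about GridDB internals.

```python
from typing import Dict, List, Set, Tuple

MAX_DATA_PARTITIONS = 10000  # upper limit stated in the text


def partition_range(key: int, interval: int) -> Tuple[int, int]:
    """Return the [lower, upper) range of the data partition that holds key.

    Assumption: ranges are aligned to multiples of the interval value.
    """
    lower = (key // interval) * interval
    return lower, lower + interval


class IntervalTable:
    def __init__(self, interval: int) -> None:
        self.interval = interval
        self.partitions: Dict[int, List[Tuple[int, str]]] = {}  # lower limit -> rows
        self.deleted: Set[int] = set()

    def register(self, key: int, value: str) -> None:
        lower, _ = partition_range(key, self.interval)
        if lower in self.deleted:
            raise RuntimeError("the data partition for this range was deleted")
        if lower not in self.partitions:
            # Data partitions are created on demand, up to the limit.
            if len(self.partitions) >= MAX_DATA_PARTITIONS:
                raise RuntimeError("upper limit of data partitions reached")
            self.partitions[lower] = []
        self.partitions[lower].append((key, value))

    def delete_partition(self, lower: int) -> None:
        """Delete a data partition, identified by its lower limit value."""
        del self.partitions[lower]
        self.deleted.add(lower)

    def search(self, start: int, end: int):
        """Return rows with start <= key < end and the data partitions examined."""
        first, _ = partition_range(start, self.interval)
        touched = [lo for lo in sorted(self.partitions) if first <= lo < end]
        rows = [row for lo in touched for row in self.partitions[lo]
                if start <= row[0] < end]
        return rows, touched


table = IntervalTable(interval=100)
for key in (5, 150, 160, 999):
    table.register(key, f"v{key}")

rows, touched = table.search(120, 200)  # examines only the partition starting at 100
table.delete_partition(0)               # later registrations into 0..99 now fail
```

The range search reads a single data partition out of three, which is the pruning effect described above. After `delete_partition(0)`, a call such as `table.register(7, "x")` raises an error, matching the rule that a deleted data partition cannot be recreated.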

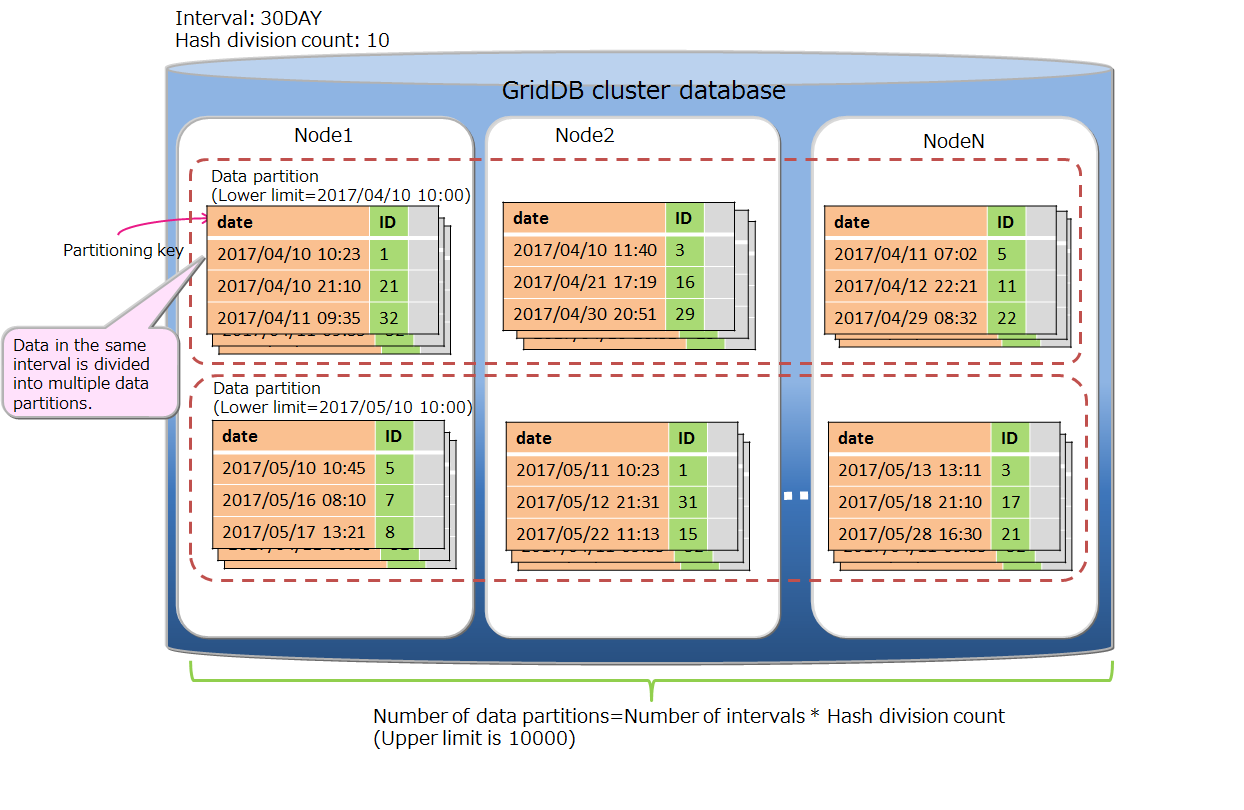

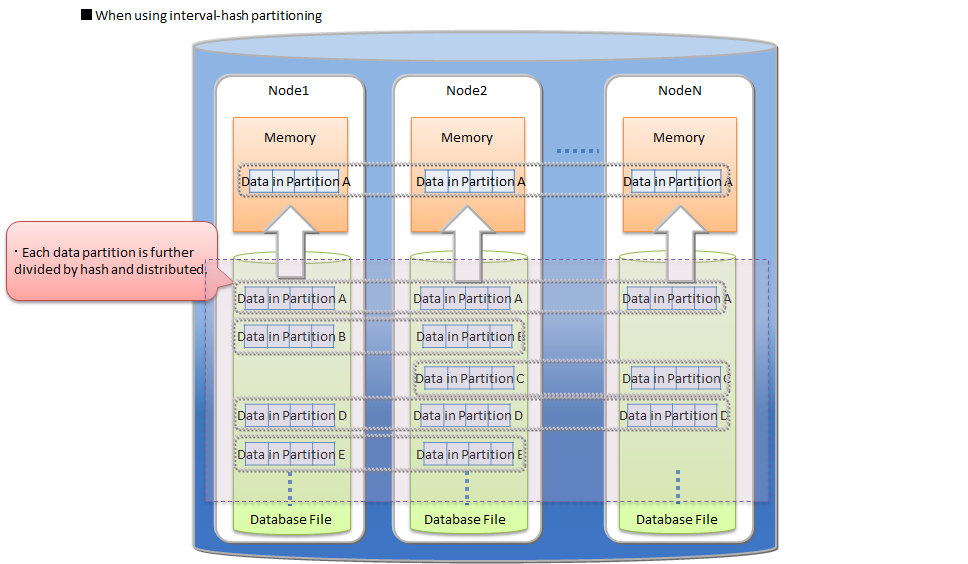

4.3.8.4 Interval-hash partitioning

The interval-hash partitioning is a combination of the interval partitioning and the hash partitioning. First the rows are divided by the interval partitioning, and further each division is divided by hash partitioning. The number of data partitions is obtained by multiplying the interval division count and the hash division count together.

Interval-hash partitioning

The rows are distributed appropriately to multiple nodes by applying hash partitioning to the result of the interval partitioning. On the other hand, the number of data partitions increases, so the overhead of searching the whole table also increases. Decide whether to use this partitioning type by weighing the data distribution against the search overhead.

The basic functions of interval-hash partitioning are the same as those of interval partitioning and hash partitioning. The items specific to interval-hash partitioning are as follows.

-

Data partitioning

The possible interval values of LONG differ from those of interval partitioning.

- BYTE: 1~127

- SHORT: 1~32767

- INTEGER: 1~2147483647

- LONG: (1000 × the hash division count)~9223372036854775807

- TIMESTAMP: 1~

-

Number of data partitions

Including partitions divided by hash, the upper limit of the number of data partitions is 10000. The behavior and the required maintenance when the limit has been reached are the same as for interval partitioning.

-

Deletion of data partitions

A group of data partitions that have the same range can be deleted together. It is not possible to delete a single data partition produced by the hash partitioning on its own.
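
The arithmetic behind these limits is simple enough to check by hand, and the following sketch does so. The function names are hypothetical. The constants come from the text above: the total is the interval division count multiplied by the hash division count, the total may not exceed 10000, and the minimum LONG interval is 1000 times the hash division count.

```python
MAX_DATA_PARTITIONS = 10000  # upper limit, including partitions divided by hash


def total_data_partitions(interval_divisions: int, hash_divisions: int) -> int:
    """Number of data partitions = interval division count x hash division count."""
    return interval_divisions * hash_divisions


def fits_limit(interval_divisions: int, hash_divisions: int) -> bool:
    """True when the layout stays within the upper limit of data partitions."""
    return total_data_partitions(interval_divisions, hash_divisions) <= MAX_DATA_PARTITIONS


def max_interval_divisions(hash_divisions: int) -> int:
    """Largest number of interval ranges that still fits within the limit."""
    return MAX_DATA_PARTITIONS // hash_divisions


def min_long_interval(hash_divisions: int) -> int:
    """Smallest interval value allowed for a LONG partitioning key."""
    return 1000 * hash_divisions


# Example: 30 interval ranges, each divided into 64 hash partitions.
example_total = total_data_partitions(30, 64)  # 1920 data partitions
example_max_ranges = max_interval_divisions(64)  # 156 ranges at most
example_min_interval = min_long_interval(64)     # 64000
```

With a hash division count of 64, the 157th interval range would push the total to 10048 and exceed the limit, so the usable number of ranges shrinks as the hash division count grows.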

4.3.8.5 Selection criteria of table partitioning type

GridDB supports hash, interval and interval-hash as table partitioning types.

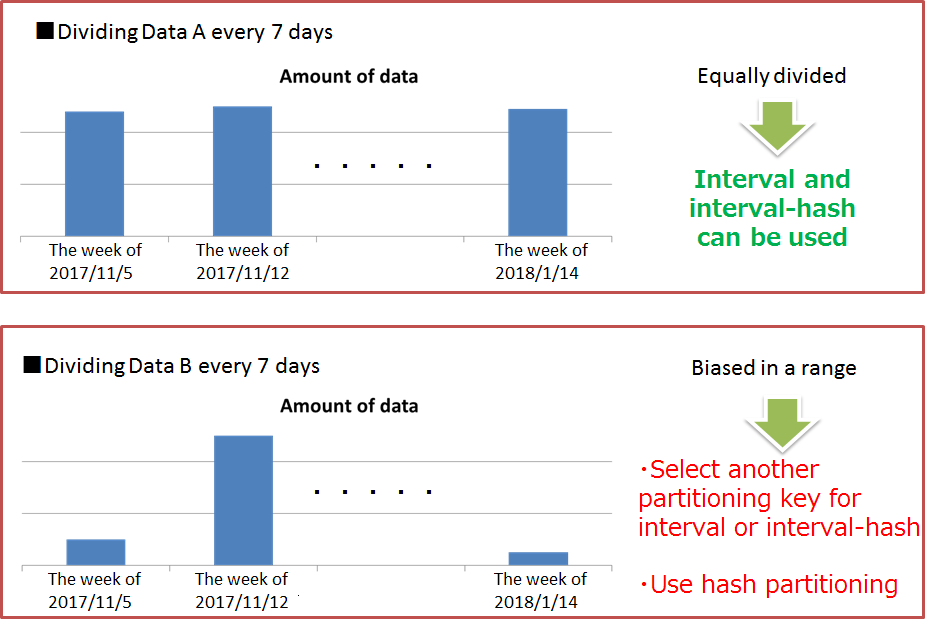

A column that is used in the conditions of a search or data access must be specified as the partitioning key for dividing the table. If a range width that divides the data equally can be determined for the values of the partitioning key, interval or interval-hash is suitable. Otherwise hash should be selected.

Data range

-

Interval partitioning, interval-hash partitioning

If an interval, that is, a range width that divides the data equally, can be determined beforehand, interval partitioning is suitable. In query processing on an interval partitioned table, partition pruning acquires the result from only the data partitions matching the search condition, so performance improves.

Interval partitioning

Therefore, when using interval partitioning, the required memory size can be reduced by selecting an interval value based on the data range that application programs frequently register or search. For example, when the most recent range of time series data, such as sensing data, is accessed frequently, specifying the width of that access range as the interval of table partitioning means that data processing is performed only in the memory holding the target data partition, and search performance is not degraded.

Examples of data registration and search on interval partitioning

Furthermore, by using interval-hash partitioning, the data in each interval is distributed equally to multiple nodes, so accesses to data in the same interval can also be performed in parallel.

Interval-hash partitioning

-

Hash partitioning

When the characteristics of the data to be stored are not clear, or when it is difficult to find an interval value that divides the data equally, hash partitioning should be selected. By specifying a column holding unique values as the partitioning key, a large amount of data can easily be partitioned uniformly.
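
For the interval case discussed above, a rough way to check a candidate interval value is to count how many data partitions the expected key range would produce and compare the count with the upper limit of 10000. The sketch below is illustrative only: the function names are hypothetical, and it assumes that data partition ranges are aligned to multiples of the interval value.

```python
import math

MAX_DATA_PARTITIONS = 10000  # upper limit of data partitions


def partitions_needed(lowest: int, highest: int, interval: int) -> int:
    """Count the data partitions touched by keys in [lowest, highest].

    Assumption: ranges are aligned to multiples of the interval value.
    """
    return highest // interval - lowest // interval + 1


def smallest_interval(lowest: int, highest: int,
                      limit: int = MAX_DATA_PARTITIONS) -> int:
    """Smallest interval value that keeps the partition count within limit."""
    span = highest - lowest + 1
    interval = max(1, math.ceil(span / limit))
    # Alignment can add one extra partition, so step up until the count fits.
    while partitions_needed(lowest, highest, interval) > limit:
        interval += 1
    return interval


# Example: keys are day numbers 0..10949 (about 30 years of daily data).
per_day = partitions_needed(0, 10949, 1)     # 10950, exceeds the limit
chosen = smallest_interval(0, 10949)         # 2
per_month = partitions_needed(0, 3649, 30)   # 122 for about 10 years at 30 days
```

An interval that barely fits the limit leaves no room for growth, so in practice a wider interval matched to the range that applications access most often, as described above, is usually the better choice.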

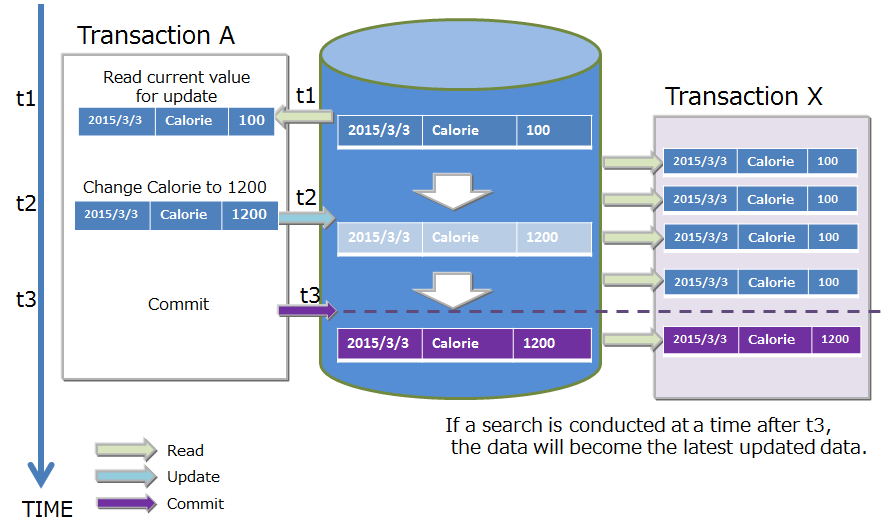

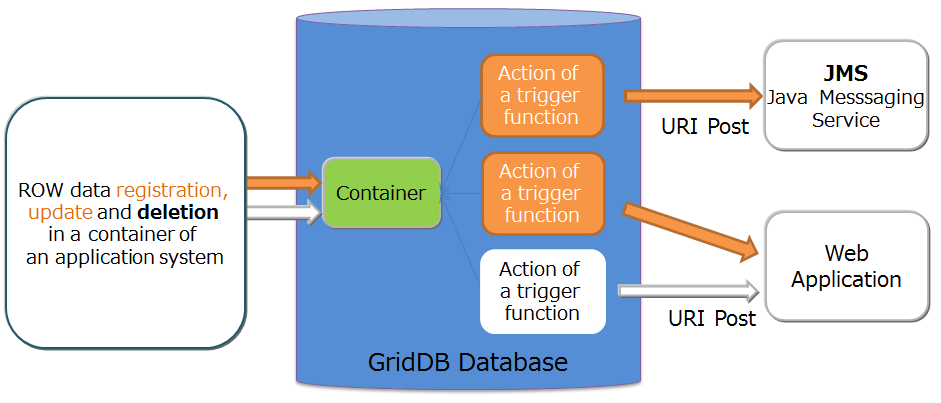

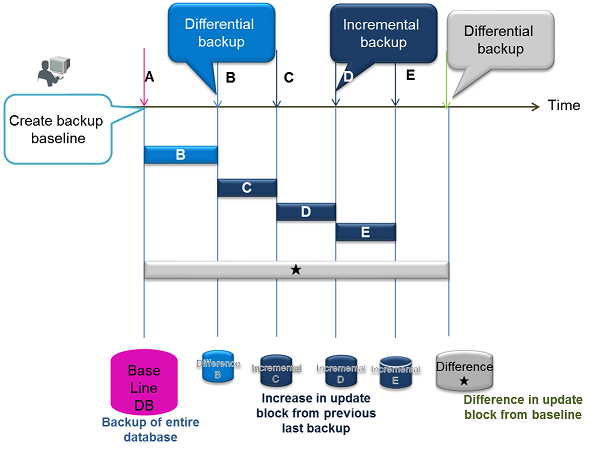

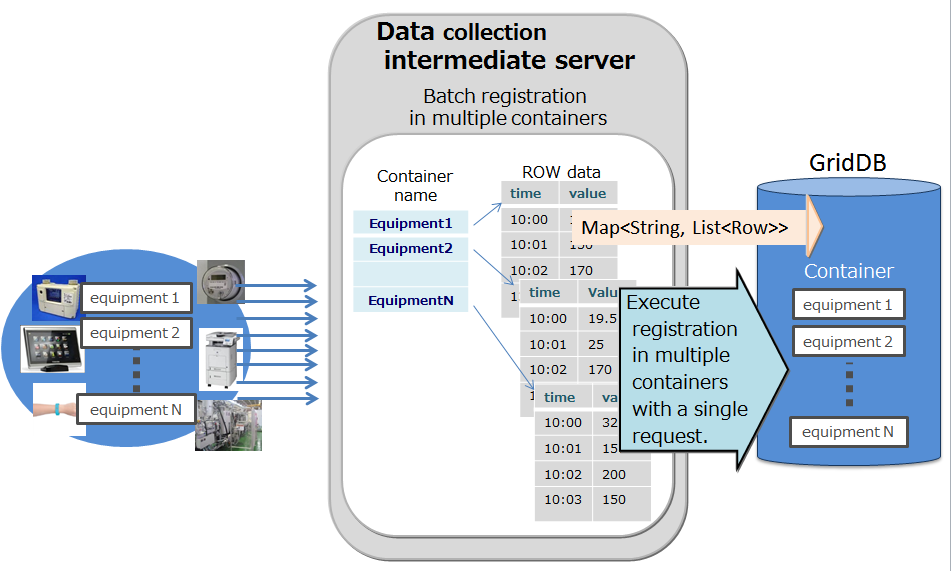

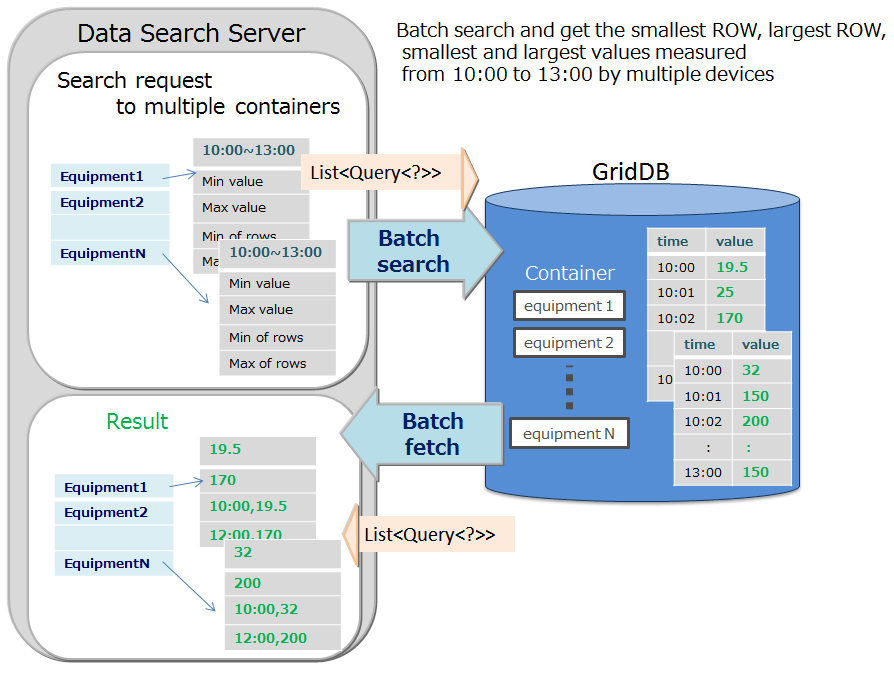

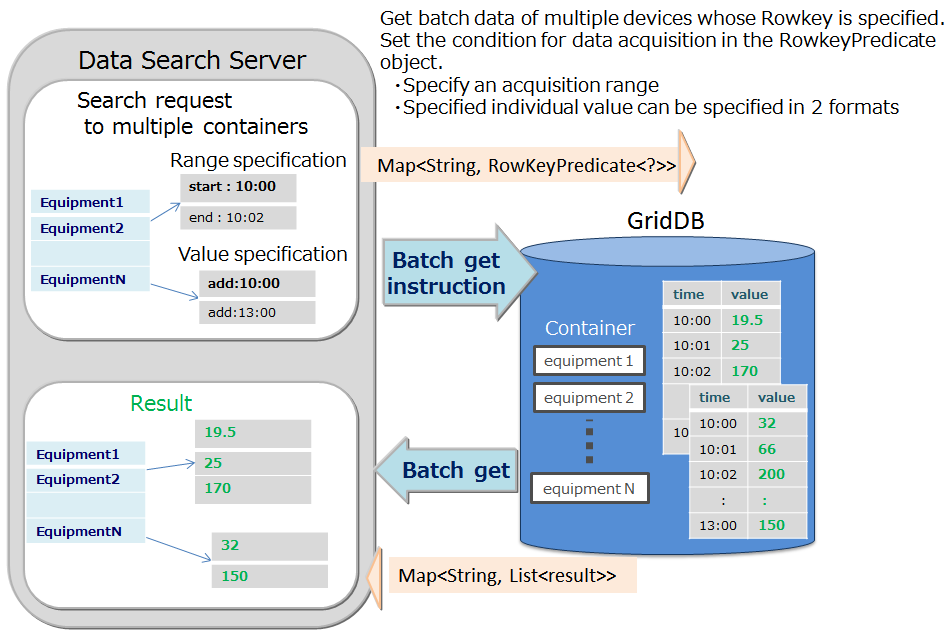

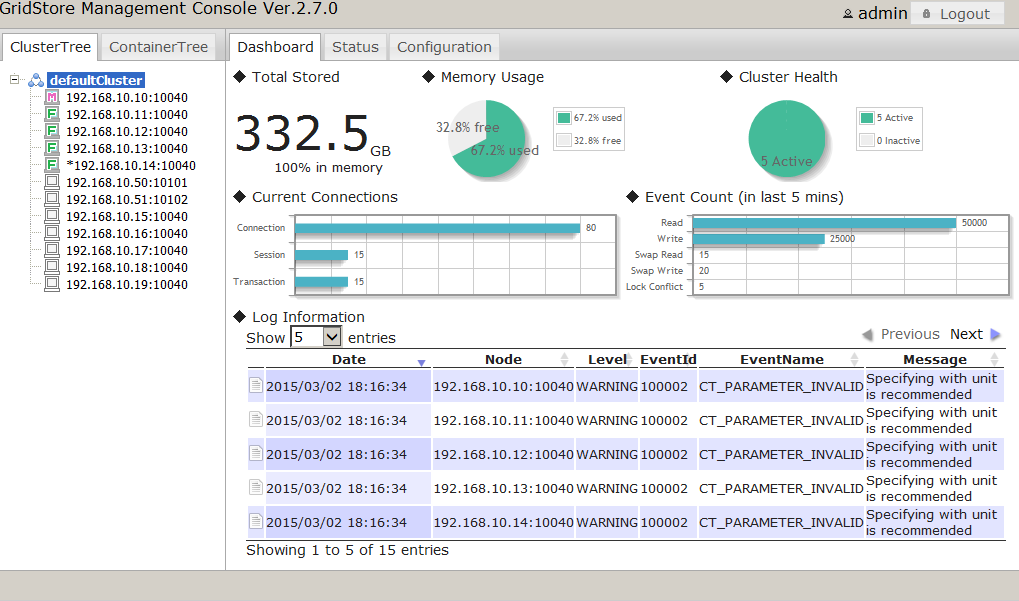

Hash partitioning